Generative AI vs. LLMs: A Complete Guide to Their Differences and How They Work

Preetam Das

June 19, 2025

14 min

It’s almost impossible to stay away from AI today, right?

From app updates and tech headlines to electronics and everyday tools, it seems like everything now has to be AI-driven. In fact, AI is even changing how we search for information itself. For the longest time, our default habit was to “Google it.” But within just a couple of years, that’s shifted to “Ask ChatGPT or Gemini,” whichever you prefer.

With this rapid adoption, a lot of terms are being thrown around, some used interchangeably, some misunderstood. Two of the most common and often confused terms are generative AI and large language models (LLMs).

What Are Generative AI and Large Language Models (LLMs)?

Although both are closely connected, they aren’t the same thing. Each serves a different purpose, with its own use cases, capabilities, and underlying architecture. The simplest way to understand the relationship is that LLMs are a subset of generative AI, which means all LLMs are forms of generative AI, but not all generative AI models are LLMs.

What is Generative AI?

Generative AI refers to a class of AI systems designed to create original content by learning patterns from massive datasets. Unlike traditional AI, which is built to recognize, classify, or predict, generative models produce something entirely new, be it text, an image, an audio track, or even a piece of code.

These models learn from real-world examples, then generate output that mimics the style, tone, and structure of what they were trained on, often using architectures such as autoencoders, GANs, diffusion models, or attention-based transformers. They're now used everywhere, from content creation and marketing to design, research, and product development.

What is a Large Language Model (LLM)?

Large Language Models (LLMs) are a type of generative AI trained specifically on text. They're built to process and generate human-like text by learning from massive datasets made up of books, websites, articles, transcripts, and other written content. This training helps them capture not just the words themselves, but the meaning, tone, structure, and intent behind them.

Today, LLMs are becoming foundational across industries. Beyond just writing, they’re being used in research, education, customer support, and digital experiences, helping teams work faster, communicate better, and make more informed decisions.

In fact, most modern chatbots and AI agent builders are built on LLMs, such as the GPT models behind ChatGPT. One of the most significant areas of impact has been customer service, where they enable instant, intelligent responses at scale.

Generative AI vs. Large Language Model

| Aspect | Generative AI | Large Language Models (LLMs) |
|---|---|---|
| Definition | A broad class of AI models that create new, original content based on training data. | A specialized type of generative AI focused exclusively on understanding and generating text. |
| Scope | Covers a wide range of generative models across different formats, including text, visuals, and sound. | Focused purely on text-based tasks like writing, summarizing, translating, and answering questions. |
| Use Cases | Image generation, music composition, video synthesis, product design, drug discovery, etc. | Chatbots, search assistants, document summarization, customer service, and email generation. |
| Architecture | Includes GANs, VAEs, diffusion models, NeRFs, RNNs, transformers, etc. | Built using transformer-based deep learning models that rely on attention mechanisms to understand context across long sequences. |
| Training Data | Trained on multimodal data like image sets, video clips, sound recordings, codebases, or any dataset matching the output type. | Exclusively trained on massive text datasets (books, websites, forums, etc.). |
| Training Objective | To learn patterns and structures in data and generate novel content across different modalities. | To predict and generate the next token or word in a sequence based on prior context. |
| Strengths | Creative flexibility, support for many data formats, and rich visual and multimedia generation. | High accuracy in language tasks; understands semantics, style, tone, and nuance. |
| Limitations | Often challenged by output quality, bias, data privacy concerns, and potential misuse for misinformation or deepfakes. | May hallucinate facts, is limited to language tasks, and requires careful prompt engineering. |
| Resource Requirements | Often very high, especially for video or 3D generation. | Text-based, so generally more efficient than full multimodal models. |
| Examples | DALL·E, Midjourney, Runway, Sora, ElevenLabs, Adobe Firefly. | ChatGPT, Claude, Google Gemini, GitHub Copilot, Mistral. |

The Process and Architecture Behind Generative AI and LLMs

How Generative AI Works

Generative AI models are designed to learn patterns from existing data and use that knowledge to produce original outputs. Unlike traditional discriminative models that classify or predict based on existing data, generative models aim to create something new.

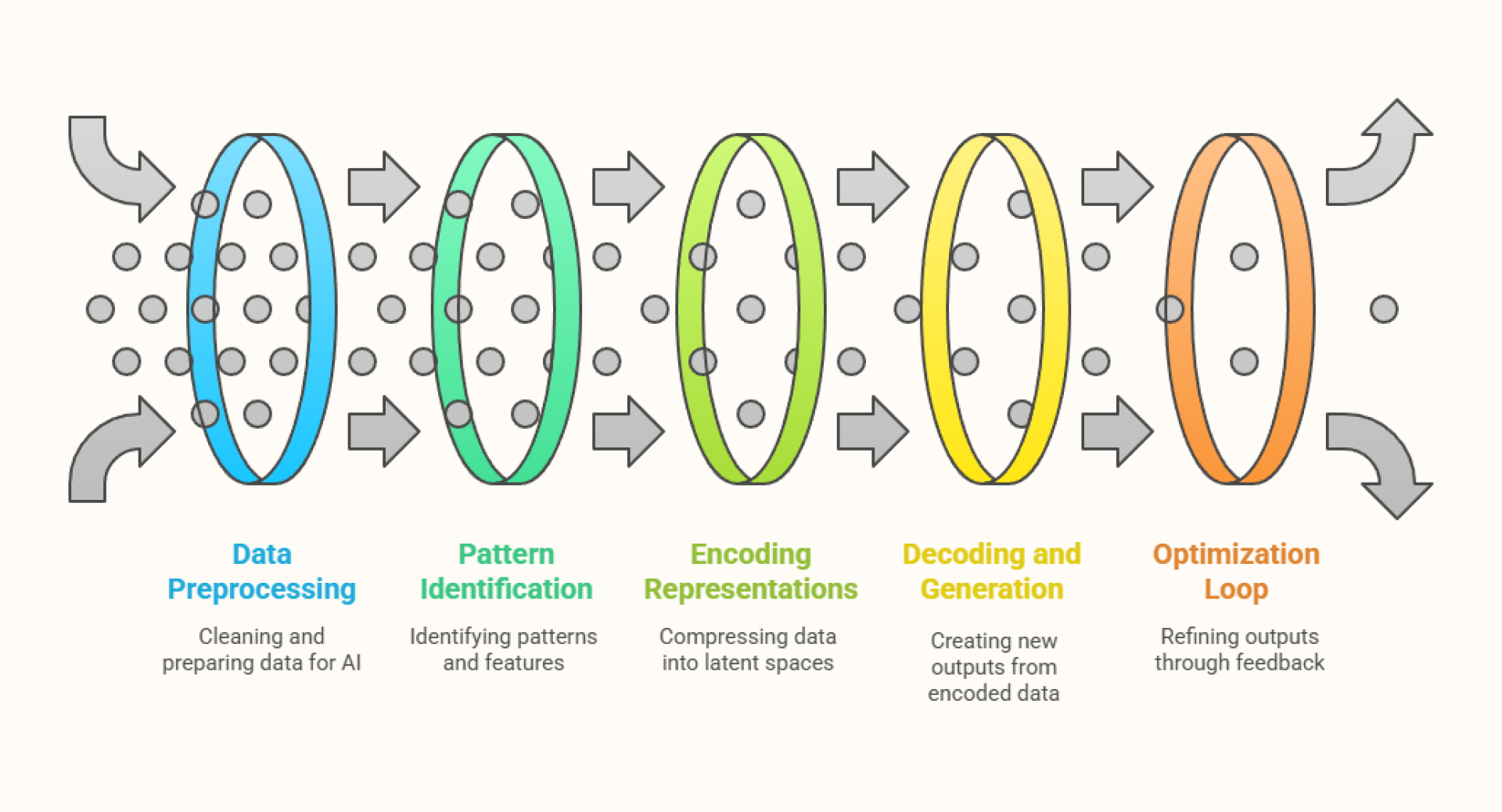

Data ingestion and preprocessing

The process begins with large, diverse datasets of images, audio, text, or video. The data is cleaned, labeled where needed, normalized, and encoded into formats suitable for machine learning models.

Pattern identification and feature learning

Using deep neural networks, the model identifies patterns, structures, statistical dependencies, and latent variables within the input data. This phase involves learning correlations between features, such as the relationships between color, texture, and shape in image datasets.

Encoding representations

Many generative models encode input data into compact latent representations, for example with autoencoders or transformer encoders. These representations compress essential information while discarding irrelevant noise, enabling the model to capture the underlying data distribution.

Decoding and generation

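The encoded features are then used to generate new samples. Autoregressive models predict one element at a time from a probability distribution that mirrors the learned patterns, while other families, such as GANs and diffusion models, produce a whole sample at once or refine it over several steps.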

Optimization and feedback loop

Loss functions measure how close the generated output is to real data, and the model's weights and parameters are adjusted to reduce that loss. Techniques like reinforcement learning from human feedback (RLHF) and adversarial training help models refine outputs iteratively.
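The loss-and-adjust loop can be sketched with a deliberately tiny example. This assumes a "model" with a single learnable parameter `mu` and a mean-squared-error loss; the data values, learning rate, and step count are made up for illustration, and real systems apply the same idea to billions of parameters using automatic differentiation.

```python
# Toy "real data" the model should learn to imitate (illustrative values).
data = [4.8, 5.1, 5.3, 4.9, 5.0]
mu = 0.0               # the single learnable parameter
learning_rate = 0.1    # assumed step size

def loss(mu, data):
    """Mean squared error between the model's output and the real samples."""
    return sum((x - mu) ** 2 for x in data) / len(data)

history = []
for step in range(100):
    # Gradient of the MSE loss with respect to mu.
    grad = sum(-2 * (x - mu) for x in data) / len(data)
    mu -= learning_rate * grad  # move the parameter against the gradient
    history.append(loss(mu, data))

print(mu, history[0], history[-1])
```

Each pass computes how wrong the current parameter is, then nudges it in the direction that lowers the loss. Here `mu` drifts toward the average of the data, which is the value that minimizes squared error.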

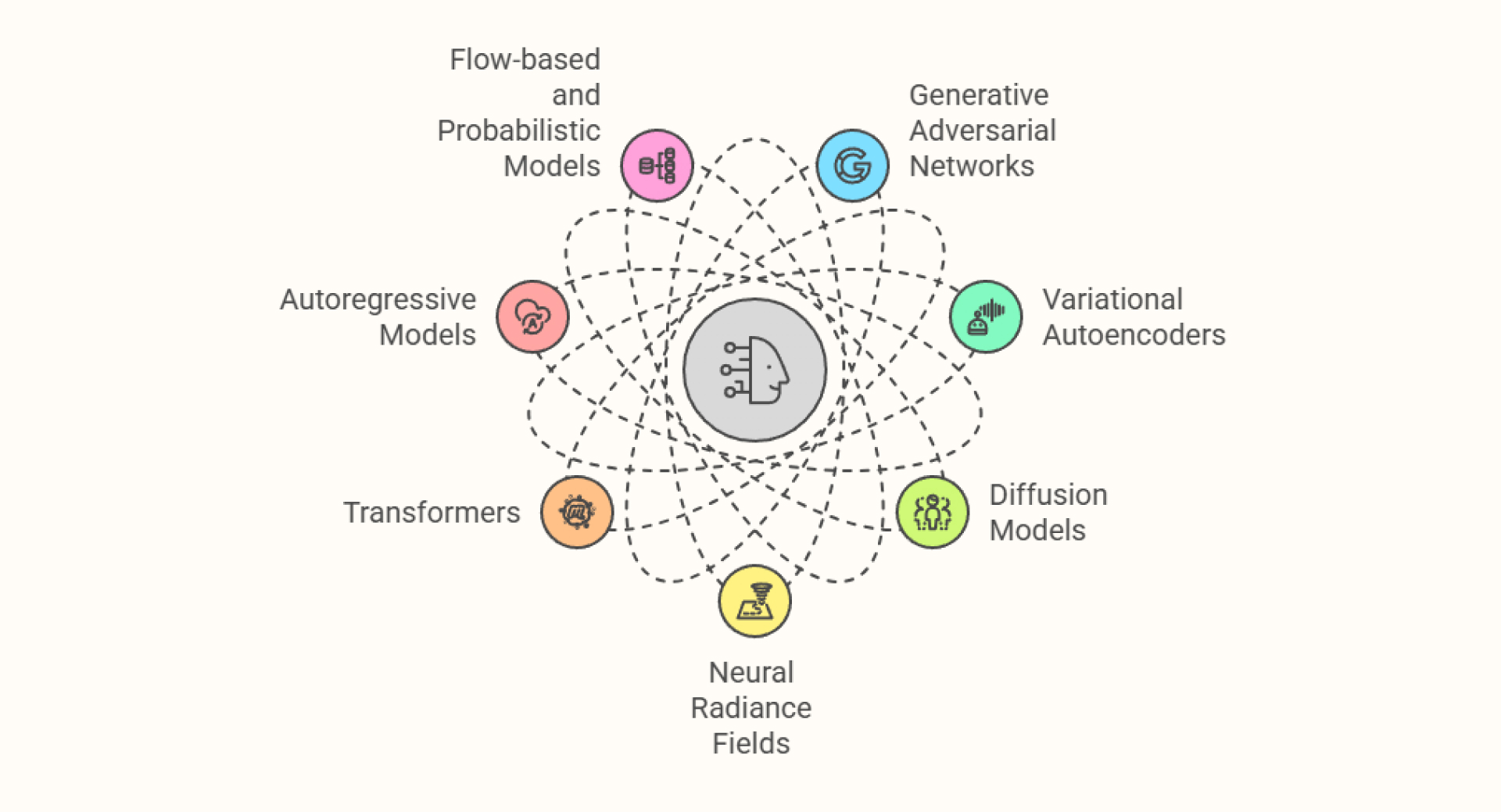

Architectures of generative AI

Generative AI uses multiple architectural families, each optimized for specific types of content generation.

- Generative adversarial networks (GANs)

GANs use two models: a generator that creates fake data and a discriminator that judges whether it's real. They train by competing with each other, which improves the realism of the outputs. Ideal for image synthesis, style transfer, and deepfakes.

- Variational autoencoders (VAEs)

These encode input into a compressed space, then decode it with controlled randomness to generate variations. Useful for generating similar but not identical outputs, like different facial expressions or music styles.

- Diffusion models

These models learn to generate new data by reversing the process of adding noise. They're highly effective for producing detailed, high-quality images and are used in tools like Stable Diffusion and Midjourney.

- Neural radiance fields (NeRFs)

NeRFs reconstruct 3D scenes from 2D images by learning how light interacts across views. Commonly used for photorealistic 3D generation in AR/VR and robotics.

- Transformers (non-language)

Originally built for language tasks, transformers are now used in image and video generation too. They apply self-attention to understand how different parts of the data relate over long sequences.

- Autoregressive models

These models generate content step by step, predicting each next element based on what came before. Used in applications like speech synthesis, code generation, and text-to-image models.

- Flow-based and probabilistic models

These explicitly model data distributions, allowing precise control and interpretation. Useful in fields like science and finance, where understanding probability matters as much as generation.
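As one illustration from the list above, the noise-adding process that diffusion models learn to reverse can be sketched in a few lines. This is only the forward (noising) half, using a linear noise schedule and the closed-form sampling step from the standard DDPM formulation. The learned denoising network is omitted, and the schedule values, step count, and four-number "image" are assumptions chosen for illustration.

```python
import math
import random

def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule."""
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t from x_0: sqrt(abar_t) * x0 + sqrt(1 - abar_t) * gaussian noise."""
    signal = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    return [signal * v + noise_scale * rng.gauss(0.0, 1.0) for v in x0]

rng = random.Random(0)
alpha_bars = make_alpha_bars(num_steps=1000)
x0 = [1.0, -1.0, 0.5, 0.0]  # stand-in for a few pixel values

slightly_noisy = add_noise(x0, 10, alpha_bars, rng)   # still close to x0
mostly_noise = add_noise(x0, 999, alpha_bars, rng)    # almost pure noise
print(alpha_bars[10], alpha_bars[999])
```

Early steps keep almost all of the original signal, while the last step keeps almost none. A diffusion model is trained to undo these steps, so at generation time it can start from pure noise and work backward to a clean sample.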

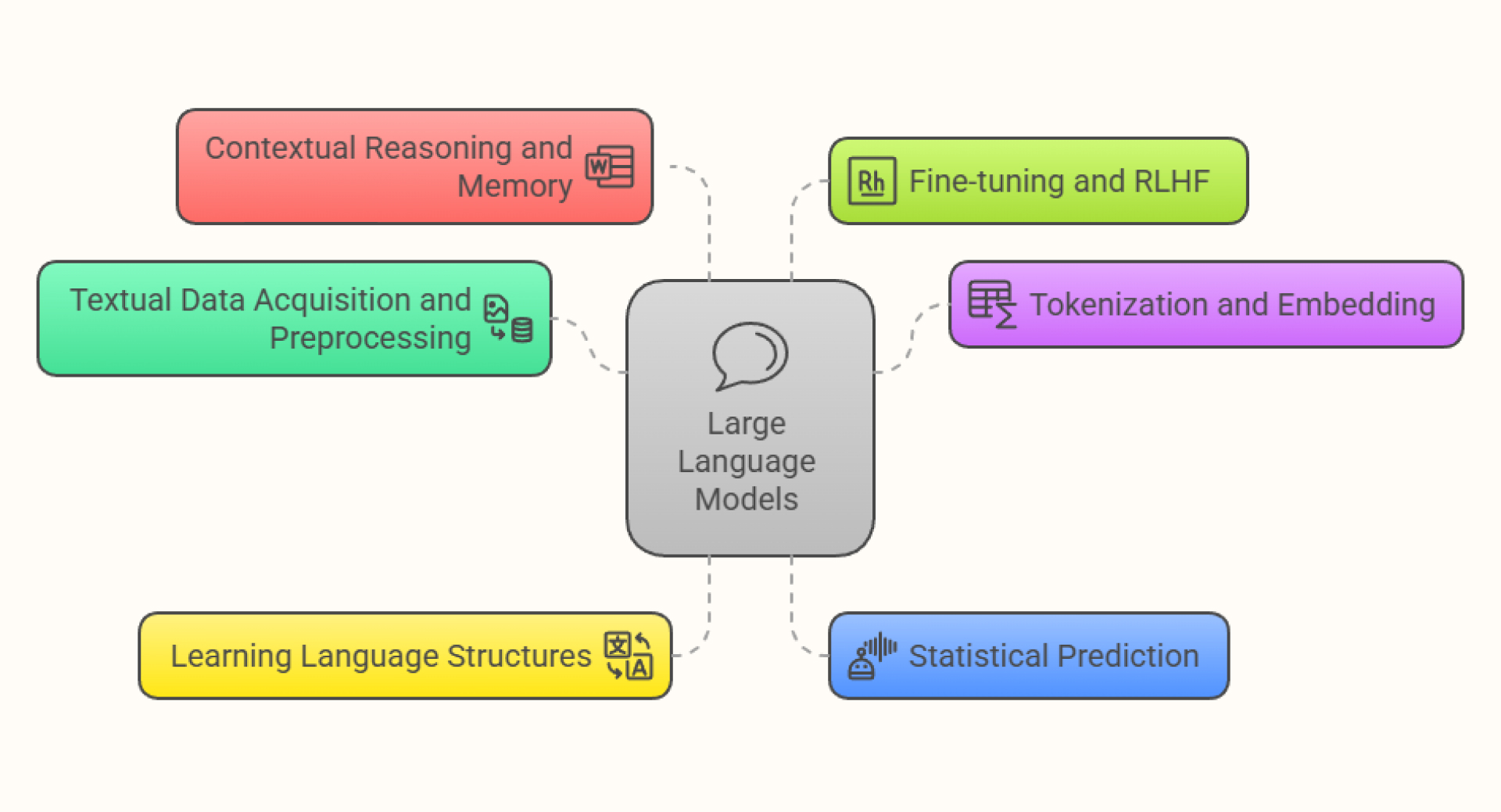

How Large Language Models (LLMs) Work

LLMs are designed to understand and generate natural language by learning from massive volumes of unstructured text and making statistically informed predictions about which token should come next.

- Textual data acquisition and preprocessing

LLMs are trained on huge corpora of books, articles, websites, forums, and transcripts. The raw text is cleaned, deduplicated, and filtered for quality before training. Classical NLP steps such as stemming and lemmatization are generally unnecessary, because subword tokenization already handles word variants.

- Tokenization and embedding

The model breaks text into tokens and converts them into high-dimensional vectors known as embeddings. These embeddings encode semantic, syntactic, and even emotional information about the words.

- Learning language structures

During training, LLMs use masked language modeling or next-token prediction to learn grammar, syntax, semantics, and discourse patterns. They capture deep structures like coreference, subject-verb agreement, and pragmatic inferences.

- Statistical prediction

At runtime, LLMs predict the next token based on context. The model outputs logits, raw scores for every token in the vocabulary, and a softmax function converts them into a probability distribution over all possible next tokens. This process allows the model to construct coherent, fluent language sequences.

- Contextual reasoning and memory

LLMs preserve long-range dependencies using self-attention layers. They can track context over hundreds or thousands of tokens, enabling them to answer multi-part questions or continue detailed narratives with high coherence.

- Fine-tuning and RLHF

After pre-training, LLMs are refined using reinforcement learning from human feedback (RLHF), which helps them better align with user expectations, ethical constraints, and conversational clarity.
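The statistical prediction step above can be sketched as follows. The four-word vocabulary and the logit values are made up for illustration (a real model has tens of thousands of tokens and computes logits with a neural network), and the helper names `softmax` and `sample_top_k` are assumptions, not any library's API. The sketch shows how raw scores become probabilities, and how greedy selection differs from temperature-scaled top-k sampling.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores (logits) into probabilities that sum to 1."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_top_k(logits, k, temperature, rng):
    """Keep the k highest-scoring tokens, renormalize, and sample one index."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    probs = softmax([logits[i] for i in top], temperature)
    return rng.choices(top, weights=probs, k=1)[0]

# Hypothetical vocabulary and scores for the prompt "The cat sat on the ...".
vocab = ["mat", "sofa", "moon", "banana"]
logits = [3.2, 2.1, 0.3, -1.0]

probs = softmax(logits)
greedy_token = vocab[probs.index(max(probs))]  # always the most likely token
sampled_token = vocab[sample_top_k(logits, k=2, temperature=0.8, rng=random.Random(0))]
print(greedy_token, sampled_token)
```

Lower temperatures sharpen the distribution toward the top token, and higher temperatures flatten it. Restricting sampling to the top k candidates keeps unlikely tokens such as "banana" out of the output entirely.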

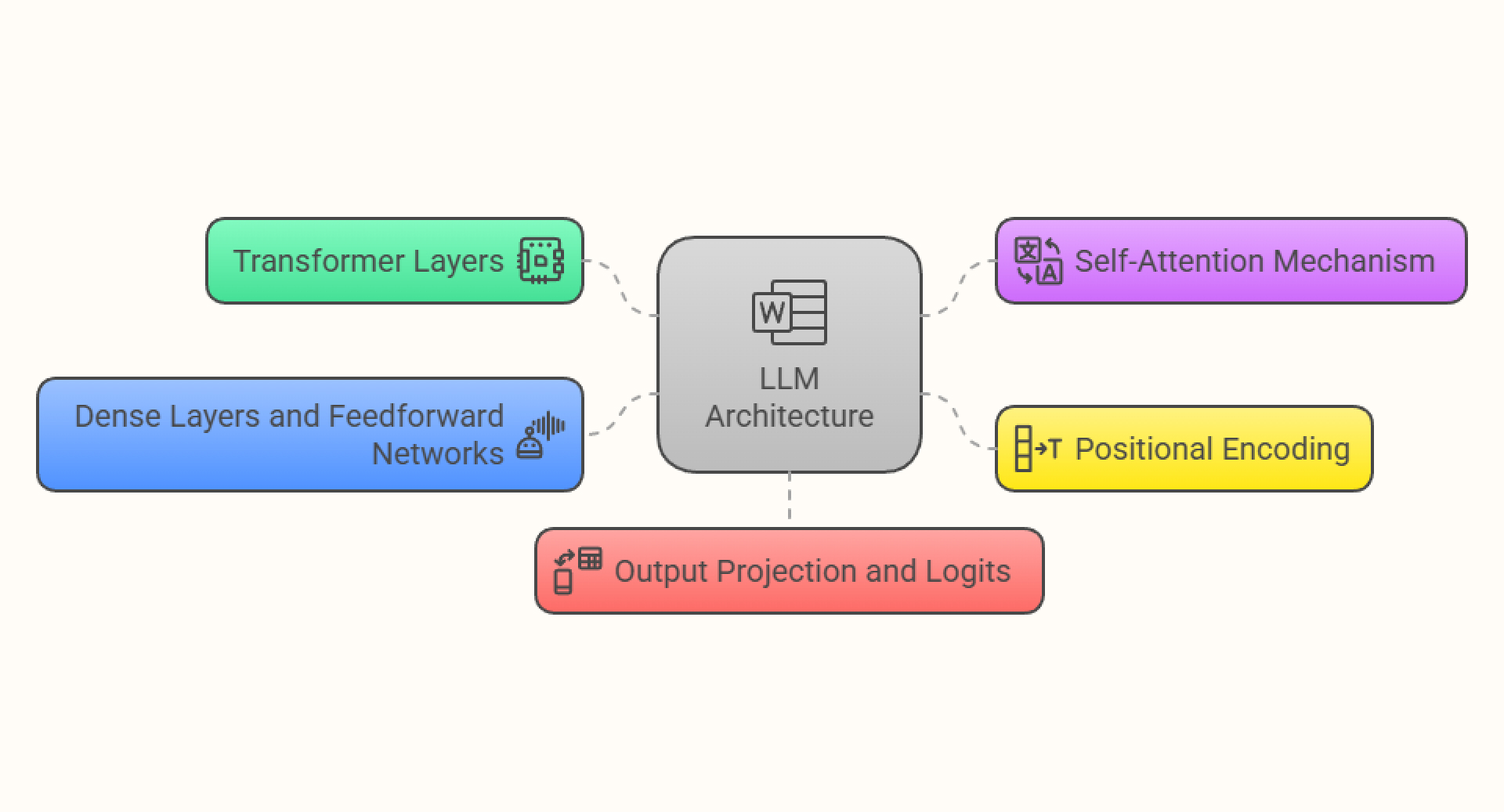

Architecture of LLMs

Most modern LLMs use transformer-based architectures, which enable efficient processing of text, better context understanding, and support for longer input sequences. This structure allows them to perform a wide range of language tasks like summarization, translation, and conversation.

- Transformer layers

These use multi-head self-attention to identify relationships between all tokens in a sequence, no matter how far apart they are. Unlike RNNs, which process tokens one at a time, transformer layers process a whole sequence in parallel, significantly improving efficiency and scalability in long-form language tasks.

- Self-attention mechanism

Calculates attention weights that determine how much focus to place on each token relative to the others in the sequence. This enables the model to handle complex language patterns like metaphors, idioms, or references that depend on distant context.

- Positional encoding

Because transformers don't process tokens sequentially, positional encodings are added to each token's embedding to capture order information. This helps the model distinguish between sentences that use the same words in a different order.

- Dense layers and feedforward networks

Fully connected layers apply transformations to the attention outputs, enabling deeper abstraction and pattern recognition. These help the model build a richer understanding of context, structure, and semantic meaning as inputs pass through successive layers.

- Output projection and logits

At the final stage, the model maps internal representations to its vocabulary space using an output projection layer. This produces logits, raw scores that a softmax function converts into the probability of each token appearing next. Text generation is guided by decoding strategies like greedy decoding, top-k sampling, or temperature-based sampling to balance accuracy and creativity.
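The self-attention and positional encoding components above can be sketched in plain Python. This is a simplified single-head version under stated assumptions: queries, keys, and values are the inputs themselves, whereas a real transformer applies learned projection matrices and several heads in parallel. The three 4-dimensional "embeddings" are made-up values, and the sinusoidal formula follows the original transformer design.

```python
import math

def positional_encoding(position, dim):
    """Sinusoidal encoding: even indices use sine, odd indices use cosine."""
    return [
        math.sin(position / 10000 ** (i / dim)) if i % 2 == 0
        else math.cos(position / 10000 ** ((i - 1) / dim))
        for i in range(dim)
    ]

def softmax(scores):
    """Normalize scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product attention with queries = keys = values = the inputs."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # Each output is a weighted mix of all token vectors.
        mixed = [sum(w * v[d] for w, v in zip(weights, vectors)) for d in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Made-up embeddings for a three-token sequence.
embeddings = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
inputs = [[e + p for e, p in zip(emb, positional_encoding(pos, 4))]
          for pos, emb in enumerate(embeddings)]
outputs, weights = self_attention(inputs)
print(weights)
```

Each row of `weights` shows how much one token attends to every token in the sequence, including itself. Because each token's row is computed independently of the others, the work can run in parallel, which is the efficiency advantage over step-by-step recurrent models.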

Common Challenges in Generative AI and LLMs

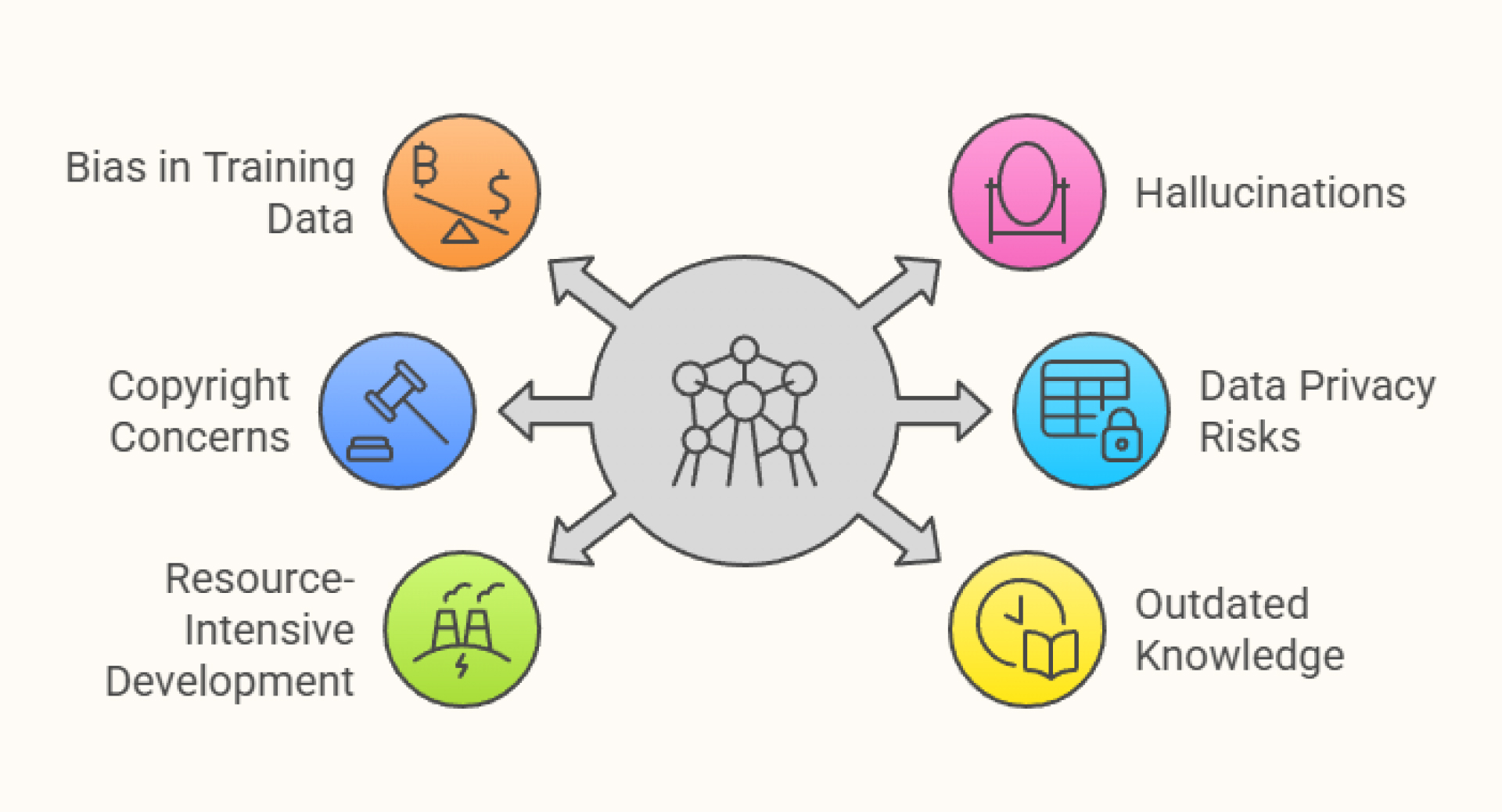

Powerful as they are, both technologies have limitations. The challenges below are common to generative AI and LLMs alike, largely because they share the same foundations: large-scale training data, complex model architectures, and unpredictable output generation.

- Bias in training data

These systems learn from public datasets that often reflect real-world biases. As a result, outputs may unintentionally replicate stereotypes or unfair associations. Human feedback mechanisms, like RLHF, can also introduce subjective or cultural bias depending on who provides the feedback.

- Hallucinations

Models sometimes generate false or misleading outputs, known as hallucinations. These are especially problematic in factual or high-stakes scenarios like healthcare, finance, or legal documentation. Errors may be subtle and difficult to detect, making them riskier than obvious flaws in visual content.

- Copyright and ownership concerns

Because models are trained on vast, scraped datasets that may include copyrighted material, questions around ownership, licensing, and fair use remain unresolved. This has led to legal actions, especially around the commercial use of generated outputs.

- Data privacy risks

The ingestion of unfiltered or sensitive data during training creates potential privacy issues. There's also a risk that private or confidential information could resurface in model outputs, especially in open or public-facing deployments.

- Resource-intensive development

Training large models, whether LLMs or multimodal generative systems, demands immense computational power, energy, and infrastructure. This limits accessibility and raises concerns about sustainability and carbon footprint.

- Outdated knowledge

Unless models are continuously updated or connected to real-time sources, they reflect the state of the world only up to their last training date. This is particularly limiting for time-sensitive applications like news, medicine, or current events.

That’s why, when models are trained on your own data, they tend to perform better, reducing hallucinations, staying more relevant, and giving you control over what they say. Thinkstack makes this easy by letting you build AI agents trained on content you already own, whether it’s documents, websites, Notion pages, or even live data from apps like Zapier.

It also helps overcome common issues like outdated knowledge and resource-intensive setups. You can update responses quickly, connect to multiple data sources, and fine-tune outputs all without needing to write a single line of code. Built-in privacy controls and secure access settings ensure that your data stays protected.

Challenges in generative AI

Generative AI covers a wide range of modalities beyond language, which introduces its own set of technical and ethical challenges.

- Content quality across modalities

Maintaining consistency and realism across different output types like video, music, and images is difficult. High-fidelity generation, especially in video, demands intensive training and post-processing. Output quality often varies across formats and styles.

- Hardware and speed limitations

Generating high-quality outputs, especially videos or 3D scenes, remains highly resource-intensive. Models like diffusion networks or NeRFs require significant computing power, which makes running them locally impractical for most users. Even cloud-based tools often restrict output volume due to high processing costs, limiting generations under standard subscriptions and making scalability difficult for budget-conscious or consumer-grade applications.

- Limited generalization across domains

Some generative models perform exceptionally well in specific styles or narrow tasks but struggle to generalize outside their trained domain.

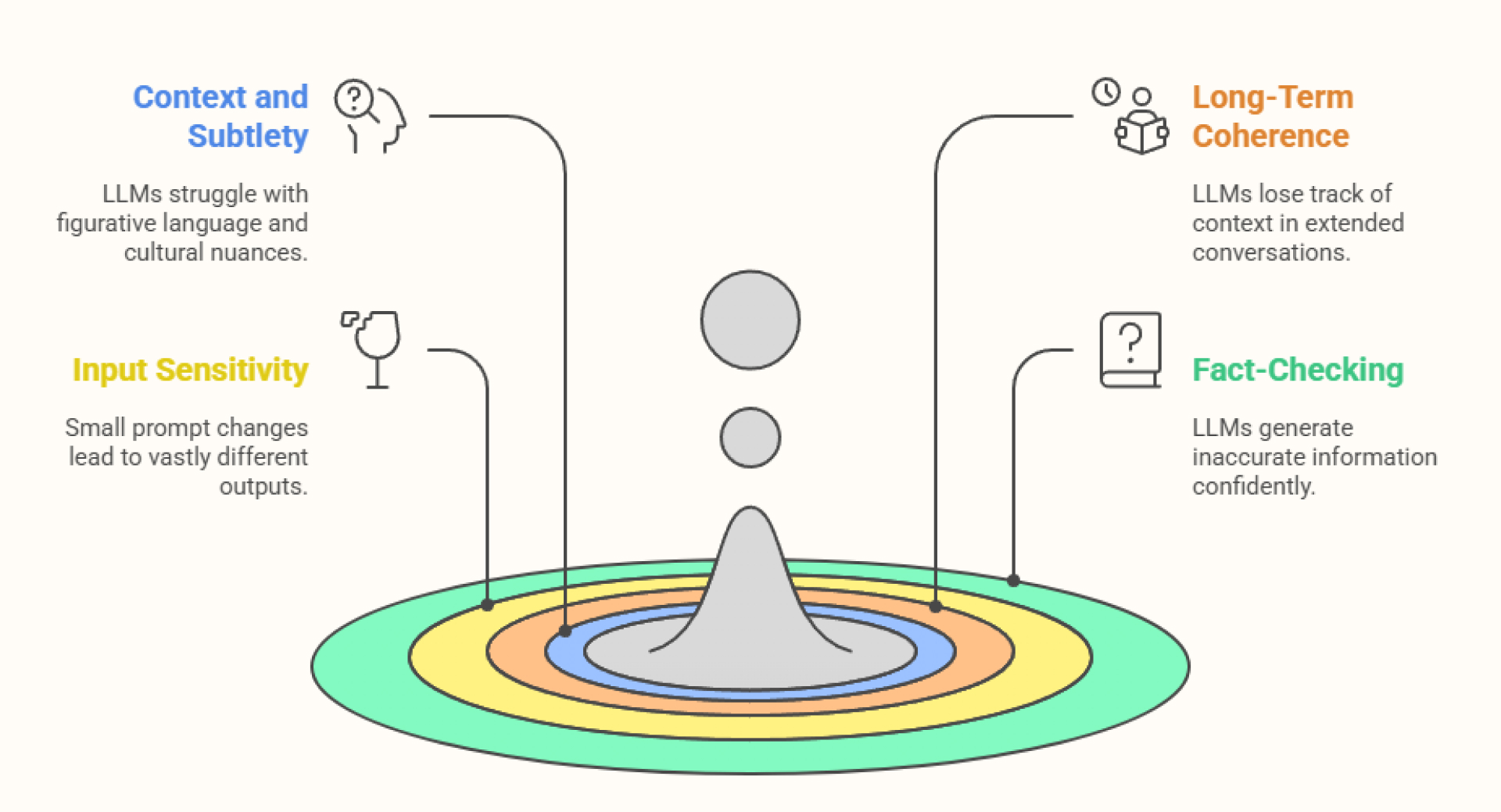

Challenges in large language models (LLMs)

LLMs face unique hurdles because of the linguistic complexity they handle and the expectations of their fluency in human conversation.

- Understanding context and subtlety

Even large models often struggle with figurative language, cultural nuance, idioms, or sarcasm. Outputs may appear fluent but lack depth or real understanding. This can lead to surface-level coherence without conceptual accuracy.

- Maintaining long-term coherence

Over extended conversations or long-form generation, LLMs can lose track of context or produce internally inconsistent content. This becomes noticeable in tasks like storytelling, summarization, or technical documentation.

- Input sensitivity and prompt fragility

Small changes in prompt wording can produce vastly different outputs, making it difficult to guarantee reliable results. Prompt engineering is often required to extract useful content, which can be a barrier for non-technical users.

- Fact-checking and trust

LLMs don't have built-in mechanisms to verify the truthfulness of their outputs. They can confidently generate information that sounds correct but is factually inaccurate. This undermines trust and places the burden of validation on users.

Conclusion

Generative AI and LLMs are increasingly becoming part of how businesses and individuals work with information and content. From automating text generation to creating visuals, audio, or video, they offer practical ways to save time and improve output across different tasks.

If your work is centered on language, like drafting emails, writing content, summarizing documents, or building chatbots, LLMs are more suitable. For creative needs like image editing, video creation, or generating audio, broader generative AI tools are a better fit.

In many real-world cases, though, the two often work together. Tools like ChatGPT and Gemini now support text, image, and even audio inputs, while visual platforms like Runway and Firefly use language prompts to generate media. This overlap makes it easier to integrate both into the same workflow.

Choosing between them comes down to what you’re trying to create and the format you need. In some cases, combining both gives better results than relying on just one.

If you’re exploring how to use these technologies in your product or workflow, platforms like Thinkstack make it easier to deploy AI-powered solutions tailored to your business goals without any complexity.
