LLM vs NLP: The Complete Guide to Understanding the Difference

Preetam Das

July 18, 2025

11 min

So you’re curious about AI.

If you’ve started exploring the field, you’ve likely come across terms like Generative AI, LLMs, and NLP. These are among the very first concepts you’ll learn about, and they often appear together, which can make them easy to confuse.

They each play a different role in the AI ecosystem, and understanding the difference is crucial for building a solid foundation in AI. In this article, we’ll break down what a large language model (LLM) is, what natural language processing (NLP) means, and how the two differ.

Natural Language Processing vs. Large Language Models

Natural Language Processing (NLP) and Large Language Models (LLMs) are closely connected, but they’re not the same. NLP is the broader discipline: it has been around for decades and focuses on how computers can understand, interpret, and generate human language. LLMs are a powerful type of model developed within this field. The confusion comes from their overlap in tasks and terminology, especially since advanced LLMs like GPT can perform most common NLP tasks.

What is Natural Language Processing (NLP)?

NLP is a branch of AI that helps machines understand and work with human language, whether that means reading, analyzing, or generating text. It forms the foundation for most language-based AI systems used today.

Earlier NLP systems were built using rule-based methods or classical machine learning models. These approaches relied on manually crafted linguistic rules or statistical techniques trained on limited, task-specific datasets.

They were effective for narrow applications like spam detection, part-of-speech tagging, keyword extraction, and named entity recognition, especially when accuracy and interpretability were prioritized over scale.

However, their performance was often constrained by domain specificity, lack of contextual understanding, and limited adaptability to new or complex language patterns.
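To make the classical, statistical approach concrete, here is a minimal sketch of TF-IDF keyword extraction, one of the techniques such systems relied on. It assumes a tiny hand-written stopword list and a simple regex tokenizer (both illustrative choices, not a standard): each term is scored by how often it appears in a document, discounted by how many documents contain it.

```python
import math
import re
from collections import Counter

# Illustrative stopword list; real systems use much larger, curated lists.
STOPWORDS = {"a", "an", "the", "to", "is", "for", "your", "and", "of"}

def tokenize(text: str) -> list[str]:
    # Rule-based tokenizer: lowercase, keep runs of letters/digits, drop stopwords.
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS]

def tfidf_keywords(docs: list[str], top_k: int = 3) -> list[list[str]]:
    """Return the top_k highest-scoring TF-IDF terms for each document."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    # Document frequency: how many documents contain each term.
    df = Counter(term for toks in tokenized for term in set(toks))
    results = []
    for toks in tokenized:
        tf = Counter(toks)
        scores = {
            # Length-normalized term frequency times inverse document frequency.
            term: (count / len(toks)) * math.log(n_docs / df[term])
            for term, count in tf.items()
        }
        # Highest score first; ties broken alphabetically so output is deterministic.
        ranked = sorted(scores, key=lambda t: (-scores[t], t))
        results.append(ranked[:top_k])
    return results

docs = [
    "Win a free prize now, claim your free prize",
    "Meeting moved to Friday, see the agenda",
    "Your invoice for the meeting room is attached",
]
print(tfidf_keywords(docs))
# [['free', 'prize', 'claim'], ['agenda', 'friday', 'moved'], ['attached', 'invoice', 'room']]
```

The scores come purely from counting: "free" and "prize" win in the first message because they repeat there and appear nowhere else. The method has no notion of context, which is exactly the limitation described above.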

Modern NLP relies heavily on machine learning and deep learning, particularly neural network architectures like transformers.

These models are trained on large-scale, unstructured datasets, allowing them to capture complex patterns, context, and intent in human language. Unlike earlier approaches, they support more flexible, scalable, and general-purpose applications from semantic search and sentiment understanding to document summarization, conversational assistants, and multilingual translation.

How NLP Shapes the Capabilities of LLMs and AI

Without NLP, there would be no LLMs. LLMs are not a replacement for natural language processing but a more advanced development within it. Every capability of a large language model is built on decades of NLP research, linguistic frameworks, and foundational techniques that made language understanding in machines possible in the first place.

LLMs build upon these foundations through deep learning and transformer-based architectures, enabling them to operate at scale with a richer understanding of context. Core components of LLMs, such as tokenization, embeddings, attention mechanisms, and semantic modeling, are built directly on NLP principles. These would not function without the preprocessing methods, linguistic structures, and task definitions that NLP has long provided.
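Tokenization is a good example of this lineage. Many LLMs split text into subword units learned with byte-pair encoding (BPE), a data-compression idea adapted for NLP. The sketch below learns merge rules from a toy word list by repeatedly fusing the most frequent adjacent pair of symbols. It is a simplified illustration: it skips the end-of-word markers, byte-level handling, and pre-tokenization used by production tokenizers, and its alphabetical tie-breaking is an arbitrary choice made here for determinism.

```python
from collections import Counter

def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one fused symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i < len(symbols) - 1 and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return merged

def learn_bpe(words, num_merges):
    """Learn up to `num_merges` BPE merge rules from a list of words (toy version)."""
    # Start with each word split into characters, weighted by how often it occurs.
    vocab = Counter(tuple(word) for word in words)
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs across the whole corpus.
        pairs = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break
        # Most frequent pair wins; ties go to the alphabetically smallest pair.
        best = min(pairs, key=lambda p: (-pairs[p], p))
        merges.append(best)
        # Apply the new merge rule to every word in the vocabulary.
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[tuple(merge_pair(symbols, best))] += freq
        vocab = new_vocab
    return merges

def apply_bpe(word, merges):
    """Tokenize a (possibly unseen) word by replaying the learned merges in order."""
    symbols = list(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return symbols

words = ["low", "low", "lower", "newest", "newest", "widest"]
merges = learn_bpe(words, num_merges=3)
print(merges)                       # [('e', 's'), ('es', 't'), ('l', 'o')]
print(apply_bpe("lowest", merges))  # ['lo', 'w', 'est']
```

Note that "lowest" never appears in the training words, yet it still splits into familiar pieces. This is why subword tokenization lets a model handle rare or new words.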

Even today, NLP continues to serve as the invisible yet essential layer powering the language intelligence behind nearly every AI system. It plays a critical role in text normalization, language detection, intent recognition, syntactic parsing, and entity tagging. And for use cases that don’t require the scale or complexity of LLMs, classical NLP techniques offer a lightweight, cost-effective, and more interpretable alternative.

Specifically, NLP enables LLMs to:

- Preprocess input effectively by cleaning, segmenting, and standardizing raw text for analysis.

- Understand linguistic structure through part-of-speech tagging, dependency parsing, and entity recognition.

- Resolve ambiguity by applying contextual rules to determine word meaning in complex sentences.

- Evaluate output quality by using established NLP metrics like BLEU, ROUGE, and F1-score.

- Adapt across tasks by leveraging task-specific fine-tuning frameworks grounded in traditional NLP methods.
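Two of the items above, preprocessing and output evaluation, are easy to see in miniature. The sketch below normalizes text (lowercasing and whitespace splitting) and then scores a generated sentence against a reference by unigram overlap, which is the core idea behind ROUGE-1 and token-level F1. It is deliberately simplified: official ROUGE implementations add their own tokenization and optional stemming, and BLEU instead combines clipped n-gram precisions with a brevity penalty. Neither is reproduced here.

```python
from collections import Counter

def normalize(text: str) -> list[str]:
    # Minimal preprocessing: lowercase and split on whitespace.
    return text.lower().split()

def unigram_f1(candidate: str, reference: str) -> float:
    """Unigram-overlap F1 (ROUGE-1-style) between a candidate and a reference text."""
    cand, ref = normalize(candidate), normalize(reference)
    # Multiset intersection: a shared word counts at most min(count in cand, count in ref) times.
    overlap = sum((Counter(cand) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand)  # share of candidate words found in the reference
    recall = overlap / len(ref)      # share of reference words recovered by the candidate
    return 2 * precision * recall / (precision + recall)

score = unigram_f1("The cat sat on the mat", "the cat is on the mat")
print(round(score, 3))  # 0.833
```

Five of the six words overlap in each direction, so precision and recall are both 5/6 and so is their harmonic mean. Without the lowercasing step, "The" and "the" would not match and the score would drop.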

What is a Large Language Model (LLM)?

Large language models (LLMs) are a specialized class of models within the field of natural language processing (NLP). These models are trained using self-supervised learning on massive datasets that include web pages, books, scientific papers, and code.

LLMs learn by analyzing vast amounts of text to determine what word or phrase is likely to come next, based on what’s already written. This allows them to generate fluent, human-like responses across a wide variety of language tasks.
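The next-word idea can be illustrated with a far simpler model than an LLM: a bigram model that counts which word follows which in a tiny corpus and turns those counts into probabilities. This is a classical NLP technique, not how LLMs are built (they use neural networks over subword tokens and condition on long contexts). Still, the training signal is the same in spirit: predict what comes next from what came before. The corpus and the `<s>` start marker are illustrative choices.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count, for every word, how often each other word follows it."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        # "<s>" marks the sentence start so the first word also has a predecessor.
        words = ["<s>"] + sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Maximum-likelihood estimate of P(next word | previous word)."""
    following = counts.get(prev.lower(), Counter())
    total = sum(following.values())
    return {word: c / total for word, c in following.items()} if total else {}

def predict_next(counts, prev):
    """Return the most likely next word, or None if `prev` was never seen."""
    probs = next_word_probs(counts, prev)
    return max(probs, key=probs.get) if probs else None

corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
print(predict_next(model, "the"))  # cat
print(predict_next(model, "sat"))  # on
```

Here "cat" follows "the" in two of six cases, so the model assigns it probability 1/3 and picks it. A model that only looks one word back cannot use wider context, which is the gap neural LLMs close.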

What distinguishes LLMs from traditional NLP models is their scale and generalization. They have billions of parameters and are trained with gradient-based optimization, using backpropagation to compute gradients and a carefully tuned learning rate to minimize loss. This scale allows them to perform zero-shot learning, generalizing to new tasks without task-specific examples, and makes them useful in domains where labeled data is limited, including applications such as automated machine learning (AutoML).
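In equation form, the standard next-token training objective is the average negative log-likelihood of each token x_t given the tokens before it, under model parameters θ. Backpropagation computes the gradient of this loss, and a plain gradient-descent step with learning rate η updates the parameters. This is the textbook formulation; real training runs typically use adaptive optimizers such as Adam together with learning-rate schedules rather than this basic update.

```latex
\mathcal{L}(\theta) = -\frac{1}{T} \sum_{t=1}^{T} \log p_\theta\left(x_t \mid x_1, \ldots, x_{t-1}\right)

\theta \leftarrow \theta - \eta \, \nabla_\theta \mathcal{L}(\theta)
```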

| Aspect | NLP (Natural Language Processing) | LLM (Large Language Model) |
|---|---|---|
| Scope and relationship | Broad AI field focused on processing and understanding human language using various techniques. | A specialized type of model developed within NLP, built to handle language at scale. |
| Foundation & techniques | Uses rule-based systems, statistical models, classical ML, and deep learning, depending on the task. | Built on the transformer architecture using deep learning and self-attention mechanisms. |
| Training data needs | Can work with smaller, domain-specific datasets. | Requires massive, diverse datasets to learn language patterns effectively. |
| Capabilities | Performs well on focused, rule-based or pattern-driven tasks like tagging or classification. | Excels at open-ended tasks like summarization, translation, and contextual content generation. |
| Learning style | Task-specific learning. Needs retraining to adapt to new problems or domains. | General-purpose learning. Adapts to new tasks via few-shot or zero-shot learning. |
| Output style | Generates structured or labeled outputs like keywords, categories, or summaries. | Produces fluent, human-like text across paragraphs or entire documents. |
| Interpretability | Easier to understand and debug due to simpler architecture and logic. | Harder to interpret due to complexity and the large number of parameters. |
| Resource usage | Lightweight, low-cost, and suitable for deployment on smaller systems. | Requires significant computing resources for both training and deployment. |
| Reliability | Stable for narrow use cases but limited by rules or lack of context. | Can generate factually incorrect or misleading outputs, often presented with high confidence. |
| Ethical considerations | Easier to monitor for bias due to smaller scope and controlled datasets. | Riskier in terms of bias, misinformation, and unintended use due to scale and data diversity. |
| Language support | Often language-specific; low-resource languages may need dedicated models. | Can support multiple languages natively due to multilingual training data. |

Limitations and Challenges of NLP and LLMs

Despite their significant progress, both NLP and LLMs face practical, technical, and ethical challenges. Some of these issues are shared between the two, while others are specific to their design and deployment.

Common Challenges in NLP and LLM

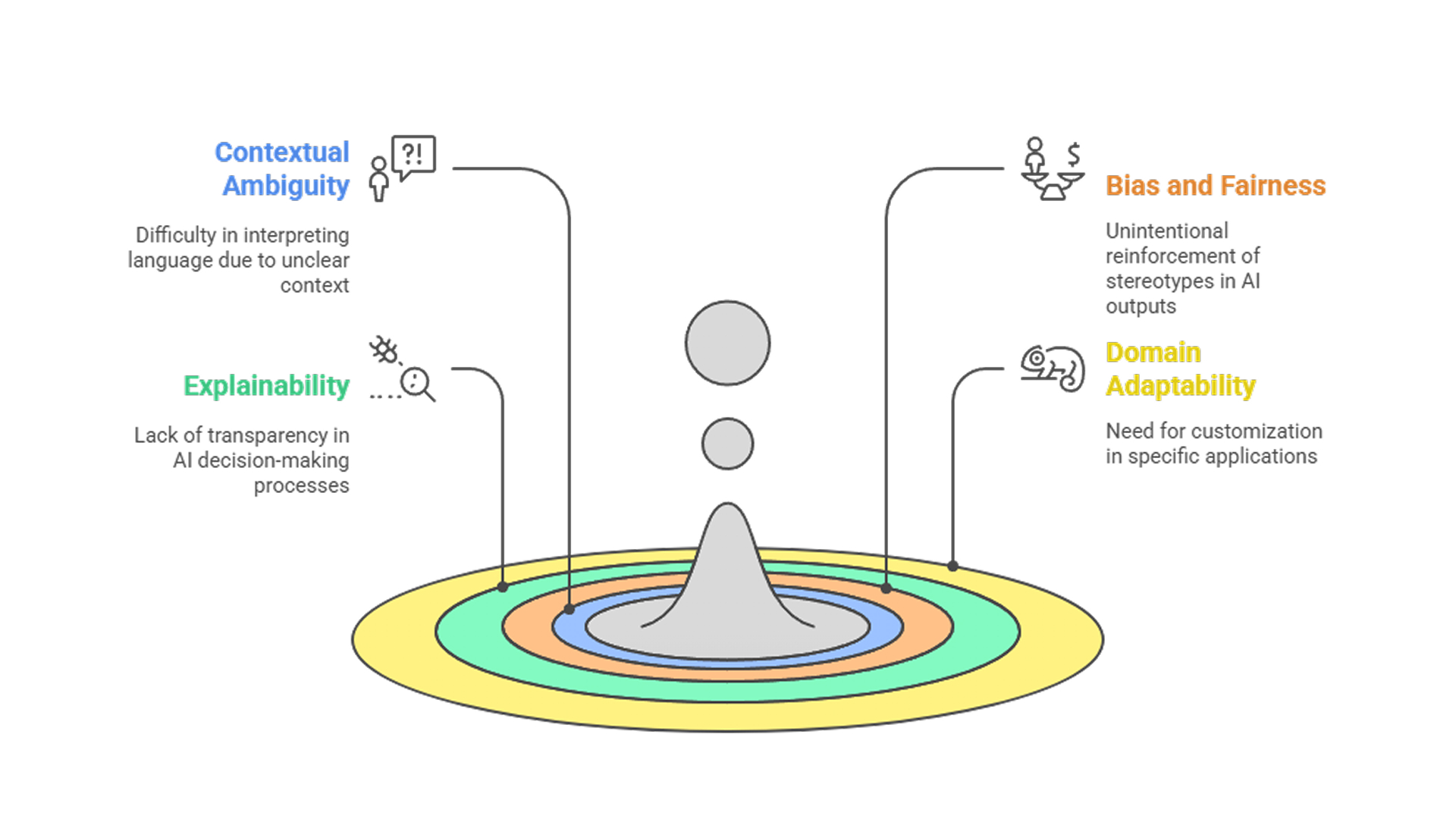

Contextual ambiguity

Both NLP systems and LLMs can misinterpret language when context is unclear or when multiple meanings are possible. Sarcasm, idioms, and informal phrasing remain difficult to consistently decode without additional cues.

Bias and fairness

Models trained on biased or unbalanced data can unintentionally reinforce stereotypes or produce misleading results. This is a shared challenge across both traditional NLP systems and large language models, making fairness and responsible training essential in any language-based AI application.

Explainability and transparency

Most large language models are difficult to interpret because they rely on deep learning architectures with billions of parameters that learn complex statistical patterns from data without using transparent, rule-based logic. As a result, it becomes challenging to understand or explain how a specific output was generated.

Domain adaptability

Language systems, whether traditional NLP or LLM-based, may require significant customization or fine-tuning to perform reliably in domain-specific applications such as legal contracts, scientific papers, or multilingual corpora.

Challenges of Natural Language Processing (NLP)

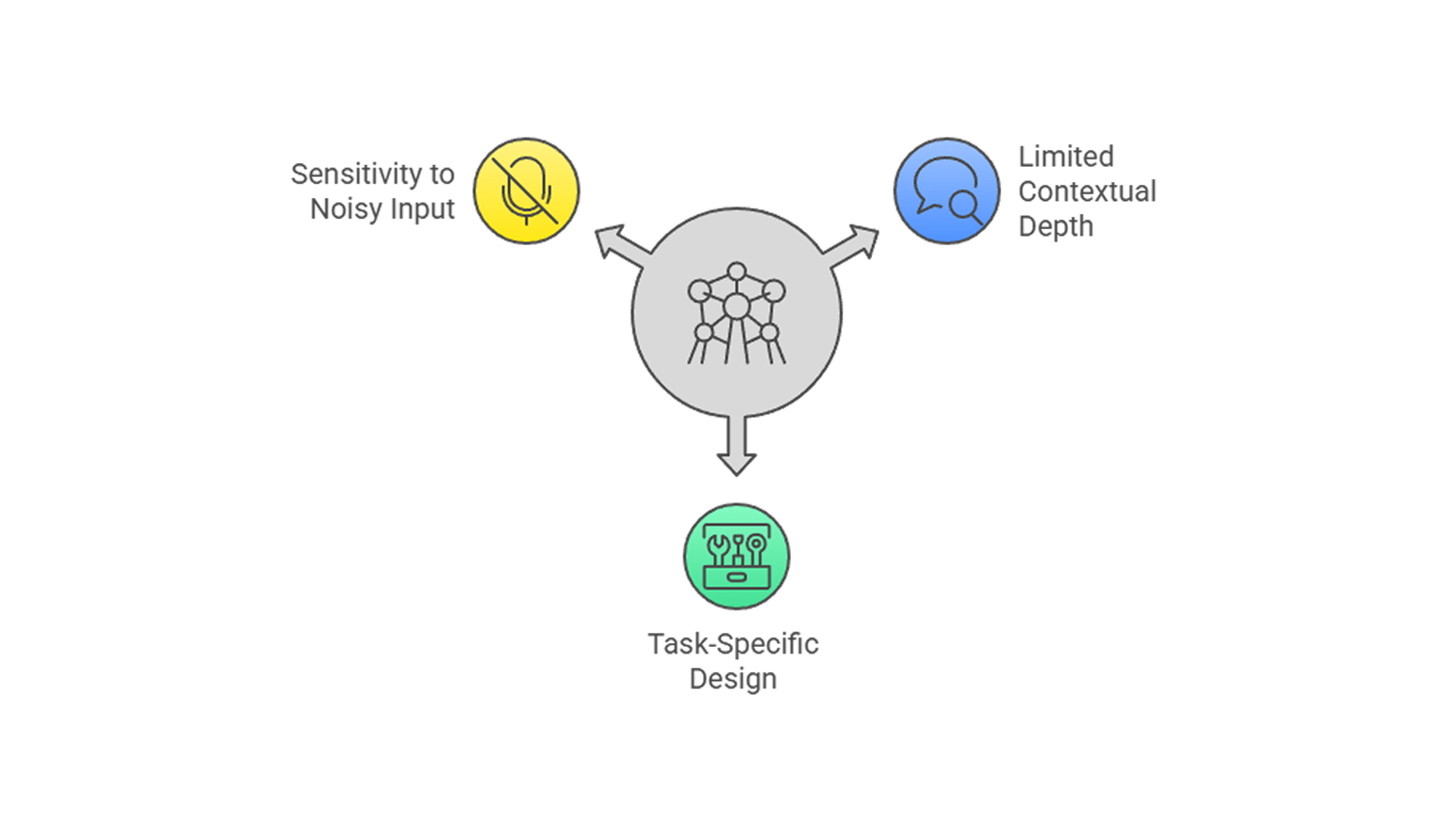

Limited contextual depth

Classical NLP systems often struggle to process long-range dependencies across paragraphs or documents. They excel in syntactic parsing or named entity recognition but lack the deep semantic understanding needed for more nuanced tasks.

Task-specific design

NLP models are typically designed for narrow functions such as classification, tagging, or extraction, and must be retrained or re-engineered for new use cases. This limits scalability and flexibility across workflows.

Sensitivity to noisy input

NLP systems are sensitive to spelling errors, informal grammar, or background noise (in speech-based systems). Misinterpretations are common when inputs deviate from expected formats.
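A small example shows why. An exact keyword rule misses a misspelled word entirely, while a common classical mitigation, matching within a small edit distance (Levenshtein distance), still catches it. The keyword list and the one-edit threshold below are hypothetical choices for illustration; real systems tune these and often pair them with dedicated spell-checkers.

```python
def levenshtein(a: str, b: str) -> int:
    """Fewest single-character insertions, deletions, or substitutions turning a into b."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute, free when characters match
            ))
        prev = curr
    return prev[-1]

# Hypothetical intent keywords for a customer-support classifier.
KEYWORDS = {"refund", "cancel", "invoice"}

def exact_match(text: str) -> set[str]:
    # Brittle rule: a keyword only counts if spelled exactly.
    return {w for w in text.lower().split() if w in KEYWORDS}

def fuzzy_match(text: str, max_edits: int = 1) -> set[str]:
    # Tolerant rule: accept words within `max_edits` edits of a keyword.
    words = text.lower().split()
    return {kw for kw in KEYWORDS for w in words if levenshtein(w, kw) <= max_edits}

message = "i need a refnd for order 42"
print(exact_match(message))  # set()
print(fuzzy_match(message))  # {'refund'}
```

The fix only goes so far: a threshold loose enough to catch more typos also starts matching unrelated short words, which is why noisy input remains a real weakness of rule-driven pipelines.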

Challenges of Large Language Models (LLMs)

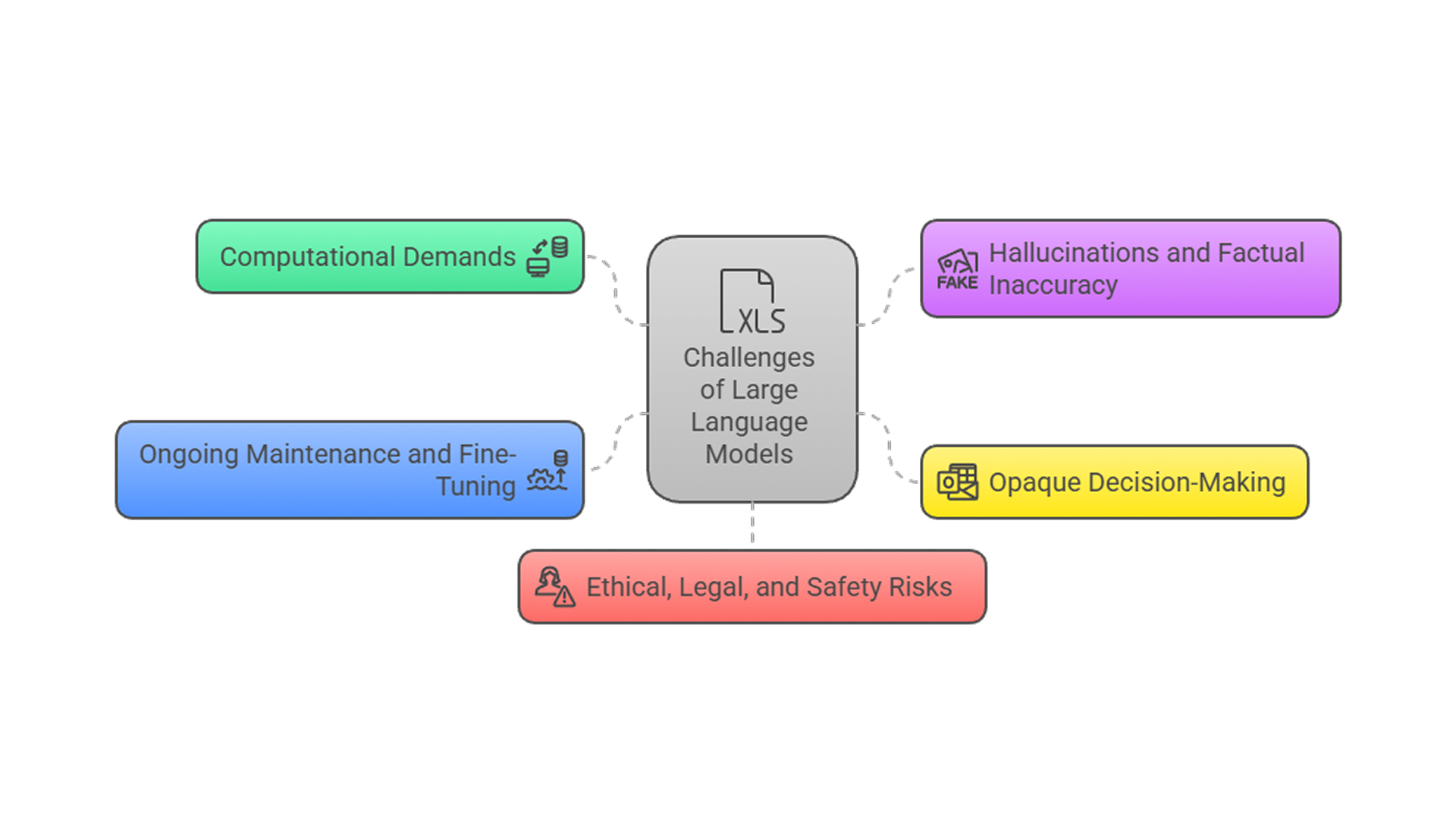

Computational demands

LLMs are resource-intensive to train and deploy. They require massive GPU/TPU infrastructure, high memory capacity, and continuous power, making them expensive and less accessible for smaller organizations.

Hallucinations and factual inaccuracy

LLMs can produce confident but factually incorrect outputs, a phenomenon known as hallucination. These errors can be subtle and difficult to detect, reducing reliability in critical use cases.

Opaque decision-making

Due to their scale and complexity, LLMs are difficult to interpret or audit. Understanding why a particular output was generated is often infeasible, which limits their use in environments requiring full transparency.

Ongoing maintenance and fine-tuning

Pretrained LLMs often need frequent updates or fine-tuning to stay relevant in dynamic domains. Without this, their performance can degrade or fail to reflect the latest developments or terminology.

Ethical, legal, and safety risks

LLMs inherit the biases of their training data, which can lead to discriminatory or harmful responses. They also pose broader risks such as content misuse, data leakage, or producing outputs that violate copyright or ethical boundaries.
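To put the computational demands of LLMs in perspective, a back-of-the-envelope calculation helps: just storing a model’s weights takes roughly the parameter count times the bytes per parameter. The sketch below also applies a commonly cited rule of thumb of about 16 bytes per parameter for mixed-precision training with the Adam optimizer (weights, gradients, and optimizer state, excluding activations). Treat both figures as rough lower bounds, not measurements; the 7-billion-parameter size is just an example.

```python
# Bytes needed to store one parameter in common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1, "int4": 0.5}

# Rule of thumb for mixed-precision training with Adam: fp16 weights (2) + fp16 gradients (2)
# + fp32 master weights, momentum, and variance (4 + 4 + 4) = 16 bytes per parameter.
TRAINING_BYTES_PER_PARAM = 16

def weights_gb(num_params: float, dtype: str = "fp16") -> float:
    """Approximate memory in GB (1 GB = 1e9 bytes) to hold the weights alone."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

def training_state_gb(num_params: float) -> float:
    """Approximate GB for weights, gradients, and Adam state; activations not included."""
    return num_params * TRAINING_BYTES_PER_PARAM / 1e9

params = 7e9  # example: a 7-billion-parameter model
print(weights_gb(params, "fp16"))  # 14.0
print(weights_gb(params, "int4"))  # 3.5
print(training_state_gb(params))   # 112.0
```

Even before counting activations or the memory used while generating text, a mid-sized model needs more memory than a typical consumer GPU offers, while a classical NLP model with a few million parameters fits comfortably on a laptop.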

Architectural Differences Between NLP and LLMs

| Aspect | NLP (Natural Language Processing) | LLM (Large Language Model) |
|---|---|---|
| Foundational architecture | Built on rule-based systems, statistical models, and compact neural networks. Still used for structured tasks like parsing, tagging, or classification. | Built entirely on deep learning, primarily using the Transformer architecture with self-attention to model language patterns at scale. |
| Context handling | Often processes inputs sequentially or in local windows. Long-range dependencies remain challenging for older architectures. | Designed to handle long contexts using attention mechanisms. Can capture non-linear and distant relationships across a document, up to the model’s context-window limit. |
| Model structure | Modular pipelines with distinct stages (e.g., preprocessing, tagging, and parsing handled separately). | Unified architecture with end-to-end processing, where all language understanding is embedded in a single model. |
| Data requirements | Can train on smaller or domain-specific labeled datasets. Compact models like BiLSTMs or quantized transformers are common. | Pretrained on massive corpora, but parameter-efficient techniques like LoRA and QLoRA allow effective fine-tuning on smaller, focused datasets, and retrieval-augmented generation (RAG) can supply domain data at inference time without retraining. |
| Text representation | Uses methods like TF-IDF, Bag of Words, and static word embeddings (e.g., Word2Vec, GloVe) for numerical modeling. | Learns contextual embeddings dynamically. No manual feature extraction is needed; word meanings are inferred from deep patterns in text. |
| Attention mechanism | Absent in traditional models; small-scale attention appears in some modern, lightweight neural setups. | Core component of transformer models. Self-attention allows the model to weigh all tokens in a sequence simultaneously for context awareness. |
| Positional understanding | Maintains word order using sequential models (like RNNs) or parse trees. Limited in handling positional variation. | Uses positional encodings (added to token embeddings or applied inside attention) to supply word order, since self-attention on its own is order-agnostic. |
| Model adaptability | Task-specific and less transferable. Requires retraining or manual engineering for new domains. | Highly adaptable using transfer learning, instruction tuning, and parameter-efficient fine-tuning. Strong few-shot and zero-shot capabilities. |
| Flexibility in application | Performs well in well-defined domains like search, POS tagging, or form extraction. Struggles with open-ended tasks. | Strong in general-purpose language understanding and generation. Supports a wide range of creative, analytical, and reasoning tasks. |
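The attention and positional-understanding rows of the LLM column can be sketched in a few lines of NumPy: a single head of scaled dot-product self-attention, softmax(Q K^T / sqrt(d_k)) V, applied to random token vectors with the original Transformer’s sinusoidal position signal added. The random weights and the sizes (4 tokens, 8 dimensions) are placeholders; real models add multiple heads, masking, residual connections, and trained parameters, so treat this only as a sketch of the mechanism.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row max before exponentiating for numerical stability.
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def sinusoidal_positions(seq_len: int, d_model: int) -> np.ndarray:
    """Fixed sine/cosine positional encodings: sin on even dimensions, cos on odd ones."""
    pos = np.arange(seq_len)[:, None]
    i = np.arange(d_model)[None, :]
    angles = pos / np.power(10000.0, (2 * (i // 2)) / d_model)
    return np.where(i % 2 == 0, np.sin(angles), np.cos(angles))

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a (seq_len, d_model) input."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    # Every token scores every other token at once; scaling keeps the softmax well-behaved.
    scores = q @ k.T / np.sqrt(k.shape[-1])
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
seq_len, d_model = 4, 8
# Placeholder token embeddings plus position information.
x = rng.normal(size=(seq_len, d_model)) + sinusoidal_positions(seq_len, d_model)
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))
out, weights = self_attention(x, w_q, w_k, w_v)
print(out.shape)  # (4, 8)
```

Each row of `weights` shows how much one token draws on every token in the sequence, which is what the table means by weighing all tokens simultaneously. Without the positional term, shuffling the input tokens would simply shuffle the outputs, so word order would be invisible to the model.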

Conclusion

Today, NLP and LLMs are no longer separate tools; they’re part of a single, unified language ecosystem. Modern large language models are built on the core principles of NLP, embedding everything from parsing and entity recognition to semantic understanding within a single architecture.

This ecosystem is behind many of the systems we interact with every day, from smarter search engines and voice assistants to real-time translation, content generation, and document processing. These capabilities are not just technical milestones; they’re practical tools being used across industries to automate workflows, extract insights, and build more intelligent digital experiences.

Businesses today are leveraging LLMs to build AI agents and chatbots that drive real results, handling customer support, generating content, analyzing documents, and improving workflows across industries like finance, healthcare, and education. These technologies are helping companies scale faster, boost revenue, and operate more efficiently.

In today’s competitive market, adopting AI tools is essential for building a successful, future-ready business. Thinkstack’s AI agent builder makes this easy by allowing you to build custom AI agents trained on your own data.

And that’s just the beginning: from automating workflows to enhancing customer experiences, there’s a lot more you can do.
