The Complete Guide to AI Agents in 2026

Preetam Das

November 04, 2025

It’s a busy Monday, and you’re staring at a mountain of tasks: reviewing shipping invoices, handling customer complaints, organizing CRM data, or just replying to emails.

What if you could simply ask for help and have AI take care of all the routine work automatically, freeing you to focus on the strategic tasks that actually need your expertise?

That’s what an AI agent can do.

It’s more than just generative AI that writes content or answers questions. AI agents can take action; they execute tasks toward a goal by interacting with tools, systems, and their environment.

What Are AI Agents?

At the most fundamental level, an AI agent is an autonomous software system that can observe its environment, reason about its objectives, and take actions to achieve a defined goal, without needing constant human instruction.

Unlike passive models or rigid automation scripts, AI agents can operate across multiple steps, adapt to changing conditions, and even correct their own mistakes.

What makes this possible is the system design that provides the agent with structure, memory, logic, and the ability to interact with its environment.

How System Design Creates AI Agents

The effectiveness of an AI agent hinges on its cognitive architecture, which includes three core components:

- Model: Often an LLM, this is the decision engine that reasons, plans, and chooses actions.

- Tools: APIs, extensions, data sources, or actuators the agent can use to gather information or affect the world.

- Orchestration layer: The logic that governs perception, memory, planning, execution, and self-correction.

Without this design, the agent can’t function autonomously. It would be limited to single-turn interactions, like a chatbot that forgets everything after one reply.
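The three components above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: `stub_model` stands in for a real LLM call, and `lookup_invoice`, `TOOLS`, and the `Agent` class are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from typing import Callable

# Tools: plain functions the agent may call (hypothetical example).
def lookup_invoice(invoice_id: str) -> str:
    return f"Invoice {invoice_id}: $120.00, unpaid"

TOOLS: dict[str, Callable[[str], str]] = {"lookup_invoice": lookup_invoice}

# Model: a stand-in for an LLM. It picks the next action from the goal
# and the observations so far. A real agent would call a model here.
def stub_model(goal: str, observations: list[str]) -> dict:
    if not observations:
        return {"action": "lookup_invoice", "input": "INV-42"}
    return {"action": "finish", "input": f"Done: {observations[-1]}"}

# Orchestration layer: loops over decide -> act -> remember until the
# model says it is finished or the step limit is reached.
@dataclass
class Agent:
    model: Callable[[str, list[str]], dict]
    tools: dict[str, Callable[[str], str]]
    max_steps: int = 5
    memory: list[str] = field(default_factory=list)

    def run(self, goal: str) -> str:
        for _ in range(self.max_steps):
            decision = self.model(goal, self.memory)
            if decision["action"] == "finish":
                return decision["input"]
            tool = self.tools[decision["action"]]
            self.memory.append(tool(decision["input"]))
        return "Stopped: step limit reached"

agent = Agent(model=stub_model, tools=TOOLS)
result = agent.run("Check the status of invoice INV-42")
print(result)  # Done: Invoice INV-42: $120.00, unpaid
```

Remove the loop and the memory list and what remains is a single model call, which is the single-turn chatbot behaviour described above.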

The Role of Large Language Models

Modern AI agents are almost always built around large language models (LLMs). These models provide the underlying reasoning engine, capable of natural language understanding, logic, and planning, enabling agents to comprehend goals, break down complex tasks, interpret data, and communicate effectively.

An LLM alone is not an AI agent. It simply responds to prompts, much like an AI chatbot. An AI agent is defined by its agent architecture, the full system of models, tools, memory, and orchestration that enables it to act, reason, and interact autonomously.

When a large language model is combined with tools, memory, and an orchestration layer, it becomes an LLM agent, a specific type of AI agent.

This design enables the LLM to operate autonomously, interact with external systems, manage context over time, and pursue complex goals through structured reasoning and action.

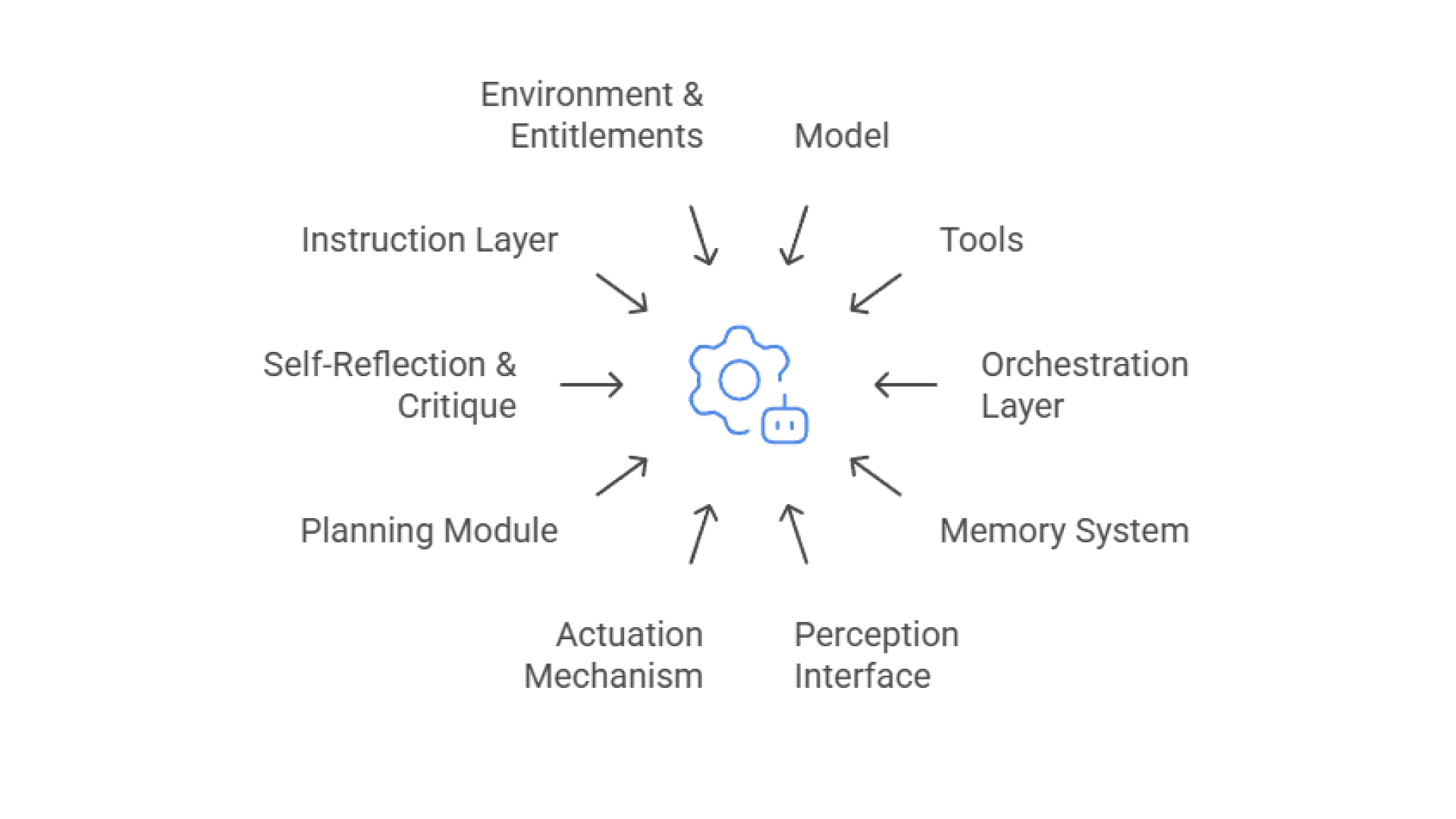

Components of an AI Agent

To operate effectively, AI agents need more than a model and tools. They rely on a set of integrated systems that manage planning, memory, perception, and execution. These components work together to ensure the agent can function reliably and adapt to real-world tasks.

- Model: The model, typically an LLM, serves as the agent’s reasoning core, enabling it to understand goals and generate logical next steps. On its own, it’s limited; paired with tools and orchestration, it becomes part of a system capable of intelligent action.

- Tools: Tools allow the agent to take action, calling APIs, retrieving data, or executing tasks. They extend the model’s capabilities, turning reasoning into real-world output. Different tool types serve different needs, from secure client-side functions to real-time API access.

- Orchestration layer: This governs how the agent sequences reasoning, tool use, and memory across steps. It maintains flow, context, and goal alignment, enabling dynamic, multi-step execution.

- Memory system: Memory allows the agent to retain and reuse context, from short-term interactions to long-term knowledge and preferences. It supports continuity, personalization, and learning over time.

- Perception interface: Perception involves how agents ingest data through user inputs, documents, APIs, or physical sensors. It forms the agent’s understanding of the environment and drives the next actions.

- Actuation mechanism: Actuators carry out the agent’s decisions, sending messages, modifying data, or controlling hardware. They bridge logic and execution.

- Planning module: This module breaks down goals into steps, prioritises actions, and adapts plans based on feedback. It allows agents to operate beyond predefined flows.

- Self-reflection & critique: Reflection mechanisms help agents evaluate their performance, identify errors, and improve output, sometimes autonomously, sometimes with human oversight.

- Instruction layer: Instructions define the agent’s role, behaviour, and scope. They include goals, tool access, formatting rules, and success criteria, serving as the agent’s operational baseline.

- Environment & entitlements: For agents to work effectively, they need secure access to tools, data sources, and operational systems. Entitlements manage secure, scoped permissions within those environments.
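To make the entitlements idea concrete, here is a rough sketch of a scoped permission check that runs before any tool call. The scope strings (`crm:read`, `crm:write`), the `ScopedTool` class, and the `call_tool` helper are assumptions made for illustration; real platforms define their own permission models.

```python
from dataclasses import dataclass
from typing import Callable

class EntitlementError(PermissionError):
    """Raised when an agent calls a tool outside its granted scopes."""

@dataclass(frozen=True)
class ScopedTool:
    name: str
    required_scope: str  # hypothetical scope string, e.g. "crm:read"
    func: Callable[[str], str]

def call_tool(tool: ScopedTool, arg: str, granted_scopes: frozenset[str]) -> str:
    # Check the entitlement before the tool runs, not after.
    if tool.required_scope not in granted_scopes:
        raise EntitlementError(f"{tool.name} requires scope {tool.required_scope!r}")
    return tool.func(arg)

read_contact = ScopedTool("read_contact", "crm:read", lambda cid: f"contact {cid}")
delete_contact = ScopedTool("delete_contact", "crm:write", lambda cid: f"deleted {cid}")

# A support agent that may read CRM records but not modify them.
support_agent_scopes = frozenset({"crm:read"})

print(call_tool(read_contact, "C-7", support_agent_scopes))
try:
    call_tool(delete_contact, "C-7", support_agent_scopes)
except EntitlementError as err:
    print(f"blocked: {err}")
```

Keeping the check in one place means the model can propose any action it likes, but only the actions its scopes allow ever reach a real system.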

Types of AI Agents

| Agent Type | Description |
|---|---|
| Simple reflex agents | React to current input using fixed condition–action rules. Operate without memory or awareness of past events. Best suited for predictable, fully observable environments where tasks are repetitive and logic is straightforward. |
| Model-based reflex agents | Extend reflex logic with a memory of past inputs, allowing decisions to factor in limited context. Maintain an internal state that updates based on perception, enabling them to function in partially observable or slightly dynamic environments. |
| Goal-based agents | Make decisions by evaluating possible actions against a desired outcome. Require mechanisms for goal definition, state evaluation, and planning. Offer more flexible behaviour but depend heavily on clear goal structures and success criteria. |
| Utility-based agents | Choose actions based on calculated utility, selecting the outcome with the highest perceived value. Introduce optimization and trade-off handling using a utility function. Require more data and logic but allow for nuanced, value-driven decisions. |
| Learning agents | Improve performance over time through experience, using feedback from their environment or outcomes to refine their actions. Capable of adapting to evolving environments, optimising performance, and handling scenarios that are too complex to script manually. |
| Autonomous agents | Operate independently in real-time environments. Continuously sense inputs, make decisions, and execute actions without ongoing human input. Key characteristics include reacting in real time, operating with autonomy, and adapting to changing or uncertain inputs. |
| Multi-agent systems (MAS) | Several agents coordinate, either independently or collaboratively, to achieve different or common objectives. Scalable and modular, though more complex in orchestration and system design. |
| Hybrid agents | Combine multiple agent architectures into one integrated system. Capable of reactive behaviour, strategic planning, context awareness, and learning. Designed for versatility and robustness across complex, multi-layered tasks and environments. |
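The difference between the first few rows of the table is easier to see side by side. The sketch below uses a toy thermostat; the thresholds, the action names, and the utility function are all made up for illustration and are not taken from any particular framework.

```python
from typing import Optional

ACTIONS = ("heat_on", "cool_on", "idle")

def simple_reflex(temp_c: float) -> str:
    """Fixed condition-action rules; no memory of earlier readings."""
    if temp_c < 18:
        return "heat_on"
    if temp_c > 24:
        return "cool_on"
    return "idle"

class ModelBasedReflex:
    """Keeps internal state (the last reading) so it can react to a trend."""

    def __init__(self) -> None:
        self.last: Optional[float] = None

    def act(self, temp_c: float) -> str:
        falling = self.last is not None and temp_c < self.last
        self.last = temp_c
        # Heat slightly earlier when the temperature is already dropping.
        if temp_c < 18 or (temp_c < 19 and falling):
            return "heat_on"
        if temp_c > 24:
            return "cool_on"
        return "idle"

def utility(temp_c: float, action: str, target: float = 21.0) -> float:
    """Comfort (closeness to target) minus an assumed energy cost."""
    effect = {"heat_on": 2.0, "cool_on": -2.0, "idle": 0.0}[action]
    cost = 0.0 if action == "idle" else 0.5
    return -abs((temp_c + effect) - target) - cost

def utility_based(temp_c: float) -> str:
    """Pick the action with the highest utility."""
    return max(ACTIONS, key=lambda a: utility(temp_c, a))

stateful = ModelBasedReflex()
print(simple_reflex(18.5))                      # idle
print(stateful.act(20.0), stateful.act(18.5))   # idle heat_on
print(utility_based(17.0), utility_based(22.0)) # heat_on idle
```

At 18.5 °C the simple reflex agent does nothing, the model-based agent starts heating because it remembers the temperature was higher a moment ago, and the utility-based agent weighs comfort against energy cost instead of following a fixed rule.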

Read the detailed guide here: 8 Types of AI Agents (Comparison Guide + No-Code AI Solution)

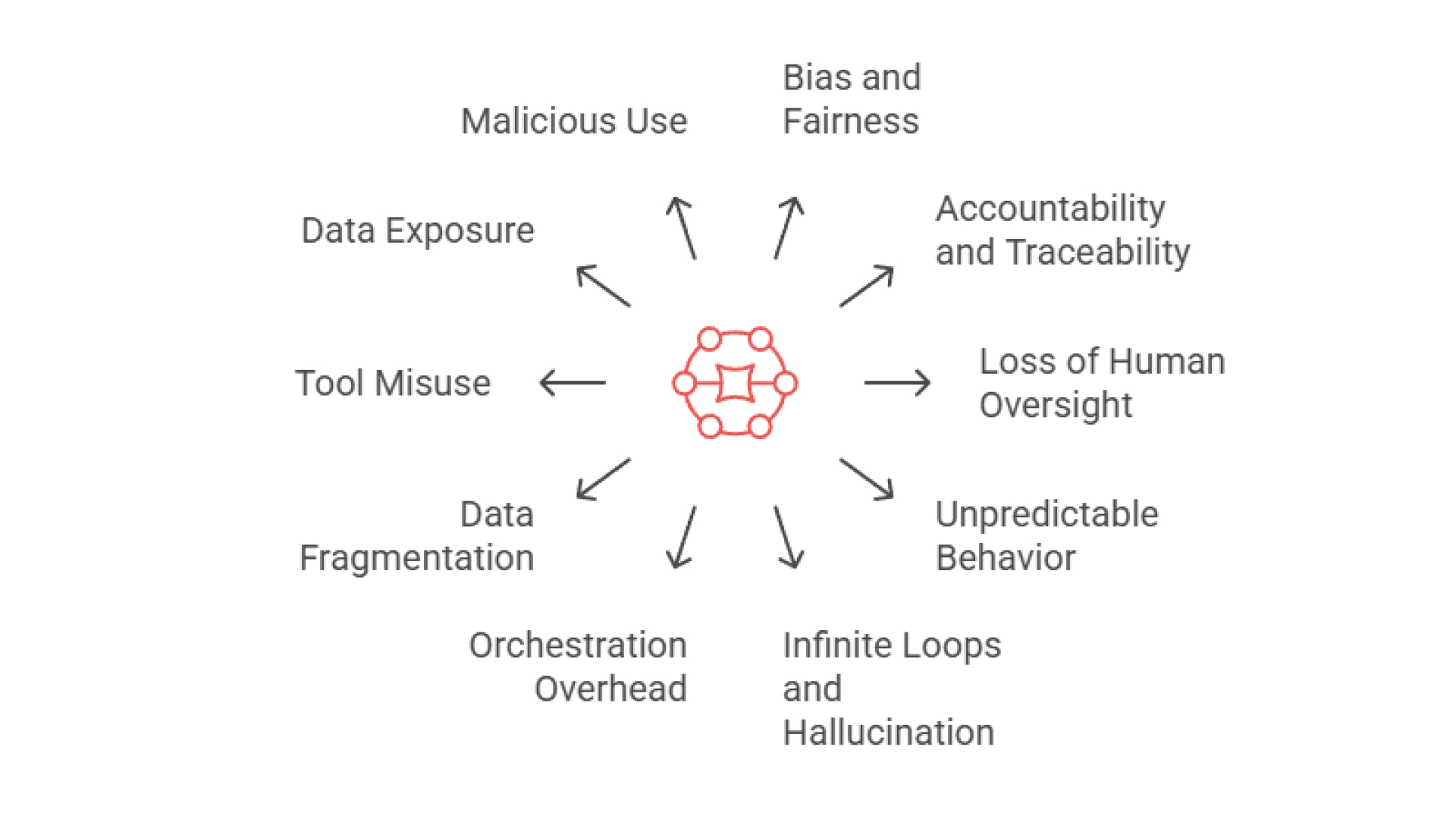

Risks and Challenges of AI Agents

- Bias and fairness: Even with improvements in data auditing and bias mitigation techniques, AI agents can still reflect underlying biases from training data or misaligned objectives, particularly in high-impact areas like customer experience and recruitment.

- Accountability and traceability: As organizations adopt more autonomous AI agents, establishing clear accountability and maintaining auditable decision trails is essential to ensure transparency, trust, and compliance at scale.

- Loss of human oversight: As businesses scale with low-code and no-code AI agent builders, some systems operate without adequate HITL (Human-in-the-Loop) checks, posing risks in decision-critical flows.

- Unpredictable behaviour in complex workflows: Despite improvements in reasoning frameworks such as hierarchical planning and auto-chaining, AI agents can still produce unreliable outcomes in unstructured or loosely bounded environments, where clear guardrails are absent.

- Infinite loops and hallucination chains: Agents still frequently fall into retry loops or hallucinate reasoning paths, especially in multi-agent or tool-augmented settings where reasoning steps aren’t fully grounded.

- Orchestration overhead and complexity: Modern orchestration layers introduce significant operational complexity. Managing agent coordination, shared memory, and branching tasks often requires more engineering effort than anticipated, particularly as system scale grows.

- Data fragmentation and access silos: Many agent systems still operate without seamless integration into enterprise data ecosystems. In the absence of unified data pipelines, retrieval-augmented generation (RAG), or real-time access, agent outputs remain limited in accuracy and contextual alignment.

- Tool misuse and function miscalls: While toolformer-like self-supervised fine-tuning is emerging, most agents still rely on fragile prompt-based tool calls, which can break or behave incorrectly with API changes.

- Data exposure through agents: Agents that execute code, retrieve data, or interact with internal systems introduce risk vectors, especially when permissions, memory policies, or output scopes are not tightly controlled. Improper setup can result in data leaks or compliance violations.

- Malicious use of autonomous agents: The expansion of open-source frameworks makes it easier than ever to deploy autonomous agents at scale. Without proper traceability or access governance, these systems could be misused for spam, scraping, or unauthorized automation.
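Two of the risks above, retry loops and tool miscalls, can be reduced with small guards in the orchestration layer. The following is one possible sketch, assuming tool arguments arrive as a flat dictionary of hashable values; `LoopGuard`, `GuardTripped`, and `validate_args` are hypothetical names, not part of any library.

```python
from collections import Counter

class GuardTripped(RuntimeError):
    """Raised when the agent should stop and hand control back."""

class LoopGuard:
    """Stops runs that exceed a step budget or repeat the same call."""

    def __init__(self, max_steps: int = 10, max_repeats: int = 2) -> None:
        self.max_steps = max_steps
        self.max_repeats = max_repeats
        self.steps = 0
        self.seen: Counter = Counter()

    def check(self, tool_name: str, args: dict) -> None:
        self.steps += 1
        if self.steps > self.max_steps:
            raise GuardTripped("step budget exhausted")
        # Identical tool + arguments seen too often suggests a retry loop.
        key = (tool_name, tuple(sorted(args.items())))
        self.seen[key] += 1
        if self.seen[key] > self.max_repeats:
            raise GuardTripped(f"repeated identical call to {tool_name}")

def validate_args(args: dict, schema: dict[str, type]) -> list[str]:
    """Return a list of problems with a proposed tool call (empty if OK)."""
    errors = []
    for name, expected in schema.items():
        if name not in args:
            errors.append(f"missing: {name}")
        elif not isinstance(args[name], expected):
            errors.append(f"wrong type: {name}")
    for name in args:
        if name not in schema:
            errors.append(f"unexpected: {name}")
    return errors

schema = {"order_id": str, "limit": int}
print(validate_args({"order_id": "A1", "limit": "5"}, schema))

guard = LoopGuard(max_repeats=2)
try:
    for _ in range(5):
        guard.check("get_order", {"order_id": "A1"})
except GuardTripped as err:
    print(f"stopped: {err}")
```

Guards like these do not make the model's reasoning more reliable. They only bound the damage, so a tripped guard is a natural point to route the task to a human reviewer.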

Conclusion

AI agents are no longer experimental. They’re already changing how businesses operate, automating support, improving decision-making, and streamlining day-to-day tasks.

They bring faster execution, lower costs, better decisions, and systems that scale across teams. As the underlying models and orchestration frameworks improve, agents are becoming more capable, moving from single assistants to coordinated, multi-agent systems designed for real work.

AI agents are now part of how companies manage customer experience, internal workflows, security, and even software development.

Businesses must care about agentic AI, not as a trend, but as a core part of staying competitive. It’s quickly becoming the new normal for how work gets done.

And you don’t need an engineering team to get started.

With Thinkstack, you can build your own AI agent trained on your data, connected to your tools, and ready to handle real work.

Whether it’s an internal AI agent or a sales agent, you can launch it without writing a single line of code.
