What Is an Attention Mechanism?

Team Thinkstack

May 09, 2025


An attention mechanism is a core technique in machine learning that lets a model prioritize and focus on the most relevant parts of its input instead of treating all parts equally.

Traditional sequence-to-sequence models built on Recurrent Neural Networks (RNNs), including LSTMs, struggled with long sequences because their encoder had to compress the entire input into a single fixed-size vector. Key details, especially from early in the input, were often lost in that compression. This is a serious problem in tasks like translation, where meaning depends on long-range context.

Attention solved this by removing the need to squeeze everything into one compressed vector. Instead, the model can look back over the full input and decide what matters most at each step. It computes a relevance weight for every part of the input by comparing that part to the current context, then forms a weighted combination of the input based on those weights. This helps with long sequences. In architectures that drop recurrence entirely, such as transformers, it also lets training run in parallel across positions. The weights can also give some insight into what the model is focusing on.

Today, attention mechanisms power many top-performing AI systems, from translation and summarization to vision and speech, and are a foundational part of architectures like transformers.

How the Attention Mechanism Works

At a technical level, attention works by turning the idea of “focus” into math. It allows models to calculate, at every step, which parts of the input deserve more focus and by how much. Instead of relying on a single compressed summary of the input, attention creates a dynamic, on-the-fly summary based on what the model currently needs. This allows the model to zero in on specific words, tokens, or image patches, whichever parts are most relevant, without losing sight of the broader context.

The QKV framework

Most attention mechanisms follow a structure called the Query-Key-Value (QKV) framework:

- Query (Q): What the model is currently looking for or focusing on.

- Key (K): Acts like an identifier for each part of the input, used to compare against the query.

- Value (V): The actual content or data connected to each key.

The model compares the query with each key to compute a score for how well that part of the input matches what it is currently looking for. A softmax function then converts these scores into attention weights. The weights are non-negative, sum to 1, and represent how much focus each part of the input should receive. A higher weight means the model draws more heavily on that part's value when building its output.
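The scoring and weighting described above can be written compactly for a query $q$, keys $k_1, \dots, k_n$, and values $v_1, \dots, v_n$. Here $\alpha_i$ is the attention weight and $c$ is the resulting context vector. Dividing the dot product by $\sqrt{d_k}$, where $d_k$ is the key dimension, is an assumption. It is the scaled dot-product form used in transformers, but other similarity functions are also used. The second line shows the same computation applied to a whole matrix of queries at once.

```latex
s_i = \frac{q \cdot k_i}{\sqrt{d_k}}, \qquad
\alpha_i = \frac{\exp(s_i)}{\sum_{j=1}^{n} \exp(s_j)}, \qquad
c = \sum_{i=1}^{n} \alpha_i \, v_i

\operatorname{Attention}(Q, K, V) = \operatorname{softmax}\!\left(\frac{Q K^\top}{\sqrt{d_k}}\right) V
```

As a quick check of the softmax step, scores of (2, 1, 0) become weights of about (0.665, 0.245, 0.090). These sum to 1 and put most of the focus on the first input.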

Step-by-Step Breakdown

- Input encoding: The process begins by turning raw input into numeric vectors that the model can understand and process.

- Q, K, V generation: Each input vector is mapped to a query, a key, and a value vector through learned transformations, typically linear projections.

- Scoring: The model checks how well each key matches the query using similarity calculations like a dot product.

- Weighting: These match scores are converted into probabilities using a Softmax function, so the model knows how much importance to give each input.

- Weighted sum: The weights are used to compute a weighted average of the value vectors, producing what's known as the context vector.

- Integration: The context vector is passed to the next layer or used for generating output.
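The six steps above can be traced in a minimal pure-Python sketch for a single query. Several parts are assumptions for illustration. The projection matrices `w_q`, `w_k`, `w_v` are hypothetical stand-ins for learned weights, and here they are simply identity matrices. The input vectors are toy values. The scoring uses the scaled dot product. The helper names (`softmax`, `matvec`, `dot`, `attend`) are hypothetical and do not come from any library.

```python
import math


def softmax(scores):
    """Convert raw scores into non-negative weights that sum to 1."""
    m = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend(query_input, inputs, w_q, w_k, w_v):
    # Step 2: Q, K, V generation via (hypothetical) learned projection matrices.
    q = matvec(w_q, query_input)
    keys = [matvec(w_k, x) for x in inputs]
    values = [matvec(w_v, x) for x in inputs]
    # Step 3: scoring, here a dot product scaled by sqrt(d_k).
    d_k = len(q)
    scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
    # Step 4: weighting with softmax.
    weights = softmax(scores)
    # Step 5: weighted sum of the value vectors gives the context vector.
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    # Step 6 (integration) is up to the caller, e.g. feeding `context` onward.
    return weights, context


# Step 1 (input encoding) is assumed done: three toy 2-dimensional vectors.
inputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned projection weights
weights, context = attend(inputs[0], inputs, identity, identity, identity)
```

With identity projections, the first and third inputs match the query equally well, so they receive equal weights. The second input receives less. The weights always sum to 1.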

Types of Attention Mechanisms

- Soft attention: Assigns every part of the input a weight between 0 and 1, producing a smooth, differentiable weighting that can be trained with standard backpropagation.

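- Hard attention: Selects one specific part of the input to focus on, rather than spreading attention across all parts. Because this selection is discrete and not differentiable, hard attention usually needs sampling-based training methods instead of plain backpropagation.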

- Global attention: Considers the complete input sequence to determine which parts deserve the most focus.

- Local attention: Looks only at a limited section of the input near the current point. This makes it more efficient for longer sequences but can sometimes miss important distant connections.

- Self-attention: Queries, keys, and values all come from the same sequence, so each element attends to every element, including itself. This lets the model capture how different parts of a single sequence relate to each other.

- Cross-attention: Queries come from one sequence, while keys and values come from another. A typical example is a translation model generating text in one language while attending to the source sentence in another.

- Multi-head attention: Runs several attention heads in parallel. Each head has its own learned query, key, and value projections, so it can learn to focus on different relationships. The heads' outputs are then combined, giving the model a richer, multi-angle view of the input.
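The contrast between soft, hard, and local attention can be made concrete by applying each one to the same list of scores. This is a minimal sketch under simplifying assumptions. The scores are made-up numbers. Hard attention is shown as a deterministic argmax, although real hard attention usually samples a position. The local window is a hypothetical fixed-size window around a chosen center. Soft weights computed over the full sequence are also an example of global attention.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def soft_weights(scores):
    """Soft (and global) attention: every position gets a non-zero weight."""
    return softmax(scores)


def hard_weights(scores):
    """Hard attention, simplified to argmax: all weight on one position.
    Real hard attention usually samples a position instead."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return [1.0 if i == best else 0.0 for i in range(len(scores))]


def local_weights(scores, center, window):
    """Local attention: softmax only over positions within `window` of
    `center`; every position outside the window gets weight 0."""
    lo = max(0, center - window)
    hi = min(len(scores), center + window + 1)
    weights = [0.0] * len(scores)
    weights[lo:hi] = softmax(scores[lo:hi])
    return weights


scores = [0.5, 2.0, 1.0, 3.0, 0.1]  # hypothetical query-key scores
soft = soft_weights(scores)
hard = hard_weights(scores)  # [0.0, 0.0, 0.0, 1.0, 0.0]
local = local_weights(scores, center=1, window=1)
```

With `center=1` and `window=1`, the local variant gives zero weight to position 3 even though it has the highest score. This illustrates the trade-off mentioned in the list above: local attention is cheaper, but it can miss distant connections.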

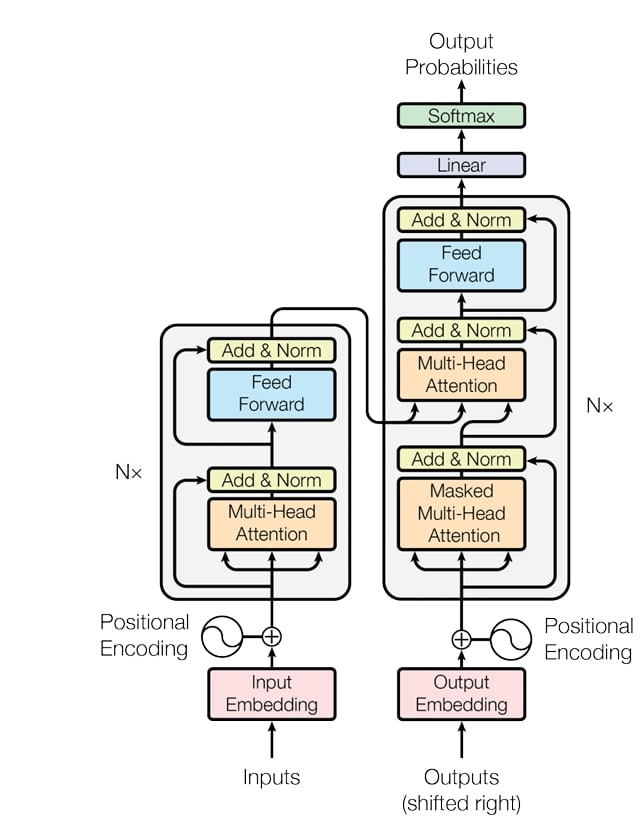

In Modern Architectures

Modern deep learning architectures like transformers are built around attention. Instead of processing a sequence step by step with recurrence, they use attention to relate every position in the input to every other. A key component is self-attention, where each part of the input sequence compares itself with every other part to capture relationships, such as how words in a sentence depend on each other for meaning.
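Self-attention and cross-attention run the same computation. The only difference is where the queries, keys, and values come from. The minimal pure-Python sketch below makes a few simplifying assumptions. It omits the learned Q/K/V projections, so the token vectors are used directly. The toy vectors are hypothetical. The function name `attention` is illustrative and not a library API.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention for a whole sequence of queries.
    Returns (weight rows, context vectors), one entry per query."""
    d_k = len(keys[0])
    all_weights, outputs = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        all_weights.append(w)
        dim = len(values[0])
        outputs.append([sum(wi * v[j] for wi, v in zip(w, values)) for j in range(dim)])
    return all_weights, outputs


# Toy "sentence" of three token vectors (hypothetical values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Self-attention: queries, keys, and values all come from the same sequence,
# so the weight matrix is 3 x 3 (every token against every token).
self_w, self_out = attention(tokens, tokens, tokens)

# Cross-attention: queries from `tokens`, keys and values from another
# sequence, so each of the 3 queries gets a weight for each of 2 positions.
other = [[0.5, 0.5], [2.0, 0.0]]
cross_w, cross_out = attention(tokens, other, other)
```

Every row of weights sums to 1. In self-attention the weight matrix is square, because each token attends to every token in its own sequence. In cross-attention it has one column per position of the other sequence.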

Different transformer-based models use attention in tailored ways:

- BERT uses the encoder part of the transformer and applies unmasked self-attention, so every token can see the entire input at once, both to its left and to its right. This makes it a strong fit for tasks such as text classification, sentiment detection, and context-based question answering.

- GPT uses the decoder with masked (causal) self-attention, so each token can attend only to earlier tokens. This lets it generate text one token at a time, which suits writing, dialogue, and other language-generation tasks.
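The difference between BERT-style unmasked attention and GPT-style masked attention comes down to a mask applied before the softmax. This is a minimal sketch under stated assumptions. The token vectors are hypothetical. Learned projections are omitted. Masked positions are set to zero weight directly. Practical implementations usually add negative infinity to masked scores before the softmax, which has the same effect.

```python
import math


def masked_softmax(scores, mask):
    """Softmax over positions where mask is True; masked positions get 0."""
    m = max(s for s, ok in zip(scores, mask) if ok)
    exps = [math.exp(s - m) if ok else 0.0 for s, ok in zip(scores, mask)]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention_weights(tokens, causal):
    """Self-attention weight matrix. causal=True hides future positions
    (GPT-style); causal=False lets every token see every token (BERT-style)."""
    n, d_k = len(tokens), len(tokens[0])
    rows = []
    for i, q in enumerate(tokens):
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in tokens]
        # Token i may attend to position j only if j <= i when masking is on.
        mask = [(j <= i) if causal else True for j in range(n)]
        rows.append(masked_softmax(scores, mask))
    return rows


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]  # hypothetical
bert_style = self_attention_weights(tokens, causal=False)
gpt_style = self_attention_weights(tokens, causal=True)
```

In `gpt_style`, the first token can only attend to itself, so its row is `[1.0, 0.0, 0.0, 0.0]`. Every entry above the diagonal is zero. In `bert_style`, every entry is positive.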

- ViT (Vision Transformer) applies the same attention mechanism to vision tasks by treating an image as a sequence of patches and learning how different parts of the image relate.
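ViT's first step, turning an image into a sequence that attention can process, can be sketched in pure Python. This sketch assumes a single-channel image stored as a list of rows, whose height and width are divisible by the patch size. The 4x4 image and the function name `image_to_patches` are hypothetical. In ViT itself, each flattened patch is then linearly projected to an embedding, and position embeddings are added before the sequence enters self-attention. Those steps are not shown here.

```python
def image_to_patches(image, patch_size):
    """Split a 2D single-channel image (list of rows) into non-overlapping
    patch_size x patch_size patches, each flattened row by row, and return
    them in left-to-right, top-to-bottom order as a sequence."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches


# Hypothetical 4x4 image whose pixel values are 0..15 in row-major order.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
patches = image_to_patches(image, patch_size=2)
# 4 patches; the first is [0, 1, 4, 5] (the top-left 2x2 block).
```

Each patch plays the role a word token plays in text, so the same self-attention computation can learn how regions of the image relate to one another.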

Conclusion

The attention mechanism has changed how models understand and work with data. It made it possible to handle long sequences better, adapt to different tasks, and process language and images more effectively. From translation and summarization to vision and speech, it powers many of the systems we use today. But it isn't perfect. Standard self-attention compares every position with every other, so its cost grows quadratically with input length, which makes very long inputs expensive. Understanding what the model focuses on also isn't always straightforward. Still, attention plays a key role in how deep learning continues to grow and improve across different fields.