What are Autoencoders?

Team Thinkstack

May 09, 2025

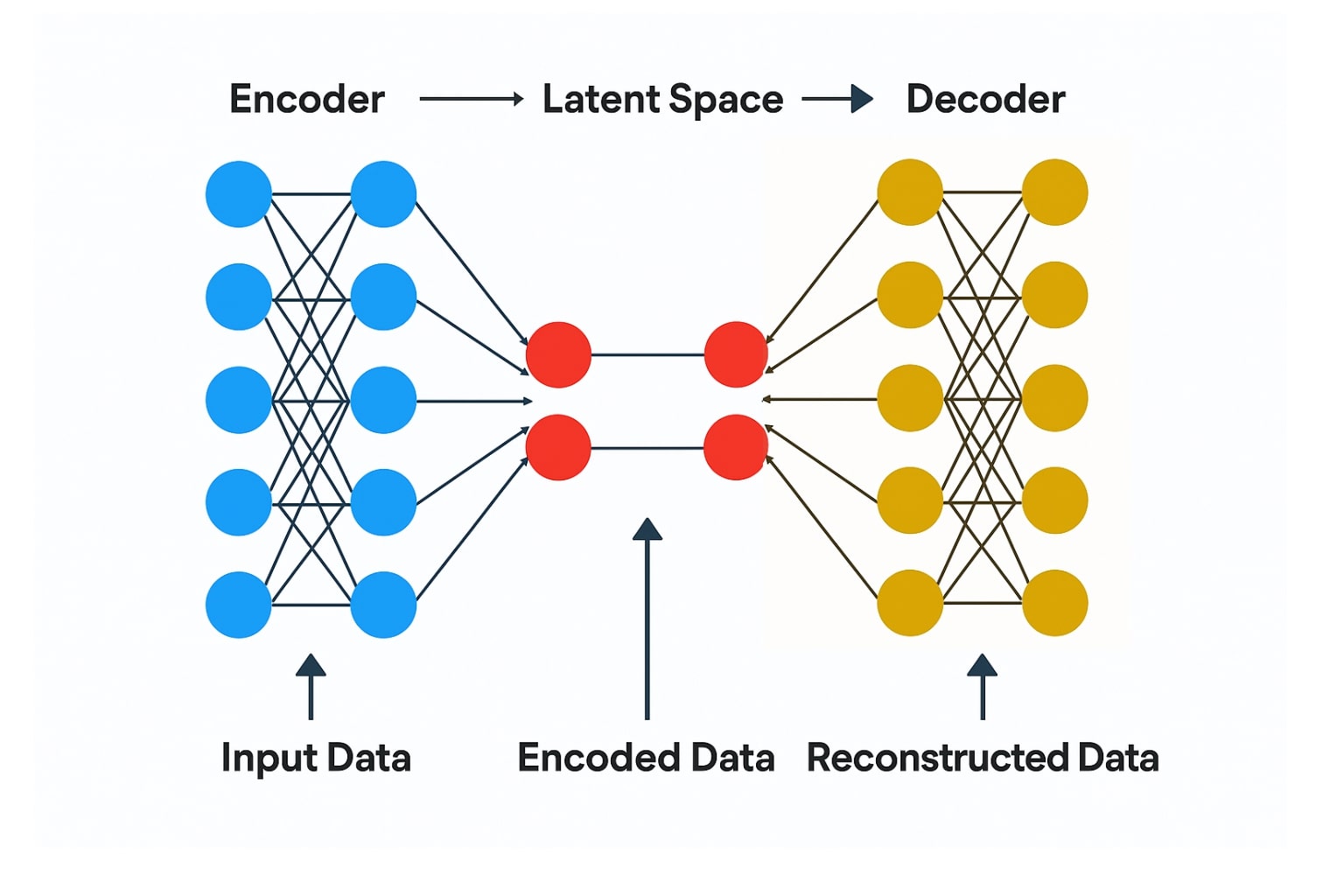

Autoencoders are a foundational concept in machine learning, designed to take in data, compress it, and then rebuild it as close to the original as possible. Unlike supervised models, which require labeled data, autoencoders are trained using self-supervision: the input itself acts as the target. This makes them well suited to learning from large amounts of unlabeled data, which is often cheaper and easier to get.

An autoencoder works through three main components:

- Encoder: Takes the input and compresses it into a smaller, lower-dimensional version called a latent representation.

- Bottleneck layer: Holds that compressed code. Because it is narrower than the input, it keeps only the most important features and discards redundant or less important details.

- Decoder: Reconstructs the original data from this compressed form.

The entire network is trained to minimize the difference between the original input and the reconstructed output, which is called reconstruction loss. The latent representation, also called an embedding, captures the core structure or meaning of the input data.
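The flow from input to latent code to reconstruction can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the layer sizes, the `tanh` activation, the random untrained weights, and the helper names `encode`, `decode`, and `reconstruction_loss` are all assumptions made for the example, and mean squared error is only one common choice of reconstruction loss.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes chosen for illustration: 8 input features, 3 latent features.
input_dim, latent_dim = 8, 3

# Randomly initialised weights; a real model would learn these during training.
W_enc = rng.normal(scale=0.1, size=(input_dim, latent_dim))
b_enc = np.zeros(latent_dim)
W_dec = rng.normal(scale=0.1, size=(latent_dim, input_dim))
b_dec = np.zeros(input_dim)

def encode(x):
    """Encoder: map inputs to the lower-dimensional latent representation."""
    return np.tanh(x @ W_enc + b_enc)

def decode(z):
    """Decoder: map latent codes back to the input space."""
    return z @ W_dec + b_dec

def reconstruction_loss(x, x_hat):
    """Mean squared error between the input and its reconstruction."""
    return float(np.mean((x - x_hat) ** 2))

x = rng.normal(size=(5, input_dim))   # a batch of 5 examples
z = encode(x)                         # bottleneck code, shape (5, 3)
x_hat = decode(z)                     # reconstruction, shape (5, 8)
loss = reconstruction_loss(x, x_hat)  # the quantity training would minimize

print(z.shape, x_hat.shape)
```

Note that the input `x` is used both as the model input and as the target in the loss, which is what makes the setup self-supervised.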

Autoencoders have been widely adopted in areas like image processing, anomaly detection, and representation learning because they distill complex data into compact, meaningful forms, keeping the most relevant aspects of the input while filtering out noise and redundancy.

How Autoencoders Work

We have already covered how autoencoders work and their core components: the encoder, bottleneck, and decoder. Here’s a closer look at how the process flows from input to output.

The encoder applies a series of transformations, layer by layer. These layers learn to spot patterns in the data, and each one reduces complexity, pushing the data toward something more compact but still meaningful.

At the center is the bottleneck, where everything gets squeezed into a compact latent code that keeps just enough information to rebuild the input without memorizing it.

The decoder re-expands the data through its own layers, working back toward something close to the original input. It often mirrors the encoder’s layer sizes in reverse order, but it has its own weights and is not an exact inverse of the encoder; it follows the patterns the model picked up during training.

The whole system learns through reconstruction loss. During training, the model measures how close the output is to the input to see how well it’s doing. Using backpropagation and gradient descent, it updates its internal weights to improve. The encoder gets better at capturing the core information, while the decoder improves at rebuilding it closer to the original.
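As a rough sketch of that training loop, the example below trains a purely linear autoencoder with hand-written backpropagation and plain gradient descent. The function name `train_linear_autoencoder`, the toy data, the learning rate, and the step count are all assumptions picked for illustration; real models usually add nonlinear layers and rely on an automatic differentiation library rather than manual gradients.

```python
import numpy as np

def train_linear_autoencoder(x, latent_dim, lr=0.05, steps=500, seed=0):
    """Fit x -> x @ W_enc -> (x @ W_enc) @ W_dec by gradient descent on MSE."""
    rng = np.random.default_rng(seed)
    n_features = x.shape[1]
    W_enc = rng.normal(scale=0.1, size=(n_features, latent_dim))
    W_dec = rng.normal(scale=0.1, size=(latent_dim, n_features))
    losses = []
    for _ in range(steps):
        # Forward pass: encode, then decode.
        z = x @ W_enc
        x_hat = z @ W_dec
        err = x_hat - x
        losses.append(float(np.mean(err ** 2)))

        # Backward pass: gradients of mean(err ** 2) with respect to each weight matrix.
        grad_x_hat = 2.0 * err / err.size
        grad_W_dec = z.T @ grad_x_hat
        grad_z = grad_x_hat @ W_dec.T
        grad_W_enc = x.T @ grad_z

        # Gradient descent step on both encoder and decoder weights.
        W_enc -= lr * grad_W_enc
        W_dec -= lr * grad_W_dec
    return W_enc, W_dec, losses

# Toy data: 200 points in 6 dimensions that only vary along 2 directions.
rng = np.random.default_rng(42)
x = rng.normal(size=(200, 2)) @ rng.normal(size=(2, 6))
x = (x - x.mean(axis=0)) / x.std(axis=0)  # standardise each feature

W_enc, W_dec, losses = train_linear_autoencoder(x, latent_dim=2)
print(f"loss at step 0: {losses[0]:.4f}, at last step: {losses[-1]:.4f}")
```

Because the toy data has only two underlying directions of variation, a two-dimensional bottleneck is enough to reconstruct it well, so the printed loss should fall substantially over training.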

What drives learning in autoencoders is constraint, such as a narrow bottleneck. Constraints force the model to focus on what is important, which makes it good at spotting subtle deviations: in anomaly detection, inputs that differ from the training data tend to reconstruct poorly.
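One way to picture the anomaly detection use case is to score each input by its reconstruction error. The sketch below swaps in a closed-form linear stand-in (the top principal directions from an SVD, which is PCA rather than a trained neural autoencoder) so that it stays short and deterministic. The synthetic data, the `reconstruction_error` helper, and the 99th-percentile threshold are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(1)

# "Normal" data lies close to a 2-D subspace of a 5-D space (synthetic example).
basis = rng.normal(size=(2, 5))
normal = rng.normal(size=(300, 2)) @ basis + 0.01 * rng.normal(size=(300, 5))

# Stand-in for training: take the top-2 principal directions of the normal data.
mean = normal.mean(axis=0)
_, _, vt = np.linalg.svd(normal - mean, full_matrices=False)
components = vt[:2]  # shape (2, 5)

def reconstruction_error(x):
    """Per-example mean squared error after encoding to 2-D and decoding back."""
    z = (x - mean) @ components.T    # encode
    x_hat = z @ components + mean    # decode
    return np.mean((x - x_hat) ** 2, axis=1)

# A point that follows the learned structure, and one that does not.
typical = rng.normal(size=(1, 2)) @ basis
outlier = 3.0 * rng.normal(size=(1, 5))

# Flag anything that reconstructs worse than 99% of the normal data.
threshold = np.percentile(reconstruction_error(normal), 99)
typical_err = reconstruction_error(typical)[0]
outlier_err = reconstruction_error(outlier)[0]
print(f"threshold={threshold:.6f} typical={typical_err:.6f} outlier={outlier_err:.6f}")
```

The same scoring idea carries over to a trained neural autoencoder: replace the encode and decode lines with the model's forward pass and choose the threshold from held-out normal data.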

Types of Autoencoders

The core concept is the same for all autoencoders. In practice, different tasks need different tweaks: some data is noisy, some tasks require generating new samples, and some benefit from clearer features. The overall structure stays the same, but each variant changes how the model learns so that it handles a specific challenge better.

- Vanilla autoencoder is the simplest setup. It uses fully connected layers to compress and rebuild the input. It works for basic tasks like dimensionality reduction, but it can simply learn to copy the input if not properly constrained.

- Undercomplete autoencoder uses a bottleneck smaller than the input size to force compression. It’s designed to focus on the most important features and is often used when linear methods like PCA aren’t enough.

- Sparse autoencoder limits how many neurons can activate at once, even in wider layers. This pushes neurons to specialize and is useful for learning clearer, more interpretable features.

- Denoising autoencoder is trained on deliberately corrupted inputs and learns to rebuild the clean version, which makes its features more robust to noise.

- Contractive autoencoder adds a penalty during training to reduce sensitivity to small input changes. It encourages the model to focus on stable, consistent patterns, not noise.

- Variational autoencoder (VAE) learns a distribution over the latent space instead of a single fixed code, which makes it well suited to generating new data such as synthetic images, text, or audio. Among its variants, β-VAEs weight the regularization term more heavily to encourage disentangled features and better interpretability, while VQ-VAEs replace the continuous latent space with a discrete codebook, enabling high-fidelity generation and serving as effective tokenizers in large multimodal systems.

- Convolutional autoencoder uses convolutional layers to handle images or spatial data. It’s ideal when structure matters, like compressing or denoising an image, because it preserves spatial patterns.

- Sequence-to-sequence autoencoder is made for sequential inputs like text or time series. It uses RNNs or Transformers to compress a full sequence into one vector and then reconstruct it step by step.
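Several of the variants above differ mainly in what is fed to the model or what is added to the loss. The helpers below sketch those differences in NumPy. They are illustrative assumptions rather than a canonical recipe: Gaussian noise is only one way to corrupt inputs for a denoising autoencoder, an L1 penalty is only one way to encourage sparsity, and the function names and default weights are hypothetical.

```python
import numpy as np

def add_gaussian_noise(x, std, rng):
    """Denoising: corrupt the input; the training target stays the clean x."""
    return x + rng.normal(scale=std, size=x.shape)

def sparsity_penalty(z, weight=1e-3):
    """Sparse: L1 penalty on latent activations, added to the reconstruction loss."""
    return weight * float(np.mean(np.abs(z)))

def reparameterize(mu, log_var, rng):
    """VAE: sample z = mu + sigma * eps. In an autodiff framework this keeps
    the sample differentiable with respect to mu and log_var."""
    eps = rng.normal(size=mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """VAE: KL(N(mu, sigma^2) || N(0, 1)), summed over latent dims, averaged over the batch."""
    kl = -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=1)
    return float(np.mean(kl))

def vae_loss(x, x_hat, mu, log_var, beta=1.0):
    """Reconstruction error plus beta-weighted KL term; beta > 1 gives a beta-VAE objective."""
    recon = float(np.mean(np.sum((x - x_hat) ** 2, axis=1)))
    return recon + beta * kl_to_standard_normal(mu, log_var)

rng = np.random.default_rng(0)
x = np.ones((4, 3))
noisy_x = add_gaussian_noise(x, std=0.1, rng=rng)   # model input for denoising
mu, log_var = np.zeros((4, 2)), np.zeros((4, 2))    # pretend encoder outputs
z = reparameterize(mu, log_var, rng)                # sampled latent codes, shape (4, 2)
print(noisy_x.shape, z.shape, vae_loss(x, x, mu, log_var, beta=4.0))
```

In each case the encoder and decoder can stay the same; only the input corruption or the extra loss term changes, which is why these variants are usually described as modifications of one core architecture.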

Challenges of Autoencoders

One of the biggest strengths of autoencoders is that they don’t need labeled data, making them especially useful in modern machine learning workflows where labeled datasets are often scarce. But like any tool, they come with trade-offs, and understanding both sides is important.

Without enough constraints, an autoencoder might just memorize the input. Since it compresses data, the reconstruction is inherently lossy, so it’s not ideal for tasks that demand pixel-perfect accuracy or lossless output. Sequence models can also lose information when dealing with long inputs, and small datasets often aren’t enough for effective training.

Autoencoders work best when you need flexible, unsupervised learning, but not when the goal is exact output, clear interpretability, or high precision from limited data.

Conclusion

Autoencoders are a basic idea in machine learning that helps models find patterns, simplify large amounts of data, and even create new content like images or text. They’re flexible and can learn without labeled input, but also come with challenges like overfitting and imperfect reconstruction. Still, they remain a key building block in many modern AI systems.