What is Automated Machine Learning?

Team Thinkstack

May 09, 2025

Demand for AI/ML keeps growing, but traditional machine learning is time-consuming, expensive, and resource-intensive. It relies on iterative, manual processes and on skilled practitioners with a strong understanding of data prep, algorithm selection, feature engineering, and fine-tuning, and that talent is scarce. As a result, ML is hard to access for users who don’t have deep expertise in data science.

AutoML (Automated Machine Learning) automates and streamlines the machine learning pipeline, speeds up experimentation, and reduces the need for constant expert oversight. The result is high-performing models with minimal manual effort, which can match or outperform models built through manual tuning.

It works by removing bottlenecks and breaking down the ML pipeline into modular components, then systematically trying combinations to find the best-performing setup. Many AutoML tools use optimization strategies such as Bayesian search or evolutionary algorithms to search the configuration space efficiently, instead of relying on trial-and-error or grid search.
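
As a rough illustration of that idea, the sketch below treats a pipeline as a set of interchangeable slots and compares exhaustive grid search with a budgeted random search. The component names and the `score` function are hypothetical stand-ins (a real system would train and validate a model for each configuration). Random search is used here only as the simplest alternative to grid search; it is not an implementation of Bayesian or evolutionary optimization.

```python
import itertools
import random

# Hypothetical search space: one slot per pipeline component.
SEARCH_SPACE = {
    "imputer": ["mean", "median"],
    "scaler": ["none", "standard", "minmax"],
    "model": ["logistic_regression", "random_forest", "gradient_boosting"],
    "learning_rate": [0.01, 0.1, 0.3],
}

def score(config):
    """Toy stand-in for 'train the pipeline and return validation accuracy'."""
    base = {"logistic_regression": 0.78, "random_forest": 0.84, "gradient_boosting": 0.86}
    s = base[config["model"]]
    s += 0.02 if config["scaler"] != "none" else 0.0
    s += 0.01 if config["imputer"] == "median" else 0.0
    s -= abs(config["learning_rate"] - 0.1) * 0.1  # pretend 0.1 is the sweet spot
    return round(s, 4)

def grid_search(space):
    """Evaluate every combination; the cost multiplies with each added slot."""
    keys = list(space)
    configs = [dict(zip(keys, values)) for values in itertools.product(*space.values())]
    return max(configs, key=score), len(configs)

def random_search(space, n_trials, seed=0):
    """Evaluate only n_trials randomly sampled combinations."""
    rng = random.Random(seed)
    trials = [{k: rng.choice(v) for k, v in space.items()} for _ in range(n_trials)]
    return max(trials, key=score), n_trials

best_grid, grid_cost = grid_search(SEARCH_SPACE)
best_random, random_cost = random_search(SEARCH_SPACE, n_trials=15)
print("grid:  ", best_grid, score(best_grid), "evaluations:", grid_cost)
print("random:", best_random, score(best_random), "evaluations:", random_cost)
```

Grid search here needs 54 evaluations because the slot sizes multiply (2 × 3 × 3 × 3), while random search caps the cost at a fixed number of trials. Smarter strategies aim to spend that fixed budget on more promising regions of the space.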

Key challenges in manual ML workflows include:

- Data prep is slow and inconsistent: Cleaning, formatting, and transforming raw inputs often require custom handling for each dataset.

- Feature engineering is effort-heavy: Creating meaningful features takes domain knowledge and trial-and-error, especially for complex problems.

- Model selection and tuning are manual: Comparing algorithms and adjusting settings is time-consuming and often involves repeated cycles of testing.

- Labeled data isn’t always easy to get: Supervised tasks depend on labeled examples, but collecting and tagging data can be costly and hard to scale.

AutoML addresses these challenges directly. It automates repetitive and complex parts of the pipeline, letting experts focus on more important and valuable tasks, leading to direct benefits like faster development cycles, higher productivity, broader accessibility, scalability/repeatability, and stronger performance. At scale, AutoML reduces the cost and complexity of ML adoption. It enables faster deployment, better resource allocation, and more consistent project results.

How AutoML Works

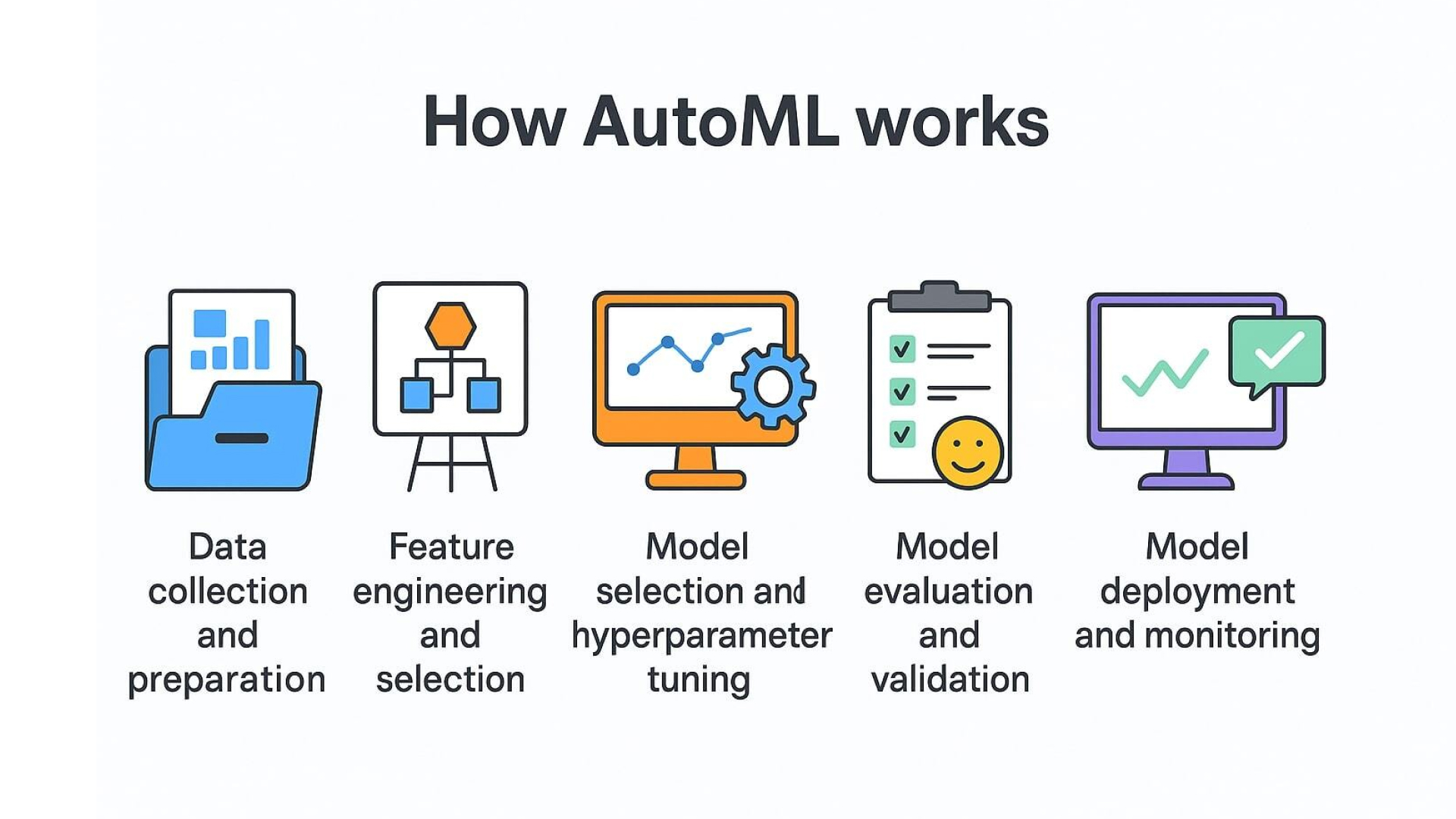

The core workflow of AutoML includes five major stages: data preparation, feature engineering, model selection, evaluation, and deployment.

- Data collection and preparation: This is the foundational step of any machine learning pipeline, and AutoML handles much of it automatically. It begins by collecting data from various sources, then prepares it for training through cleaning, formatting, and preprocessing. AutoML systems filter out low-quality or irrelevant data and automate tasks like missing value imputation, normalization, and deduplication using tools such as Katara, BoostClean, and AlphaClean.

- Feature engineering and selection: Once data is clean, it is transformed into meaningful features for the model to learn from. AutoML automates feature creation and tuning using methods like Bayesian optimization and genetic-style search to find what works best.

- Model selection and hyperparameter tuning: AutoML tests multiple algorithms in parallel and tunes them to quickly identify the best-performing model and configuration for the task. Platforms like Auto-sklearn, TPOT, H2O, and Vertex AI use optimization techniques such as Bayesian search and genetic algorithms to explore different settings, like learning rates, layer sizes, or regularization, and zero in on the most effective setup with minimal manual input.

- Model evaluation and validation: Once trained, models are evaluated using techniques like cross-validation, holdout testing, and early stopping to ensure reliability and prevent overfitting. AutoML measures performance with metrics such as accuracy, F1-score, AUC, or RMSE, and provides detailed reports on feature importance and explainability, making results easier to interpret without manual work.

- Model deployment and monitoring: Models are deployed as APIs or services, with AutoML platforms handling scaling, versioning, and updates. In production, they monitor performance and detect issues like prediction drift (when input data changes over time) or training-serving skew (when live data differs from training data). Advanced platforms like Vertex AI support automated monitoring and retraining to keep models accurate over time.
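
To make the first four stages concrete, here is a minimal sketch in plain Python that deduplicates and imputes a tiny dataset, normalizes the feature, and then uses k-fold cross-validation to pick between two candidate models by RMSE. The data values, function names, and the two candidates are all invented for illustration; this is not how any of the platforms named above is implemented, only the general shape of the loop.

```python
import statistics

# Hypothetical raw rows: (feature, target); None marks a missing feature value.
raw = [(1.0, 2.1), (2.0, 3.9), (2.0, 3.9), (None, 6.2), (4.0, 8.1),
       (5.0, 9.8), (6.0, 12.2), (7.0, 13.9), (8.0, 16.1), (9.0, 18.0)]

def prepare(rows):
    """Deduplicate, impute missing features with the mean, min-max normalize."""
    unique = list(dict.fromkeys(rows))                 # order-preserving dedup
    observed = [x for x, _ in unique if x is not None]
    fill = statistics.mean(observed)
    filled = [(fill if x is None else x, y) for x, y in unique]
    lo = min(x for x, _ in filled)
    hi = max(x for x, _ in filled)
    return [((x - lo) / (hi - lo), y) for x, y in filled]

def fit_mean(train):
    """Baseline candidate: always predict the mean training target."""
    mean_y = statistics.mean(y for _, y in train)
    return lambda x: mean_y

def fit_linear(train):
    """Second candidate: one-feature least-squares line."""
    mx = statistics.mean(x for x, _ in train)
    my = statistics.mean(y for _, y in train)
    sxy = sum((x - mx) * (y - my) for x, y in train)
    sxx = sum((x - mx) ** 2 for x, _ in train)
    slope = sxy / sxx
    return lambda x: my + slope * (x - mx)

def cross_val_rmse(fit, rows, k=3):
    """Average RMSE over k folds; each fold is held out exactly once."""
    scores = []
    for i in range(k):
        test = rows[i::k]
        train = [r for j, r in enumerate(rows) if j % k != i]
        model = fit(train)
        mse = statistics.mean((model(x) - y) ** 2 for x, y in test)
        scores.append(mse ** 0.5)
    return statistics.mean(scores)

data = prepare(raw)
candidates = {"mean_baseline": fit_mean, "linear": fit_linear}
results = {name: cross_val_rmse(fit, data) for name, fit in candidates.items()}
best = min(results, key=results.get)
print(results, "->", best)
```

Scoring every candidate on held-out folds rather than on its own training data is what lets the selection step guard against overfitting.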

Advanced Components in AutoML

- Neural architecture search (NAS) automates the design of neural networks by searching for the best-performing architecture from a defined space. It uses methods like reinforcement learning, evolutionary algorithms, and gradient-based optimization to discover efficient structures.

- Hyperparameter optimization (HPO) is an essential step in AutoML that automatically tunes a model’s settings to achieve the best performance. It replaces manual trial-and-error with search strategies like random search, Bayesian optimization, and bandit-based methods.

- Ensemble learning involves combining the predictions of multiple models to achieve higher accuracy and more robust results than any single model could provide. Tools like Auto-sklearn and SageMaker Autopilot automatically build ensembles from top-performing models during the search process.

- Meta-learning allows AutoML systems to leverage insights from previously solved tasks when tackling new, similar problems. That experience helps recommend models, speed up optimization, and reduce search time, so the system becomes more efficient as it sees more tasks.
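
As one example of the bandit-based methods mentioned under HPO, the sketch below implements a simplified form of successive halving: every candidate gets a small budget, the weaker half is dropped, and the survivors are re-evaluated with a doubled budget. The candidate learning rates and the noisy `evaluate` function are hypothetical stand-ins for real training runs, and production implementations add details that are omitted here.

```python
import math
import random

def successive_halving(configs, evaluate, min_budget=1, eta=2):
    """Keep the top 1/eta of configs each round while multiplying the budget by eta."""
    survivors, budget, history = list(configs), min_budget, []
    while len(survivors) > 1:
        ranked = sorted(survivors, key=lambda c: evaluate(c, budget), reverse=True)
        history.append((budget, len(survivors)))       # (budget per config, configs evaluated)
        survivors = ranked[:max(1, len(survivors) // eta)]
        budget *= eta
    return survivors[0], history

# Hypothetical candidates: learning rates on a log scale.
candidates = [0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1.0, 3.0]
rng = random.Random(42)

def evaluate(lr, budget):
    """Toy noisy score that peaks at lr=0.1; noise shrinks as budget grows."""
    true_quality = 1.0 - abs(math.log10(lr) + 1.0) / 3.0
    return true_quality + rng.gauss(0, 0.2 / budget)

winner, history = successive_halving(candidates, evaluate)
spent = sum(budget * n for budget, n in history)
print("winner:", winner, "rounds:", history, "budget units spent:", spent)
```

With eight candidates this spends 24 budget units, compared with 32 if every candidate were evaluated at the final budget of 4. The trade-off is that a good configuration can be eliminated early when low-budget scores are noisy.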

Challenges in AutoML

While AutoML offers speed, automation, and accessibility, it also comes with trade-offs that can’t be ignored.

- Black box models: AutoML models work well but are hard to interpret. This limits trust, especially in critical domains.

- Bias and overfitting: AutoML can amplify bias or overfit without checks. Fairness tools and early stopping help manage this.

- High computational cost: Search processes like NAS and HPO can be compute-heavy.

- Limited flexibility: AutoML may not support complex or custom workflows. Manual tuning still outperforms in niche cases.

- Reproducibility issues: Outcomes may differ between runs because many AutoML methods rely on stochastic search processes.

- Overfitting to benchmarks: Some AutoML tools perform well on benchmarks but don’t generalize beyond them.

- Workflow gaps: Not all tools automate the full pipeline; feature engineering and data prep often need manual input.

- Lack of domain knowledge: Most systems don’t factor in task-specific constraints or expert input during search.

- Poor transferability: Models or architectures found for one task don’t always adapt well to others.

- Theoretical gaps: There’s limited understanding of why certain AutoML methods work or fail.

- Human replacement myth: AutoML augments, not replaces, human expertise. Overselling full automation is risky.

- Scope limitations: Automating advanced tasks like RL or multimodal learning remains a major challenge.

- Collaboration challenges: Building systems where humans and AutoML work together effectively is still an open problem.
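
The reproducibility point can be illustrated with a small, hypothetical stochastic search: unless the random seed is pinned, each run samples different trials and can settle on a different result. The objective and its peak at 0.37 are made up for the sketch, and real AutoML tools may have further sources of nondeterminism (parallel scheduling, hardware) that a seed alone does not remove.

```python
import random

def stochastic_search(seed=None, n_trials=20):
    """Randomly sample a hypothetical hyperparameter and keep the best toy score."""
    rng = random.Random(seed)          # seed=None means unseeded: varies per run
    best_value, best_score = None, float("-inf")
    for _ in range(n_trials):
        value = rng.uniform(0.0, 1.0)
        score = -(value - 0.37) ** 2   # toy objective with a made-up peak at 0.37
        if score > best_score:
            best_value, best_score = value, score
    return best_value

run_a = stochastic_search(seed=123)
run_b = stochastic_search(seed=123)
run_c = stochastic_search(seed=456)
print(run_a == run_b)   # identical seeds replay the same trials
print(run_a, run_c)     # different seeds generally land on different values
```

Recording the seed alongside the chosen configuration is a cheap way to make a search run replayable.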

Conclusion

AutoML is widely used across industries like healthcare, finance, marketing, and manufacturing, and its relevance continues to grow as AI adoption scales. It makes ML faster and more accessible, letting automation handle the heavy lifting while people focus on smart, real-world decisions. It also lets more people, even those without deep technical backgrounds, access and apply machine learning in real-world projects.