What are Autonomous AI Agents?

Team Thinkstack

May 16, 2025

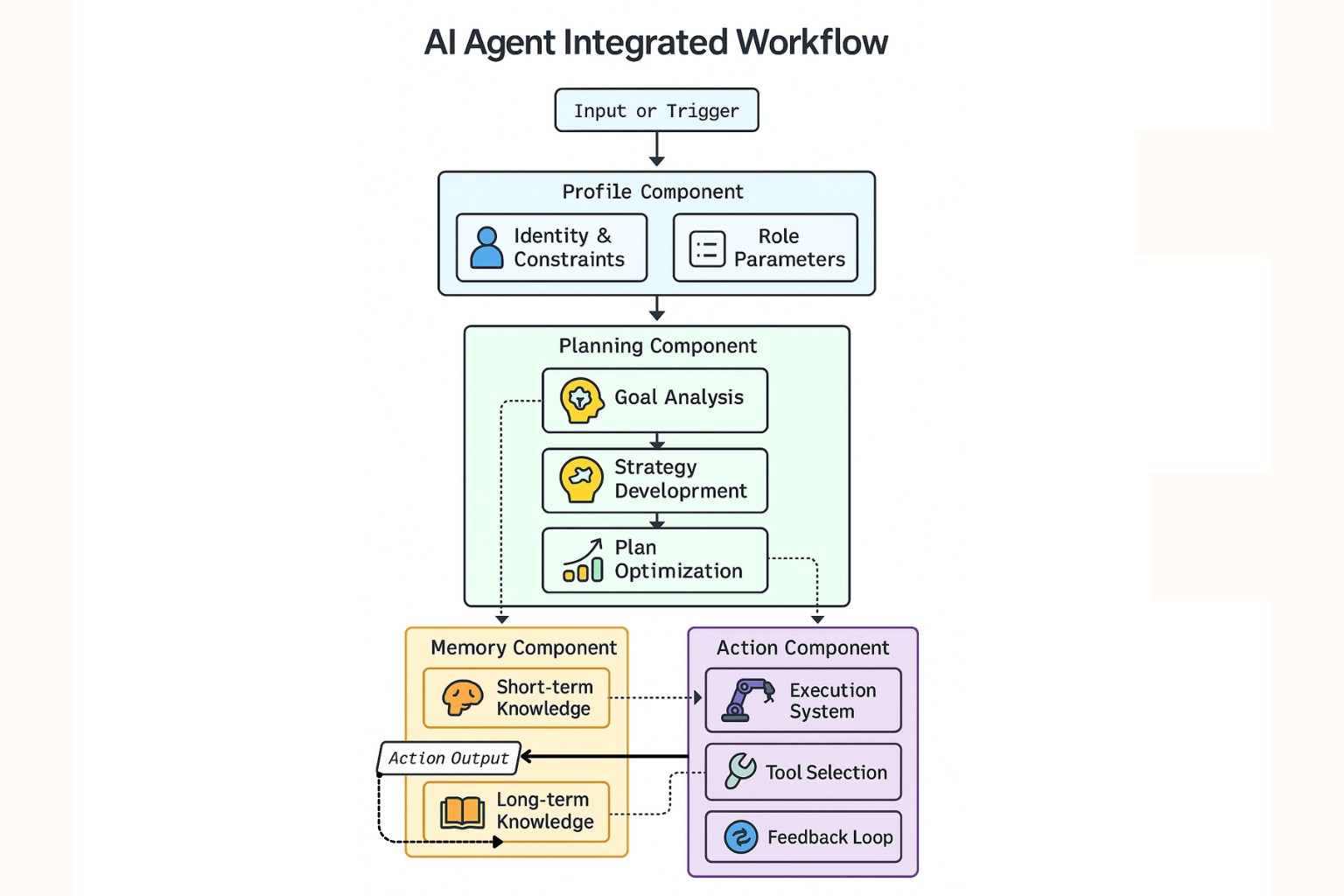

Autonomous AI agents combine the language capabilities of large language models (LLMs) with planning, reasoning, decision-making, and action. They represent a major shift in artificial intelligence (AI) because they go beyond what traditional AI systems or standalone LLMs do, such as generating text or performing narrowly defined tasks. An AI agent can interpret intent, create strategies, interact with external tools and data sources, evaluate results to make decisions, and adapt, all with minimal human oversight.

Their modular structure and added capabilities let autonomous AI agents operate in contexts that require continuous reasoning and flexibility. These capabilities include:

- Planning breaks down high-level objectives into executable steps.

- Reasoning involves thinking through tasks, solving problems, and making informed decisions.

- Tool usage enables access to APIs, web interfaces, databases, and software utilities to perform tasks or retrieve information as needed.

- Adaptation adjusts behavior based on feedback, success criteria, or updated inputs.

- Reflection evaluates past performance to refine future strategies.

Many agents also include a self-evaluation loop, reviewing outputs and adjusting their approach. This creates a flexible feedback cycle that enables the system to learn and optimize over time.
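The plan, act, and self-evaluate cycle described above can be sketched in a few lines. This is a minimal illustration, not a production design: `run_agent`, `toy_plan`, `toy_act`, and `toy_evaluate` are hypothetical names, and the toy functions stand in for what would normally be LLM or tool calls.

```python
from typing import Callable, List


def run_agent(
    goal: str,
    plan: Callable[[str], List[str]],
    act: Callable[[str, int], str],
    evaluate: Callable[[str], bool],
    max_attempts: int = 3,
) -> List[str]:
    """Plan a goal into steps, act on each step, and retry when evaluation fails."""
    results = []
    for step in plan(goal):
        output = ""
        for attempt in range(1, max_attempts + 1):
            output = act(step, attempt)
            if evaluate(output):
                break  # output accepted; move on to the next step
        results.append(output)
    return results


# Toy stand-ins (assumptions): a real agent would call an LLM or a tool here.
def toy_plan(goal: str) -> List[str]:
    return [part.strip() for part in goal.split(" then ")]


def toy_act(step: str, attempt: int) -> str:
    # The first attempt is deliberately a "draft" so the retry path runs.
    return f"draft: {step}" if attempt == 1 else f"done: {step}"


def toy_evaluate(output: str) -> bool:
    return output.startswith("done")


print(run_agent("collect data then write summary", toy_plan, toy_act, toy_evaluate))
# -> ['done: collect data', 'done: write summary']
```

Each step is retried until the evaluator accepts the output or the attempt budget runs out, which is the simplest form of the feedback cycle.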

As the technology has advanced, multiple agents can work together as a team. These multi-agent systems, coordinated through frameworks like CrewAI or Swarm, allow for complex collaborative problem-solving, mimicking human teams by assigning specialized roles, sharing information, and coordinating workflows.

By automating complex tasks, such as assisting with diagnostics in healthcare, research in science, coding in software development, and support in customer service, these agents reduce manual effort and speed up outcomes.

Core Capabilities and Architecture

To understand how an agent works in real scenarios, it’s important to look at how the system is built and how its parts work together.

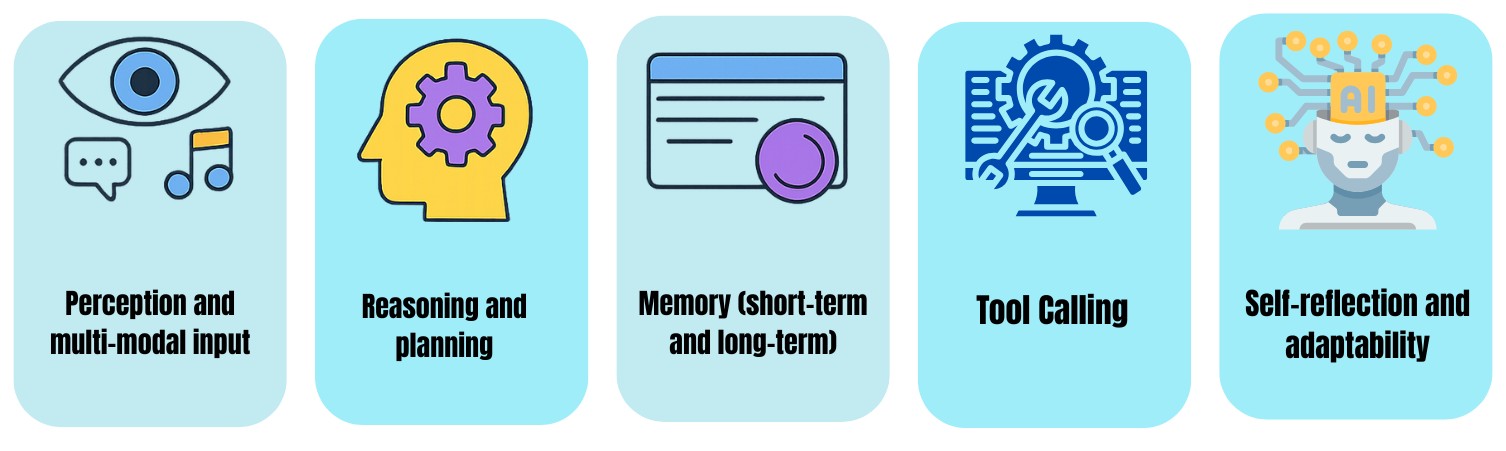

Perception and multi-modal input

Agents can process inputs such as text, images, audio, and sensor data. Technologies like computer vision, speech recognition, and natural language processing (NLP) let them interpret and respond to these diverse inputs.

Reasoning and planning

Instead of reacting to inputs with fixed responses, autonomous AI agents break down complex goals into subtasks, reason through the logic, and adapt strategies in real time. Techniques like Chain-of-Thought (CoT) and frameworks such as ReAct and Tree-of-Thought (ToT) allow agents to solve problems step-by-step.

Memory (short-term and long-term)

Memory enables agents to learn from interactions, remember user preferences, store task history, and improve through experience. Short-term memory is typically managed through the model’s context window, while long-term memory typically relies on external storage such as vector stores. Retaining and recalling information is essential for maintaining context across steps and sessions.
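The split between short-term and long-term memory can be sketched as follows. This is a toy illustration under stated assumptions: `AgentMemory`, `embed`, and `cosine` are hypothetical names, a bounded `deque` stands in for the context window, and a bag-of-words vector stands in for the learned embeddings a real vector store would use.

```python
import math
from collections import Counter, deque


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words counts (real systems use learned embeddings)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class AgentMemory:
    def __init__(self, window: int = 3):
        self.short_term = deque(maxlen=window)  # stands in for the context window
        self.long_term = []                     # stands in for an external vector store

    def remember(self, text: str) -> None:
        self.short_term.append(text)            # oldest entry drops out when full
        self.long_term.append((embed(text), text))

    def recall(self, query: str, k: int = 1) -> list:
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self.long_term, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


memory = AgentMemory(window=2)
for note in ["user prefers dark mode", "invoice 12 was paid", "meeting moved to friday"]:
    memory.remember(note)

print(list(memory.short_term))  # -> ['invoice 12 was paid', 'meeting moved to friday']
print(memory.recall("which mode does the user prefer"))  # -> ['user prefers dark mode']
```

The oldest note has already left the short-term window, yet similarity search over the long-term store still retrieves it.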

Tool calling

Tool calling allows an AI agent to go beyond text generation by interacting with APIs, databases, code environments, and web tools. Using frameworks like LangChain, LlamaIndex, and CrewAI, the agent selects and executes tools to retrieve data, perform real-time actions, or manage workflows. This capability is essential for autonomy because it enables the agent to act, not just respond, though it also introduces challenges in tool coordination, reliability, and security.
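To make the mechanism concrete, here is a framework-free sketch of tool dispatch. It does not reproduce the API of LangChain, LlamaIndex, or CrewAI. The registry, the `tool` decorator, the example tools, and the JSON call shape `{"tool": ..., "args": {...}}` are all assumptions made for illustration.

```python
import json

TOOLS = {}


def tool(fn):
    """Register a function under its name so the agent can call it."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: float, b: float) -> float:
    return a + b


@tool
def lookup_capital(country: str) -> str:
    # Hypothetical in-memory data standing in for a database or API.
    return {"France": "Paris", "Japan": "Tokyo"}.get(country, "unknown")


def execute_tool_call(raw_call: str):
    """Parse a JSON tool call of the form {"tool": ..., "args": {...}} and run it."""
    call = json.loads(raw_call)
    name = call["tool"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call["args"])


# In a real agent these strings would be produced by the model.
print(execute_tool_call('{"tool": "add", "args": {"a": 2, "b": 3}}'))  # -> 5
print(execute_tool_call('{"tool": "lookup_capital", "args": {"country": "Japan"}}'))  # -> Tokyo
```

Rejecting unknown tool names at the dispatch point is one small way to contain the reliability and security concerns mentioned above.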

Self-reflection and adaptability

Performance is continuously evaluated through feedback loops that help refine actions, adjust strategies, and improve outcomes. By learning from past results, the agent becomes more efficient and accurate over time, making adaptability essential in dynamic, long-term tasks.
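One simple way to picture such a feedback loop is greedy success-rate tracking: record whether each strategy worked and prefer the one with the best record so far. This technique is swapped in for illustration and is not necessarily how any particular agent adapts. `StrategySelector` and the strategy names are hypothetical.

```python
from collections import defaultdict


class StrategySelector:
    """Track success rates per strategy and prefer the best one so far."""

    def __init__(self, strategies):
        self.strategies = list(strategies)
        self.successes = defaultdict(int)
        self.attempts = defaultdict(int)

    def record(self, strategy: str, success: bool) -> None:
        """Store feedback from one completed attempt."""
        self.attempts[strategy] += 1
        if success:
            self.successes[strategy] += 1

    def success_rate(self, strategy: str) -> float:
        tried = self.attempts[strategy]
        # Untried strategies get an optimistic 1.0 so each is tried at least once.
        return self.successes[strategy] / tried if tried else 1.0

    def choose(self) -> str:
        """Pick the strategy with the highest observed success rate."""
        return max(self.strategies, key=self.success_rate)


selector = StrategySelector(["keyword_search", "semantic_search"])
feedback = [
    ("keyword_search", False),
    ("keyword_search", True),
    ("semantic_search", True),
    ("semantic_search", True),
]
for strategy, ok in feedback:
    selector.record(strategy, ok)

print(selector.choose())  # -> semantic_search
```

A purely greedy rule can lock onto an early winner, so practical systems usually add some exploration.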

Types of Autonomous AI Agents

Autonomous AI agents can be classified by how they operate and take actions, ranging from simple rule-based models to advanced learning and collaborative systems.

- Reactive agents respond directly to inputs using predefined rules; no learning or memory is involved.

- Deliberative agents rely on internal models to plan and reason through tasks, but require more computational resources.

- Hybrid agents blend quick reactions with planning, making them adaptable in changing conditions.

- Model-based agents rely on an internal map of the environment to predict results and guide decisions.

- Goal-based agents choose actions by evaluating how well each option moves them toward a defined goal.

- Utility-based agents weigh different options and select the one that delivers the highest benefit.

- Learning agents adapt their behavior over time by learning from feedback and past experience.

- LLM-based agents use large language models to process and generate natural language, enabling them to follow instructions and reason.

- Multi-agent systems (MAS) involve several agents working together or independently to handle distributed challenges.
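The difference between two of these types, reactive and utility-based, can be shown with a toy thermostat. Everything here is an assumption made for illustration: the function names, the temperature thresholds, the action effects, and the utility formula.

```python
def reactive_agent(temperature: float) -> str:
    """Reactive: a fixed rule maps the current input to an action; no memory or model."""
    return "cool" if temperature > 25 else "idle"


def utility_agent(temperature: float, target: float = 22.0) -> str:
    """Utility-based: predict the result of each action and pick the highest utility."""
    # Hypothetical effect of each action: (temperature change, energy cost).
    actions = {"cool": (-3.0, 1.0), "heat": (3.0, 1.0), "idle": (0.0, 0.0)}

    def utility(action: str) -> float:
        delta, energy = actions[action]
        predicted = temperature + delta
        # Closer to the target is better; energy use carries a small penalty.
        return -abs(predicted - target) - 0.5 * energy

    return max(actions, key=utility)


for temp in (18.0, 23.0, 30.0):
    print(temp, reactive_agent(temp), utility_agent(temp))
# -> 18.0 idle heat
# -> 23.0 idle idle
# -> 30.0 cool cool
```

At 18 degrees the reactive rule does nothing because no rule covers that case, while the utility-based agent weighs all three options and chooses to heat.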

Limitations of Autonomous AI Agents

Reasoning gaps and hallucinations

Even with reasoning frameworks, an agent can still produce inaccurate outputs, break multi-step logic, or hallucinate facts, especially in complex or unfamiliar tasks.

Tool selection and execution

Tool selection can go wrong, and chained actions can fail mid-process. Errors in APIs or web tools then reduce task completion and reliability.
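A small sketch shows why a mid-chain failure matters: each step depends on the previous output, so one failing tool leaves the task partially complete. `run_chain` and the step functions are hypothetical, and the timeout is simulated rather than produced by a real API.

```python
from typing import Callable, List, Tuple


def run_chain(steps: List[Tuple[str, Callable[[object], object]]], value: object) -> dict:
    """Run steps in order, passing each output to the next; stop at the first failure."""
    completed = []
    for name, step in steps:
        try:
            value = step(value)
        except Exception as exc:
            # Later steps never run, so the task is left partially complete.
            return {"ok": False, "completed": completed, "failed_at": name, "error": str(exc)}
        completed.append(name)
    return {"ok": True, "completed": completed, "result": value}


def fetch(_):
    return "42"


def parse(text):
    return int(text)


def flaky_api(_):
    raise TimeoutError("upstream API timed out")  # simulated tool failure


def report(n):
    return f"value={n}"


good = run_chain([("fetch", fetch), ("parse", parse), ("report", report)], None)
bad = run_chain([("fetch", fetch), ("call_api", flaky_api), ("report", report)], None)
print(good)  # -> {'ok': True, 'completed': ['fetch', 'parse', 'report'], 'result': 'value=42'}
print(bad["failed_at"], bad["completed"])  # -> call_api ['fetch']
```

Recording which step failed and what had already completed makes such failures easier to diagnose, even though it does not prevent them.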

Lack of transparency

It’s not always clear how decisions are made, especially when combining reasoning, memory, and tool use, making outcomes harder to audit or trust.

Security and control risks

When connected to external tools or systems, a flawed prompt or unsafe action can trigger real-world consequences, data leaks, or misuse.

Scalability and resource cost

Running agents with long-context LLMs, tools, and real-time reasoning often demands high compute, which can limit scalability.

Evaluation remains inconsistent

There’s no standard way to measure performance across diverse, real-world tasks, making it hard to compare, improve, or deploy agents at scale.

Conclusion

Autonomous AI agents are becoming a natural extension of how work is done, quietly handling decisions, managing tools, and adapting to changing goals with minimal oversight. As systems grow more capable, the integration of memory, reasoning, and action is making AI more useful across everyday applications, not just complex tasks. This will let humans focus on higher-value work while the system takes care of what can be delegated. To get the most return on investment (ROI), one must understand how these agents work, where they fit, and what their limitations are.