What is Batch Size?

Team Thinkstack

May 21, 2025

Training neural networks and deep learning models efficiently, especially on large datasets, comes with several challenges. Processing the entire dataset in one go can be too slow or memory-intensive, while updating the model after every single example is often unstable and inefficient.

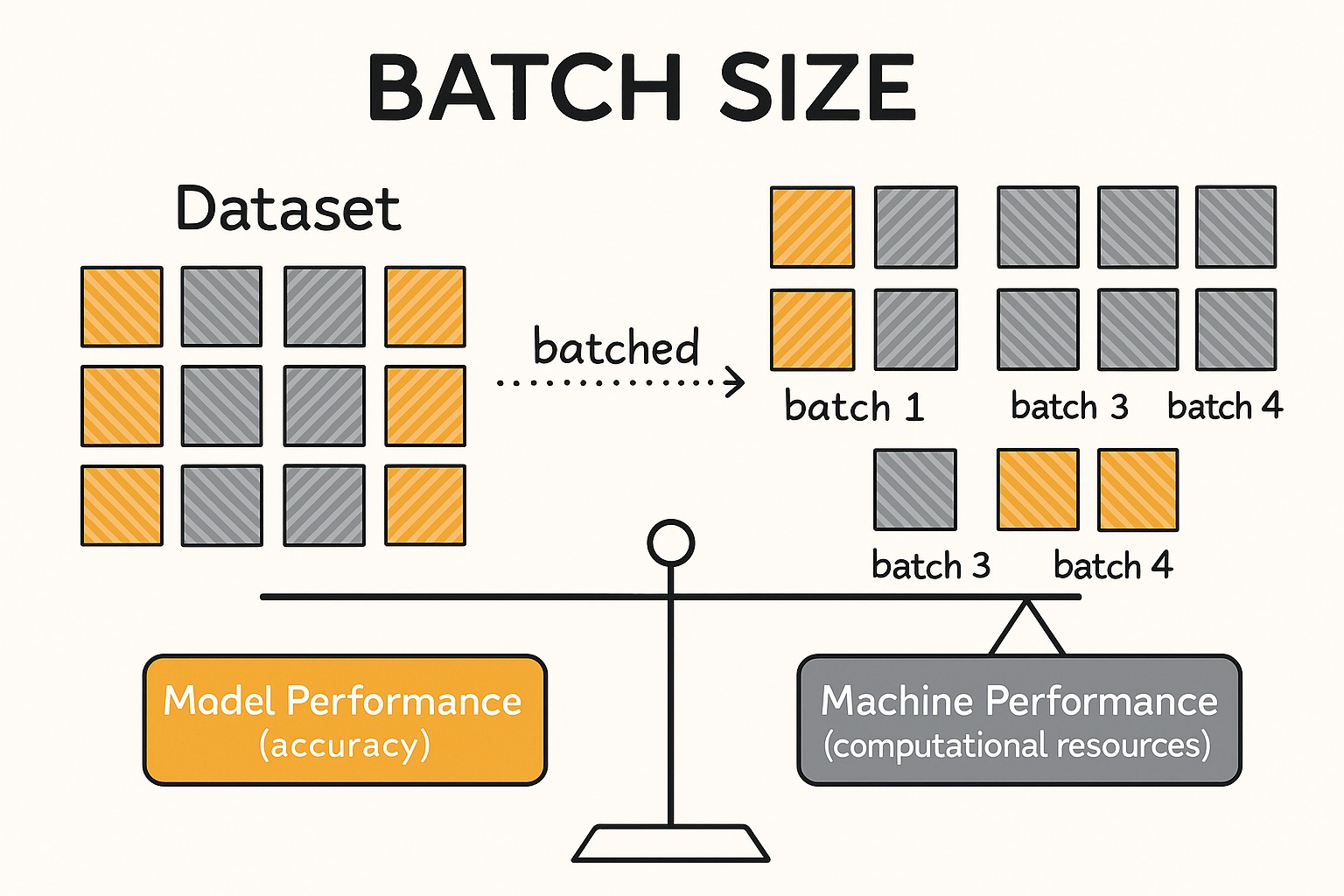

Batch size, a fundamental concept in deep learning, helps solve this problem by breaking the dataset into smaller, manageable groups of samples. Instead of processing all data at once, the model processes a fixed number of samples together in what’s called a batch.

Batch size refers to how many training examples are passed through the network in a single forward and backward pass. It is a key training hyperparameter that directly affects how the model learns. After processing each batch, the model calculates the loss and uses backpropagation to compute gradients, which indicate how a change in each weight or bias would affect the error. It then updates those weights and biases to reduce the error, using gradient descent or one of its variants.
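To make the forward pass, loss, gradient, and update sequence concrete, here is a minimal NumPy sketch of a single training step on one batch. It assumes a plain linear model with mean squared error rather than a real neural network, and the names `batch_step`, `lr`, and the toy data are illustrative choices, not part of any library.

```python
import numpy as np

def batch_step(w, b, X_batch, y_batch, lr=0.1):
    """One forward pass, backward pass, and parameter update on a single batch."""
    n = X_batch.shape[0]                    # batch size
    preds = X_batch @ w + b                 # forward pass
    error = preds - y_batch
    loss = np.mean(error ** 2)              # mean squared error over the batch
    grad_w = 2.0 * X_batch.T @ error / n    # gradient of the loss w.r.t. the weights
    grad_b = 2.0 * np.mean(error)           # gradient of the loss w.r.t. the bias
    w = w - lr * grad_w                     # gradient descent update
    b = b - lr * grad_b
    return w, b, loss

# Toy data (assumed for illustration): one batch of 32 samples with 3 features.
rng = np.random.default_rng(0)
X = rng.normal(size=(32, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + 0.3

w, b = np.zeros(3), 0.0
w, b, loss_before = batch_step(w, b, X, y)   # loss measured before the first update
_, _, loss_after = batch_step(w, b, X, y)    # loss measured after the first update
print(loss_before, loss_after)
```

The second call reports the loss at the updated parameters, so comparing the two values shows the effect of one update on this batch.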

Gradient descent methods are primarily categorized based on how many training samples they use for each parameter update.

- Batch Gradient Descent processes the entire dataset as a single batch. It computes the loss and gradients across all training examples and performs just one parameter update per epoch. This gives an exact, stable gradient for the training set, which can make the optimization path more predictable. However, the need to load and compute over the full dataset at once makes it computationally heavy and memory-intensive, especially for large datasets. A clean gradient also does not guarantee the most generalizable solution, since this method can tend to converge to sharp minima in the loss landscape.

- Stochastic Gradient Descent (SGD) updates the model's parameters after processing each individual sample. Here, the batch size is one. This leads to frequent and fast updates but introduces substantial noise into the gradient estimates. That randomness can help the model escape sharp local minima and potentially land in flatter regions that generalize better to unseen data. However, training with such small batches can also cause instability and makes inefficient use of modern hardware, especially on large datasets.

- Mini-Batch Gradient Descent is the most common method used in deep learning today. The dataset is divided into mini-batches, each containing more than one sample but fewer than the full dataset. Typical sizes are 16, 32, 64, or 128 samples per batch. The model adjusts its parameters after processing each mini-batch during training. This approach strikes a balance between the noisy updates of SGD and the heavy computational load of full-batch training. It provides more stable learning, makes better use of parallel hardware, and introduces just enough randomness to help with generalization. The mini-batch approach also offers flexibility in controlling memory usage, training speed, and model stability.
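The three variants above differ only in the batch size passed to the same loop. The sketch below, assuming a toy linear regression problem and a hypothetical helper named `train_one_epoch`, counts how many parameter updates each variant performs in one epoch.

```python
import numpy as np

def train_one_epoch(X, y, batch_size, lr=0.05, seed=0):
    """Run one epoch of gradient descent on a linear model; return weights and update count."""
    rng = np.random.default_rng(seed)
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    order = rng.permutation(n_samples)           # shuffle the sample order once
    updates = 0
    for start in range(0, n_samples, batch_size):
        idx = order[start:start + batch_size]    # indices of the current batch
        Xb, yb = X[idx], y[idx]
        grad = 2.0 * Xb.T @ (Xb @ w - yb) / len(idx)   # MSE gradient on this batch
        w -= lr * grad                           # one parameter update per batch
        updates += 1
    return w, updates

# Toy dataset (assumed for illustration): 256 samples, 4 features.
rng = np.random.default_rng(1)
X = rng.normal(size=(256, 4))
y = X @ np.array([2.0, -1.0, 0.5, 3.0])

_, full_updates = train_one_epoch(X, y, batch_size=len(X))   # batch gradient descent
_, sgd_updates = train_one_epoch(X, y, batch_size=1)         # stochastic gradient descent
_, mini_updates = train_one_epoch(X, y, batch_size=32)       # mini-batch gradient descent
print(full_updates, sgd_updates, mini_updates)               # 1 256 8
```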

One complete pass through the dataset is called an epoch. An epoch consists of multiple iterations, with each iteration handling one batch. The number of iterations per epoch is the dataset size divided by the batch size, rounded up when the final batch is only partially filled.
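As a quick worked example of that relationship, the helper below computes iterations per epoch. The function name and the `drop_last` flag (which discards a final, partially filled batch) are assumptions of this sketch, and the dataset size of 50,000 is chosen only for illustration.

```python
import math

def iterations_per_epoch(dataset_size, batch_size, drop_last=False):
    """Number of batches needed to see every sample once."""
    if drop_last:
        # Discard the final batch if it is smaller than batch_size.
        return dataset_size // batch_size
    # Otherwise the leftover samples form one extra, smaller batch.
    return math.ceil(dataset_size / batch_size)

print(iterations_per_epoch(50_000, 128))                  # 391
print(iterations_per_epoch(50_000, 128, drop_last=True))  # 390
```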

By adjusting the batch size, practitioners can manage memory usage, influence training speed, and control how frequently the model updates its parameters. Batch size also interacts closely with other hyperparameters, such as the learning rate, affecting how fast or smoothly the model converges.

Batch size affects nearly every aspect of training, from computational efficiency to model accuracy.

- Learning speed: Smaller batches produce more parameter updates per epoch, while larger batches make better use of parallel hardware.

- Stability: Larger batches produce more stable gradient estimates, while smaller batches introduce noise that can help generalization.

- Memory usage: Bigger batches require more RAM or GPU memory.

- Generalization: The batch size affects how well the model generalizes to new data versus overfitting to the training set.
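The stability point can be checked empirically. The sketch below, assuming a one-parameter linear model on synthetic data, draws many random mini-batches at a fixed weight value and measures how much the gradient estimate varies for a small and a large batch size. All names and sizes here are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (assumed for illustration): y = 3x plus noise.
X = rng.normal(size=(10_000, 1))
y = 3.0 * X[:, 0] + rng.normal(scale=1.0, size=10_000)
w = 0.0   # evaluate every gradient at the same fixed weight

def minibatch_grad(batch_size):
    """MSE gradient with respect to w, estimated from one random mini-batch."""
    idx = rng.choice(len(X), size=batch_size, replace=False)
    xb, yb = X[idx, 0], y[idx]
    return np.mean(2.0 * xb * (w * xb - yb))

def grad_spread(batch_size, trials=500):
    """Standard deviation of the gradient estimate across many random batches."""
    return np.std([minibatch_grad(batch_size) for _ in range(trials)])

spread_small = grad_spread(8)
spread_large = grad_spread(512)
print(spread_small, spread_large)   # the larger batch gives the smaller spread
```

The spread shrinks as the batch grows because each estimate averages over more samples, which is the sense in which larger batches give more stable gradients.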

As a result, batch size is treated as a primary control lever during model development and experimentation.

How to Choose the Right Batch Size

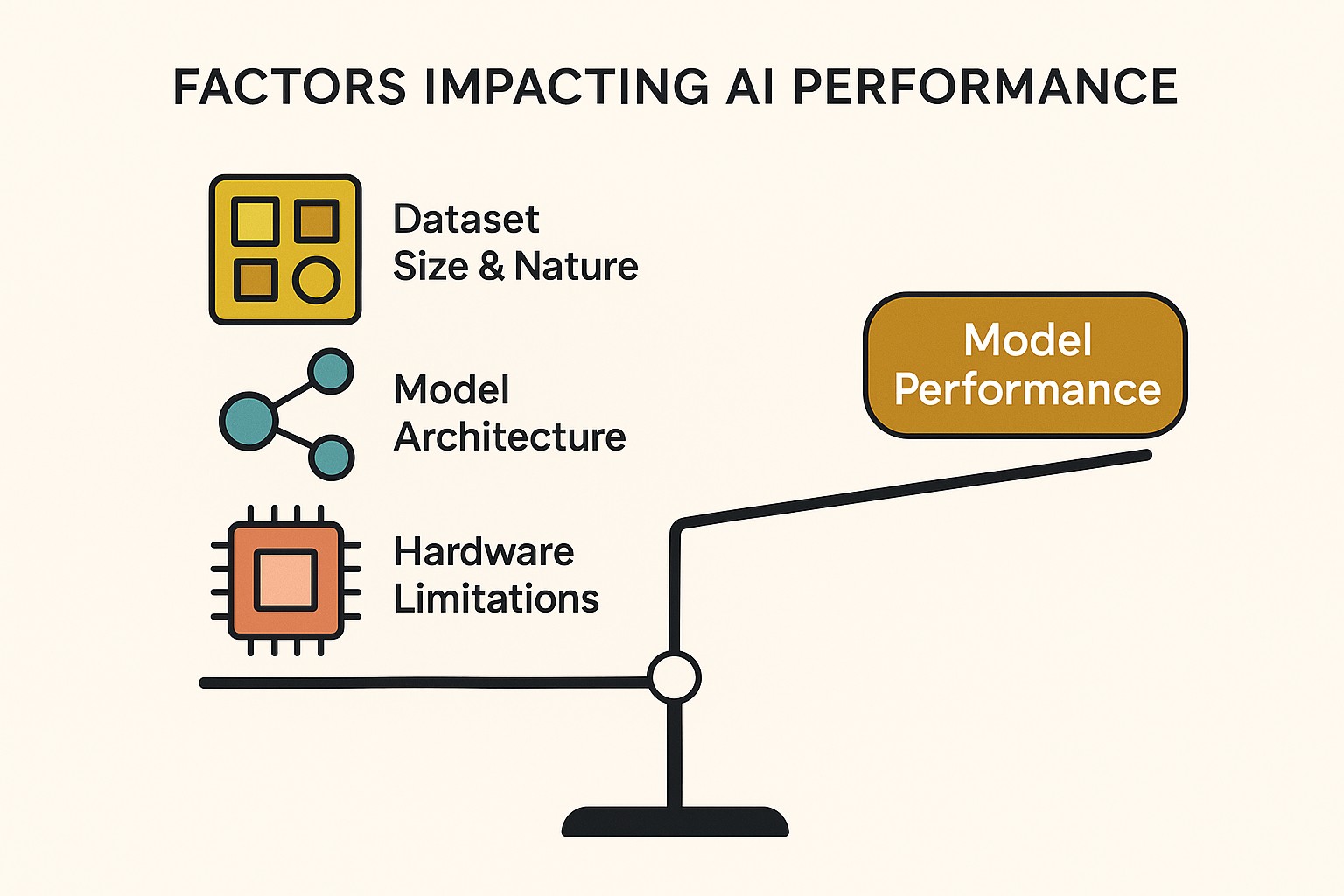

Selecting the right batch size is a key decision in training deep learning models, and making it early saves time because batch size influences nearly every part of the learning process. Changing the batch size often requires re-tuning several other hyperparameters, so late-stage changes can be resource-heavy and inefficient. There is no single batch size that works for every case, so it helps to understand the factors that influence the choice.

Dataset size and nature: For smaller datasets, processing everything in one go can work well and may even offer more stable updates. But for larger datasets, this becomes impractical due to memory and time constraints. In such cases, splitting the data into mini-batches makes training faster and easier on memory.

Model architecture: Some models, such as those used in computer vision or language processing, may respond better to specific batch size ranges. More complex architectures often benefit from stable gradients provided by moderate to large batch sizes.

Hardware limitations: Using larger batches demands more memory, as storing data, activations, and gradients requires additional system resources. Systems with high-end GPUs can support bigger batches, but this often increases hardware demands and computational costs, especially in cloud environments.
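A rough way to reason about the hardware constraint is to estimate the memory that grows with batch size and pick the largest candidate that fits a budget. The sketch below is a back-of-the-envelope model only: the function names, the per-sample value count, and the memory budget are hypothetical, and it ignores model parameters, optimizer state, and framework overhead, none of which scale with batch size.

```python
def estimate_batch_memory_mb(batch_size, values_per_sample, bytes_per_value=4):
    """Rough memory (in MiB) for per-sample tensors such as inputs and activations."""
    return batch_size * values_per_sample * bytes_per_value / (1024 ** 2)

def largest_batch_that_fits(budget_mb, values_per_sample,
                            candidates=(16, 32, 64, 128, 256)):
    """Return the largest candidate batch size whose estimate fits the budget, or None."""
    fitting = [bs for bs in candidates
               if estimate_batch_memory_mb(bs, values_per_sample) <= budget_mb]
    return max(fitting) if fitting else None

# Hypothetical numbers: 2 million float32 values stored per sample, 1 GiB budget.
best = largest_batch_that_fits(budget_mb=1024, values_per_sample=2_000_000)
print(best)   # 128
```

In practice the estimate should be confirmed by measuring memory use on the target hardware, since real frameworks allocate additional buffers.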

Conclusion

Batch size is one of the most important factors in deep learning, directly affecting how a neural network learns, how well the model generalizes, how stable the training process is, and how efficiently hardware resources are used. Choosing the right batch size early not only saves time but also leads to better training performance and more reliable results.

Batch size isn’t a one-size-fits-all setting, and it comes with its own set of challenges. Common issues include memory constraints, the need for frequent hyperparameter re-tuning, instability at very small or very large batch sizes, and the risk of poor generalization when not properly tuned. Additional challenges such as vanishing gradients, sensitivity to learning rate, computational bottlenecks, and diminishing returns at high batch sizes are also well known.

With the right strategy, including early testing, hardware-aware scaling, and careful adjustment of related hyperparameters, these challenges can be addressed effectively.