What are Contextual Bandits?

Team Thinkstack

May 29, 2025


Contextual bandits are a powerful and increasingly common machine learning framework, particularly suited to scenarios where dynamic decision-making and continuous learning from user interactions are essential.

From product recommendations and news feeds to ad targeting and email campaigns, users expect content tailored to their preferences, behaviors, and real-time needs. What users click, watch, or buy often depends on who they are and what they are doing in that moment. This is the kind of decision contextual bandits are designed to handle.

Contextual bandits are a class of machine learning algorithms designed for sequential decision-making under uncertainty, where only partial feedback is available and contextual information influences each decision.

At the core of a contextual bandit model is the idea of context-aware decision-making. It provides a structured way to learn which actions work best in different situations by balancing the need to exploit known good options and the need to explore potentially better ones. This setup is ideal for interactive systems that learn incrementally, such as recommendation engines or personalized content delivery platforms.

The term “bandit” comes from a gambling analogy, where a player faces a row of slot machines, often called “one-armed bandits,” and must figure out through trial and error which one offers the highest payoff. In the contextual bandit version, the gambler sees some side information, like the time of day or their previous results, before choosing a machine. This context helps them make smarter decisions over time.

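Unlike traditional supervised learning, where the correct answer for every training example is known, a contextual bandit has to decide with limited information: it only ever sees the outcome of the action it chose. This is a constant challenge in real-time systems that aim to personalize every experience.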

How Contextual Bandits Compare to Related Methods

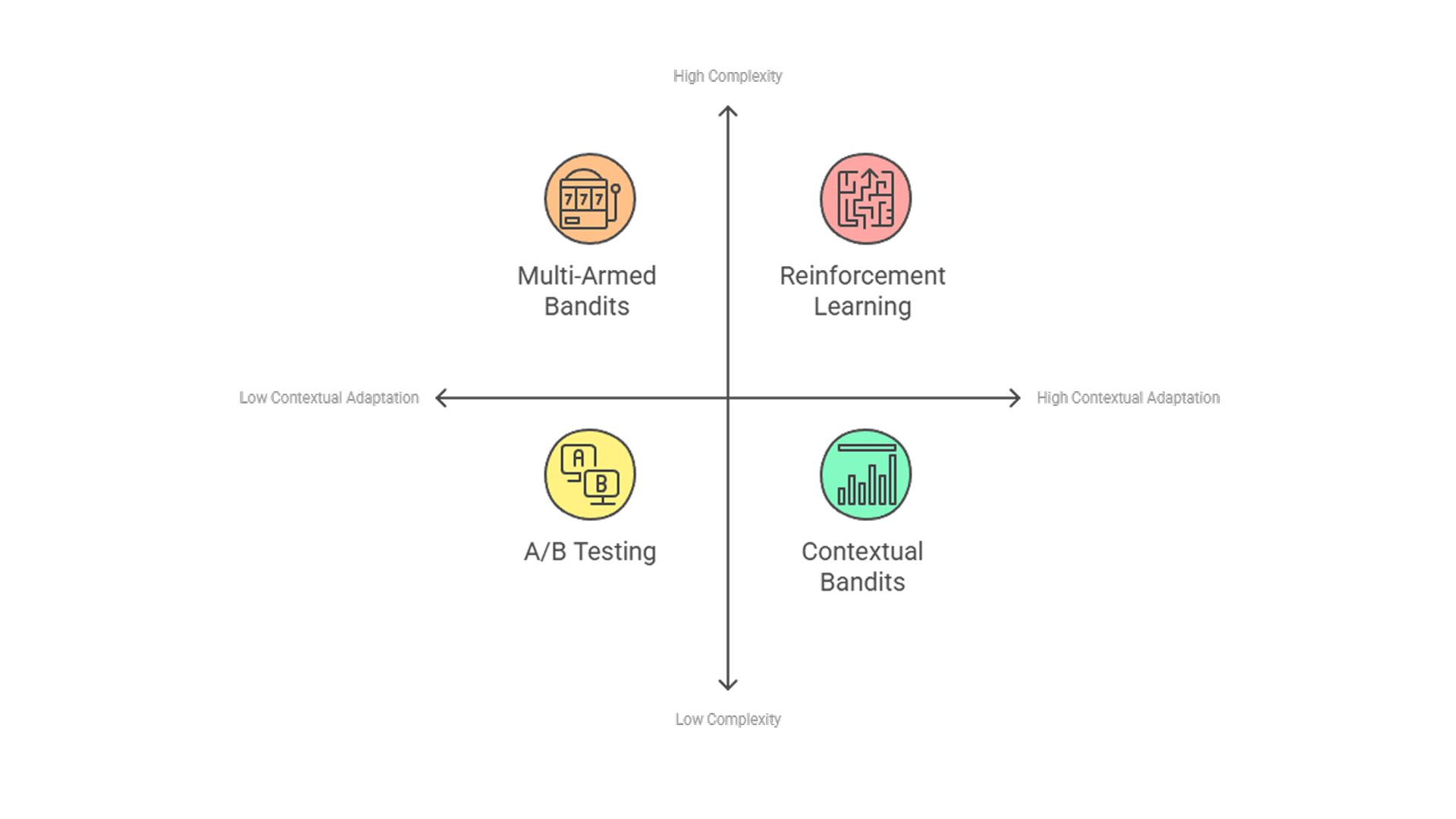

To understand contextual bandits clearly, it’s helpful to contrast them with a few related techniques:

- Multi-armed bandits (MABs): Multi-armed bandits were a common method before contextual bandits. Standard MABs assume no context: they pick the best overall action without considering differences between users or situations, so every user is treated the same. They work well in static environments but fall short when personalization is needed.

Running individual bandits for each user type could work in simple cases, but as context dimensions grow, like device, location, time, and behavior, this approach quickly becomes impractical and inefficient.

Contextual bandits handle this by incorporating context into the decision process, allowing the algorithm to adapt actions while continuously learning from recent feedback to improve future decisions.

- A/B testing: A/B testing splits traffic evenly across options and waits for long-term statistical results. While useful for static comparisons, it treats all users the same and cannot adapt to individual differences or changes in behavior over time. It continues sending traffic to underperforming options throughout the test period, regardless of early results.

In contrast, contextual bandits adapt in real time, using contextual information to personalize decisions from the start and continuously updating traffic allocation based on what performs best for each user or situation.

- Reinforcement learning (RL): While both contextual bandits and reinforcement learning belong to the broader class of sequential decision-making algorithms, they differ in complexity and objective. RL requires learning long-term strategies where each action may influence future states of the environment. In contrast, contextual bandits operate under the assumption that actions affect only immediate rewards and do not alter future contexts. This one-step interaction model makes contextual bandits significantly more efficient and practical for scenarios where decisions are independent and real-time adaptation is key.
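To make the contrast with context-free methods concrete, here is a small arithmetic sketch. The click-through rates, banner names, and traffic mix are invented for illustration and do not come from any real system. It compares the best reward a single "one action for everyone" choice can reach with the reward available when the action depends on the context.

```python
# Hypothetical click-through rates per (context, action); illustrative numbers only.
CLICK_RATE = {
    "mobile": {"banner_a": 0.10, "banner_b": 0.02},
    "desktop": {"banner_a": 0.02, "banner_b": 0.10},
}
# Assumed share of traffic coming from each context.
CONTEXT_SHARE = {"mobile": 0.5, "desktop": 0.5}


def best_single_action_value():
    """Expected reward of the best context-free choice (one action for all users)."""
    actions = CLICK_RATE["mobile"].keys()
    return max(
        sum(CONTEXT_SHARE[c] * CLICK_RATE[c][a] for c in CLICK_RATE)
        for a in actions
    )


def best_contextual_value():
    """Expected reward when the best action is chosen separately for each context."""
    return sum(CONTEXT_SHARE[c] * max(CLICK_RATE[c].values()) for c in CLICK_RATE)


if __name__ == "__main__":
    print("best single action:", round(best_single_action_value(), 4))
    print("best per-context:  ", round(best_contextual_value(), 4))
```

With these made-up numbers, either banner shown to everyone earns about 0.06 clicks per impression, while choosing per context earns about 0.10. That gap is what a context-free bandit or an A/B test cannot recover, no matter how long it runs.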

Core Components

A typical contextual bandit system includes four key elements: context, actions, rewards, and a policy that maps contexts to actions. The main goal is to maximize cumulative reward or, equivalently, minimize regret by learning to choose the best possible action for each context over time.

- Context space: The inputs observed before an action is taken, such as user data, session details, or environmental variables.

- Action space: A finite (or sometimes infinite) set of possible actions, also known as arms. These are the choices the algorithm can make, for example, showing an ad, recommending a product, or selecting a treatment.

- Reward function: An unknown mapping that assigns a numerical reward to each context-action pair. The feedback is received after taking an action. This could be a click, purchase, or some other measurable outcome.

- Policy: A strategy, learned over time, that maps each context to the action expected to yield the highest reward.
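The regret mentioned above can be written down in one common form. The notation here is an assumption added for illustration, not the article's own: $x_t$ is the context at round $t$, $a_t$ the action the policy chose, $\mathcal{A}$ the action space, and $r$ the reward.

$$
R(T) = \sum_{t=1}^{T} \Big( \mathbb{E}[\, r \mid x_t, a_t^{*} \,] - \mathbb{E}[\, r \mid x_t, a_t \,] \Big),
\qquad
a_t^{*} = \arg\max_{a \in \mathcal{A}} \mathbb{E}[\, r \mid x_t, a \,]
$$

Each term is the expected reward lost at round $t$ by not playing the best action for that context. Since the first expectation does not depend on the policy, minimizing $R(T)$ amounts to maximizing expected cumulative reward.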

How Contextual Bandits Work

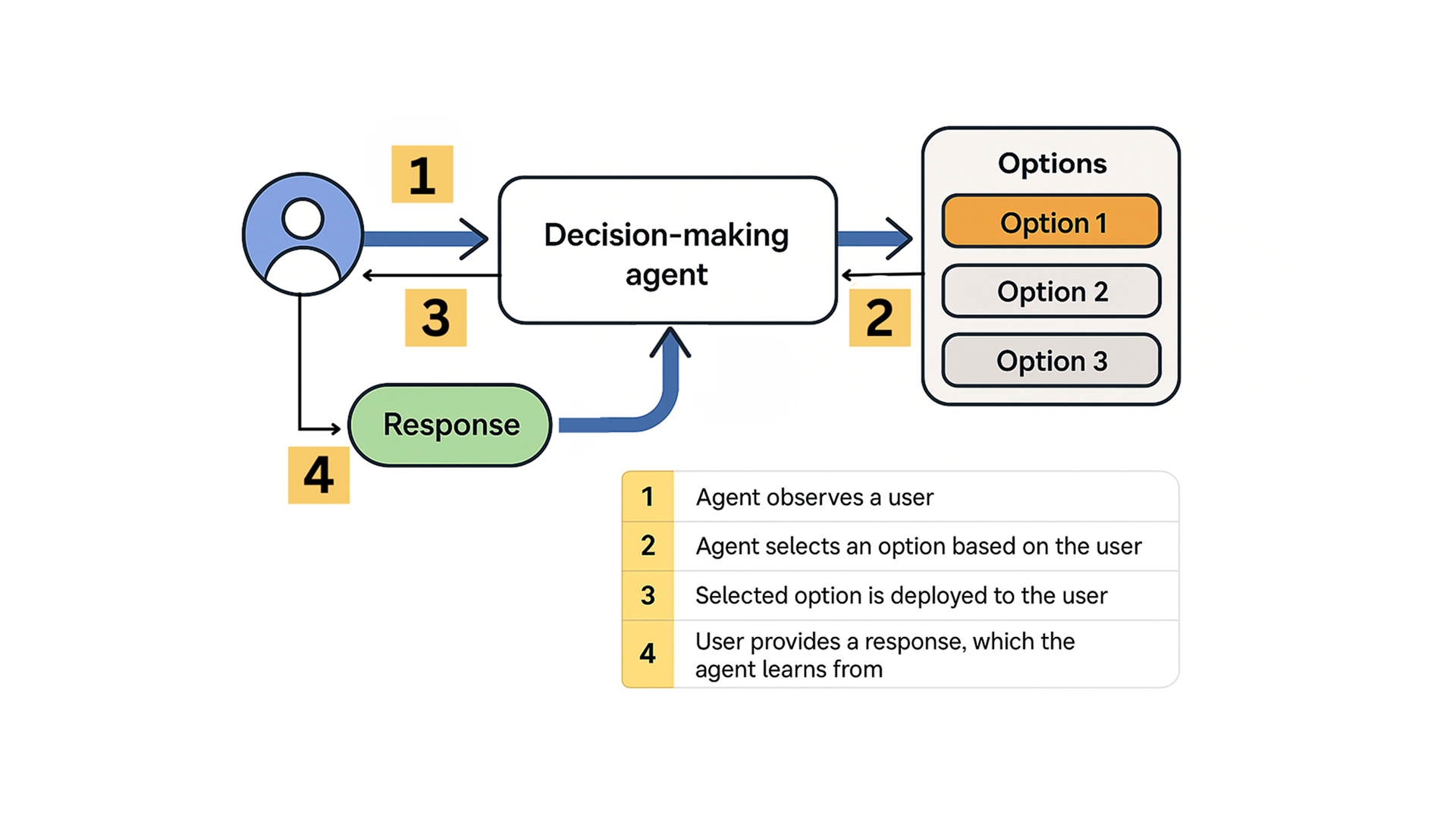

Contextual bandits operate through a repeated loop of decision-making where the system continuously observes, acts, learns, and adapts. At each iteration, the algorithm makes a decision based on available context, receives feedback on that choice, and uses it to improve future decisions, without ever seeing the outcomes of actions it didn’t take. This makes the learning process efficient, but also challenging due to the partial feedback nature of the problem.

1. Observe context: The system receives input data representing the current situation, which could include user demographics, browsing behavior, device type, or session data.

2. Select an action: Based on the context and its past learning, the algorithm selects one action from a predefined set. The goal is to choose the action that is expected to maximize reward given the current context.

3. Receive reward: The system observes the result of that action, such as a click or conversion, but gains no information about the rewards of actions not taken.

4. Update model: Using the context, chosen action, and observed reward, the algorithm updates its internal model. This refinement helps it make more accurate decisions in future, similar contexts.
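The four steps above can be sketched as a short loop. This is a minimal illustration, not a production design: it assumes a small set of discrete contexts, keeps one running mean per (context, action) pair, and uses ε-greedy selection. The class name, the simulated environment, and its click rates are all hypothetical.

```python
import random
from collections import defaultdict


class EpsilonGreedyBandit:
    """Tabular contextual bandit: one running mean reward per (context, action)."""

    def __init__(self, actions, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)   # (context, action) -> times chosen
        self.means = defaultdict(float)  # (context, action) -> mean observed reward

    def select(self, context):
        # Explore with probability epsilon, otherwise pick the best-known action.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.means[(context, a)])

    def update(self, context, action, reward):
        # Incremental mean update, applied to the chosen action only.
        key = (context, action)
        self.counts[key] += 1
        self.means[key] += (reward - self.means[key]) / self.counts[key]


def simulate(rounds=5000, seed=0):
    """Run the observe / select / reward / update loop on a made-up environment."""
    env_rng = random.Random(seed + 1)
    click_rate = {  # hypothetical ground truth, hidden from the learner
        ("mobile", "video"): 0.30, ("mobile", "article"): 0.10,
        ("desktop", "video"): 0.10, ("desktop", "article"): 0.30,
    }
    bandit = EpsilonGreedyBandit(["video", "article"], epsilon=0.1, seed=seed)
    total = 0
    for _ in range(rounds):
        context = env_rng.choice(["mobile", "desktop"])   # 1. observe context
        action = bandit.select(context)                   # 2. select an action
        clicked = env_rng.random() < click_rate[(context, action)]
        reward = 1 if clicked else 0                      # 3. receive reward
        bandit.update(context, action, reward)            # 4. update model
        total += reward
    return bandit, total / rounds


if __name__ == "__main__":
    learned, average_reward = simulate()
    print("average reward:", round(average_reward, 3))
```

Note that `update` only ever touches the estimate for the action that was actually played, which is the partial-feedback property described above. A table like this stops being practical once contexts are high-dimensional, which is where the reward models listed next come in.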

To guide decisions, contextual bandits use models that estimate expected rewards for each action given the context:

- Linear models (e.g., LinUCB): Fast and efficient, assuming a linear relationship between context and reward.

- Decision trees: Segment the context space and assign actions based on learned patterns.

- Neural networks: Handle complex, non-linear relationships in high-dimensional data.
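As an illustration of the linear option, here is a minimal sketch in the style of the disjoint LinUCB variant, which keeps one ridge-regression model per action. Several details are assumptions made for this example: the exploration weight `alpha`, the identity regularizer, the helper names, and the use of the Sherman-Morrison identity to maintain the inverse matrix in plain Python rather than with a linear algebra library.

```python
import math


class LinUCBArm:
    """Ridge-regression state for one action (arm)."""

    def __init__(self, dim):
        # Inverse of A = I (identity regularizer); b accumulates reward-weighted contexts.
        self.A_inv = [[1.0 if i == j else 0.0 for j in range(dim)] for i in range(dim)]
        self.b = [0.0] * dim

    def _matvec(self, v):
        return [sum(row[j] * v[j] for j in range(len(v))) for row in self.A_inv]

    def ucb(self, x, alpha):
        """Estimated reward for context x plus an uncertainty bonus."""
        theta = self._matvec(self.b)                      # theta = A^-1 b
        mean = sum(t * xi for t, xi in zip(theta, x))     # theta . x
        variance = sum(xi * v for xi, v in zip(x, self._matvec(x)))  # x^T A^-1 x
        return mean + alpha * math.sqrt(max(variance, 0.0))

    def update(self, x, reward):
        # Sherman-Morrison: (A + x x^T)^-1 = A^-1 - (A^-1 x)(A^-1 x)^T / (1 + x^T A^-1 x)
        a_inv_x = self._matvec(x)
        denom = 1.0 + sum(xi * v for xi, v in zip(x, a_inv_x))
        n = len(x)
        for i in range(n):
            for j in range(n):
                self.A_inv[i][j] -= a_inv_x[i] * a_inv_x[j] / denom
        self.b = [bi + reward * xi for bi, xi in zip(self.b, x)]


def select_arm(arms, x, alpha=1.0):
    """Pick the arm name with the highest upper confidence bound for context x."""
    return max(arms, key=lambda name: arms[name].ucb(x, alpha))


if __name__ == "__main__":
    arms = {"ad_a": LinUCBArm(2), "ad_b": LinUCBArm(2)}  # hypothetical action names
    arms["ad_a"].update([1.0, 0.0], reward=1.0)
    print(select_arm(arms, [1.0, 0.0]))
```

The bonus term shrinks along directions of the context space an arm has already seen, so an arm is tried either because its estimated reward is high or because the model is still unsure about it in that context.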

Challenges in Contextual Bandits

Despite their strengths, contextual bandits come with practical challenges that affect performance and deployment:

- Exploration vs. exploitation: A key challenge is balancing exploration (trying new actions to gather information) with exploitation (choosing the best-known option). Common strategies for managing this trade-off include ε-greedy, which explores at random with a small probability ε; Upper Confidence Bound (UCB), which favors actions with a high estimated reward or high uncertainty; and Thompson Sampling, a Bayesian approach that samples from posterior reward distributions to balance both goals naturally.

- Partial feedback: The system only observes the reward for the action it chose, with no insight into what would have happened had it chosen differently. This makes learning slower and less certain than in full-feedback settings.

- High-dimensional contexts: In real-world scenarios, context often includes many features, such as location, device, behavior, and time. Handling this efficiently requires models that can generalize well without overfitting.

- Non-stationarity: User behavior and context distributions change over time. Algorithms must adapt continuously to remain effective in dynamic environments.

- Cold start: Early in deployment or when encountering new users, the algorithm may lack enough data to make confident decisions. Careful initialization or hybrid approaches are often needed to mitigate this.

- Fairness and bias: When decisions are based on context, there's a risk of reinforcing existing biases. Models must be monitored and designed to ensure equitable outcomes across user groups.
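Of the exploration strategies named above, Thompson Sampling is perhaps the easiest to sketch for click-style (0 or 1) rewards. The example below is a simplified illustration that assumes discrete contexts and an independent Beta posterior per (context, action) pair. The class name and the uniform Beta(1, 1) prior are choices made for this sketch, not something the article prescribes.

```python
import random
from collections import defaultdict


class BetaThompsonSampler:
    """Thompson Sampling with a Beta posterior per (context, action) pair."""

    def __init__(self, actions, seed=0):
        self.actions = list(actions)
        self.rng = random.Random(seed)
        # Beta(1, 1) prior: with no data yet, every action is equally plausible.
        self.successes = defaultdict(lambda: 1.0)
        self.failures = defaultdict(lambda: 1.0)

    def select(self, context):
        # Draw one plausible click rate per action and play the highest draw.
        def draw(action):
            key = (context, action)
            return self.rng.betavariate(self.successes[key], self.failures[key])

        return max(self.actions, key=draw)

    def update(self, context, action, reward):
        # Binary reward: 1 counts as a success, 0 as a failure.
        key = (context, action)
        if reward:
            self.successes[key] += 1.0
        else:
            self.failures[key] += 1.0


if __name__ == "__main__":
    sampler = BetaThompsonSampler(["offer_a", "offer_b"])  # hypothetical actions
    for _ in range(200):
        sampler.update("new_user", "offer_a", 1)
        sampler.update("new_user", "offer_b", 0)
    print(sampler.select("new_user"))
```

Because a wide posterior produces widely varying draws, poorly understood actions still get picked occasionally, and the exploration fades on its own as evidence accumulates. The uniform prior also gives a simple default for the cold-start case, where a new context has no data at all.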

Conclusion

Contextual bandits offer a powerful, efficient framework for making personalized decisions in real time using limited feedback. By combining adaptability, context-awareness, and continuous learning, they bridge the gap between supervised learning and full reinforcement learning. Despite their challenges, they remain a practical and essential tool for systems that need to optimize user experience dynamically, especially in environments where every decision matters.