What is Data Augmentation?

Team Thinkstack

June 26, 2025


Data augmentation is a set of techniques that artificially expand a training dataset by generating modified versions of existing samples or synthesizing new ones. These operations create a richer input space without requiring additional labeled examples, functioning as an implicit form of regularization. By diversifying the data, augmentation discourages models from memorizing idiosyncratic patterns in the training set and pushes them to learn more robust, generalizable features. It directly addresses two core challenges in machine learning. First, by exposing models to a wider spectrum of plausible input variations, such as shifts in orientation, lighting, or background clutter, it strengthens resilience to real-world distortions and improves performance on unseen data. Second, by reducing fixation on spurious correlations in a limited dataset, it mitigates overfitting and steers models toward the underlying task structure.

Key benefits include:

- Overcoming limited labeled data & class imbalance

Generates additional examples for under-represented classes via simple transformations or targeted methods like SMOTE, yielding more balanced datasets. This is critical in domains such as medical imaging or niche applications, where expert annotation is costly and positive samples are scarce.

- Preventing overfitting

Acts as an implicit regularizer by continually presenting novel variants of each training sample. Augmented data prevents networks from fixating on noise or spurious correlations, reducing generalization error on validation and test sets.

- Domain adaptation & privacy

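Simulates target-domain conditions, such as realistic noise, blur, or lighting changes, to narrow the distribution gap between training data (including synthetic data) and real-world data. Synthetic and transformed samples can also preserve key statistical properties while reducing direct exposure of sensitive records, supporting privacy-conscious model training and data sharing, although transformation alone does not guarantee privacy.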

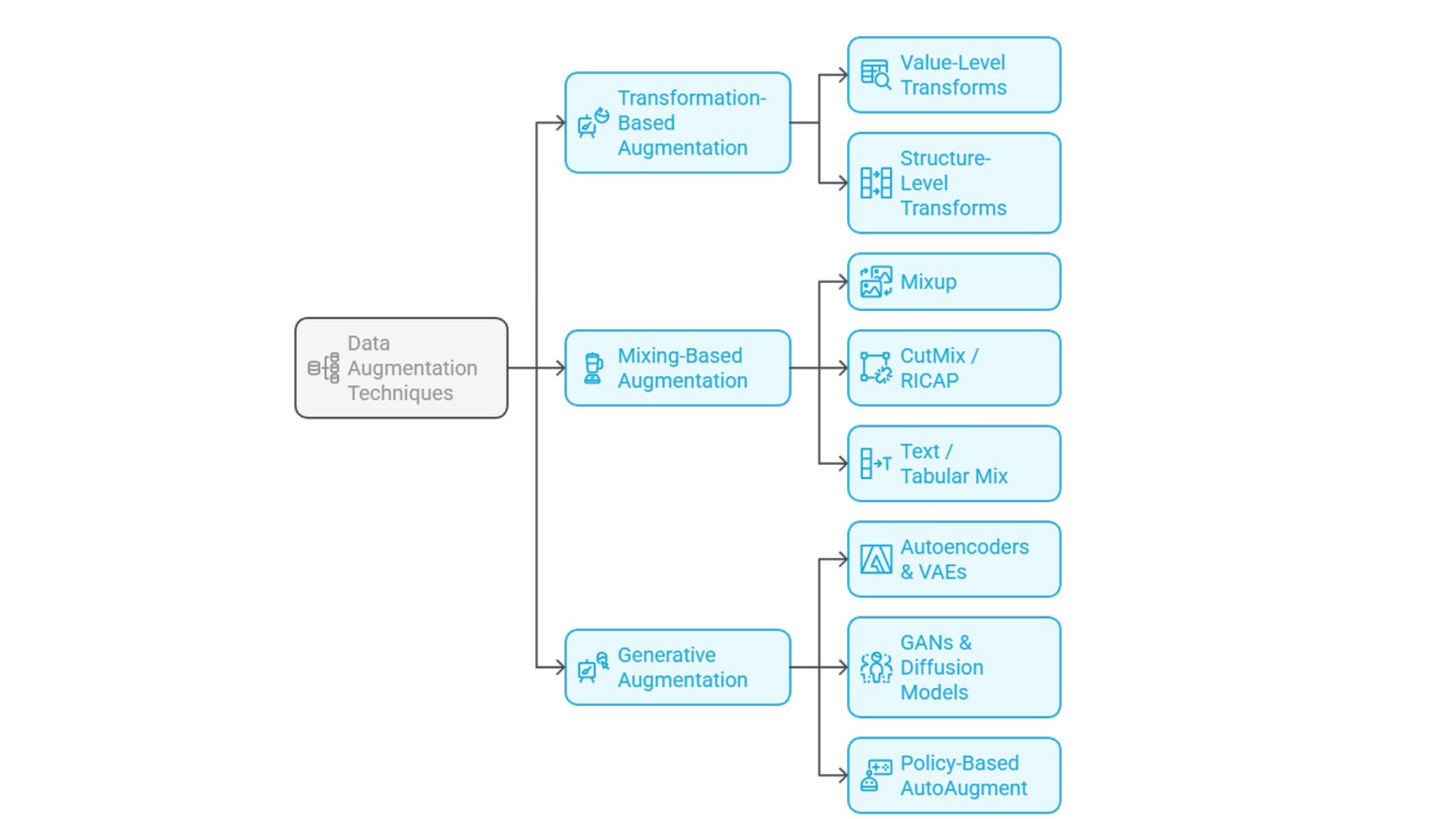

Data Augmentation Techniques

Data augmentation methods fall into three main categories: transformation-based, mixing-based, and generative. Each is designed to increase dataset diversity without requiring new labels.

Transformation-Based Augmentation

Applies controlled modifications to individual samples while preserving their original labels. This category is divided into:

Value-Level Transforms

Perturb the raw data values to simulate realistic variations:

- Images: Inject noise; adjust brightness, contrast, or hue; and apply kernel filters such as blurring or sharpening. These operations train models to ignore photometric inconsistencies.

- Text: Replace terms with synonyms, or insert and delete tokens at random. Such edits diversify wording while usually preserving meaning.

- Tabular/time-series: Add jitter to numerical fields, scale or warp magnitudes. This mimics measurement noise and sampling variability.
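The value-level transforms above can be sketched in a few lines of NumPy. This is a minimal illustration, assuming float images scaled to [0, 1], pre-tokenized text, and 1-D numeric series; the function names (`add_gaussian_noise`, `synonym_replace`, `jitter_and_scale`, and so on) and the toy synonym table are hypothetical, not a library API. Kernel filters and hue shifts are omitted for brevity.

```python
import numpy as np

# Hypothetical helpers illustrating value-level transforms (not a library API).

def add_gaussian_noise(image, std, rng):
    """Add zero-mean Gaussian noise to a float image in [0, 1], then clip back to range."""
    noisy = image + rng.normal(0.0, std, size=image.shape)
    return np.clip(noisy, 0.0, 1.0)

def adjust_brightness_contrast(image, brightness, contrast):
    """Scale contrast around the image mean, shift brightness additively, then clip."""
    mean = image.mean()
    return np.clip((image - mean) * contrast + mean + brightness, 0.0, 1.0)

def synonym_replace(tokens, synonyms, p, rng):
    """With probability p, replace each token that has synonyms with a randomly chosen one."""
    out = []
    for t in tokens:
        if t in synonyms and rng.random() < p:
            options = synonyms[t]
            out.append(options[rng.integers(len(options))])
        else:
            out.append(t)
    return out

def random_deletion(tokens, p, rng):
    """Delete each token with probability p; always keep at least one token."""
    kept = [t for t in tokens if rng.random() >= p]
    return kept if kept else [tokens[rng.integers(len(tokens))]]

def jitter_and_scale(series, jitter_std, scale_std, rng):
    """Multiply a series by one random scale factor and add per-step Gaussian jitter."""
    scale = rng.normal(1.0, scale_std)
    return series * scale + rng.normal(0.0, jitter_std, size=series.shape)

rng = np.random.default_rng(0)
img = rng.random((32, 32, 3))  # stand-in RGB image with values in [0, 1)
noisy = add_gaussian_noise(img, std=0.05, rng=rng)
brighter = adjust_brightness_contrast(img, brightness=0.1, contrast=1.2)

tokens = "the quick brown fox jumps".split()
print(synonym_replace(tokens, {"quick": ["fast", "speedy"]}, p=1.0, rng=rng))
print(random_deletion(tokens, p=0.2, rng=rng))

series = np.sin(np.linspace(0, 2 * np.pi, 100))
scaled = jitter_and_scale(series, jitter_std=0.03, scale_std=0.1, rng=rng)
```

Passing an explicit `np.random.Generator` into every function, rather than relying on global state, keeps each augmentation reproducible from a single seed.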

Structure-Level Transforms

Modify the positional or sequential ordering of data components:

- Images: Rotate, flip, crop, translate, or shear the frame. Models learn that object identity is invariant under these geometric shifts.

- Text: Swap sentence fragments, convert active to passive voice, or apply back-translation. These changes vary the syntax while preserving semantics.

- Sequences: Extract sliding windows, apply time-warping to locally stretch or compress the time axis, or permute segments. This exposes models to different temporal contexts.
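A few of the structure-level transforms above need nothing more than array slicing. The sketch below, assuming H x W x C NumPy images and 1-D series, covers horizontal flips, 90-degree rotations, random crops, translation via zero-padding and cropping, and sliding windows. Arbitrary-angle rotation and shear require interpolation and are left out; all function names are hypothetical.

```python
import numpy as np

def random_flip_rotate(image, rng):
    """Randomly flip horizontally, then rotate by a random multiple of 90 degrees."""
    if rng.random() < 0.5:
        image = image[:, ::-1]
    k = int(rng.integers(0, 4))  # number of 90-degree turns
    return np.rot90(image, k=k, axes=(0, 1))

def random_crop(image, crop_h, crop_w, rng):
    """Take a random crop_h x crop_w window; assumes the image is at least that large."""
    h, w = image.shape[:2]
    top = int(rng.integers(0, h - crop_h + 1))
    left = int(rng.integers(0, w - crop_w + 1))
    return image[top:top + crop_h, left:left + crop_w]

def pad_and_crop(image, pad, rng):
    """Translate by up to `pad` pixels: zero-pad the borders, then crop back to the original size."""
    h, w = image.shape[:2]
    padded = np.pad(image, ((pad, pad), (pad, pad), (0, 0)))
    return random_crop(padded, h, w, rng)

def sliding_windows(series, window, stride):
    """Slice a 1-D series into overlapping windows; each window inherits the series label."""
    starts = range(0, len(series) - window + 1, stride)
    return np.stack([series[s:s + window] for s in starts])

rng = np.random.default_rng(42)
img = rng.random((32, 48, 3))  # stand-in image
flipped = random_flip_rotate(img, rng)
crop = random_crop(img, 24, 24, rng)
shifted = pad_and_crop(img, 4, rng)
windows = sliding_windows(np.arange(10), window=4, stride=2)
print(windows.shape)  # (4, 4)
```

Note that a 90-degree rotation swaps height and width for non-square images, which matters if downstream code expects a fixed input shape.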

Mixing-Based Augmentation

Creates hybrid samples by combining two or more instances, blending inputs and labels for smoother decision surfaces:

- Mixup

Linearly interpolates pairs of inputs (pixels or embeddings) and their labels with the same mixing weight. Models are encouraged to behave smoothly between training examples rather than memorize them exactly.

- CutMix / RICAP

Cuts a patch from one image and pastes it onto another (RICAP combines crops from four images), weighting the labels by patch area. This forces attention to multiple regions and reduces reliance on a single object part.

- Text / Tabular Mix

Interpolates feature vectors or applies SMOTE to minority-class records. New samples reflect intermediate characteristics of combined instances.
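The three mixing strategies can be sketched with NumPy alone, assuming one-hot label vectors and H x W x C images. Mixup draws its weight from a Beta(alpha, alpha) distribution, as in the original Mixup formulation; the CutMix box sampling follows the common recipe of a box covering roughly a (1 - lam) fraction of the image, with the label weight recomputed after clipping. `smote_like` is a simplified stand-in for SMOTE, not the full algorithm (imbalanced-learn provides a complete implementation); all names here are hypothetical.

```python
import numpy as np

def mixup(x1, y1, x2, y2, alpha, rng):
    """Blend two inputs and their one-hot labels with one weight lam ~ Beta(alpha, alpha)."""
    lam = rng.beta(alpha, alpha)
    return lam * x1 + (1 - lam) * x2, lam * y1 + (1 - lam) * y2

def cutmix(x1, y1, x2, y2, alpha, rng):
    """Paste a random box from x2 into x1 and weight the labels by the pasted area."""
    h, w = x1.shape[:2]
    lam = rng.beta(alpha, alpha)
    cut_h, cut_w = int(h * np.sqrt(1 - lam)), int(w * np.sqrt(1 - lam))
    cy, cx = rng.integers(h), rng.integers(w)  # box centre
    top, bottom = max(cy - cut_h // 2, 0), min(cy + cut_h // 2, h)
    left, right = max(cx - cut_w // 2, 0), min(cx + cut_w // 2, w)
    mixed = x1.copy()
    mixed[top:bottom, left:right] = x2[top:bottom, left:right]
    # Recompute the weight from the actual box, since clipping at the border can shrink it.
    lam_adj = 1 - (bottom - top) * (right - left) / (h * w)
    return mixed, lam_adj * y1 + (1 - lam_adj) * y2

def smote_like(minority, n_new, k, rng):
    """Create points on segments between a random minority sample and one of its
    k nearest minority neighbours (Euclidean distance). Requires k < len(minority)."""
    dists = np.linalg.norm(minority[:, None, :] - minority[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)  # a point is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]
    new_points = []
    for _ in range(n_new):
        i = rng.integers(len(minority))
        j = neighbours[i, rng.integers(k)]
        gap = rng.random()  # position along the segment from sample i to neighbour j
        new_points.append(minority[i] + gap * (minority[j] - minority[i]))
    return np.array(new_points)

rng = np.random.default_rng(0)
x1, x2 = np.zeros((32, 32, 3)), np.ones((32, 32, 3))
y1, y2 = np.array([1.0, 0.0]), np.array([0.0, 1.0])
mix_x, mix_y = mixup(x1, y1, x2, y2, alpha=0.2, rng=rng)
cut_x, cut_y = cutmix(x1, y1, x2, y2, alpha=1.0, rng=rng)
minority = rng.normal(size=(20, 4))  # stand-in minority-class feature vectors
synthetic = smote_like(minority, n_new=50, k=5, rng=rng)
```

Because SMOTE-style samples lie on segments between existing minority points, they keep the minority label and stay inside the region those points already span.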

Generative Augmentation

Samples entirely new examples from a learned approximation of the data distribution:

- Autoencoders & VAEs

Encode samples into a latent space, perturb the latent codes slightly, and decode them, yielding variants that stay close to the original data manifold.

- GANs & Diffusion Models

Train generative models to produce realistic images, tabular entries, or time-series traces that are then added to the training set.

- Policy-Based AutoAugment

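Strictly speaking a policy-search method rather than a generative model: an automated search (reinforcement learning in the original AutoAugment) discovers effective sequences of transformations, together with their application probabilities and magnitudes, based on validation performance, tailoring augmentation to the specific dataset.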
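The encode, perturb, decode idea behind autoencoder-based augmentation can be shown without a deep learning framework by using PCA as a linear autoencoder. This is a deliberate simplification, assuming tabular data that lies near a low-dimensional subspace: a real VAE learns a nonlinear encoder and decoder, and GANs or diffusion models need far more code than a short sketch allows. The function names are hypothetical.

```python
import numpy as np

def fit_linear_autoencoder(data, latent_dim):
    """Fit a linear 'autoencoder' with PCA: the top principal directions act as encoder and decoder."""
    mean = data.mean(axis=0)
    _, _, vt = np.linalg.svd(data - mean, full_matrices=False)
    components = vt[:latent_dim]  # orthonormal rows, shape (latent_dim, n_features)
    latent_std = ((data - mean) @ components.T).std(axis=0)  # spread of each latent dimension
    return mean, components, latent_std

def encode(x, mean, components):
    return (x - mean) @ components.T

def decode(z, mean, components):
    return z @ components + mean

def latent_perturb(x, mean, components, latent_std, noise_scale, rng):
    """Encode, add Gaussian noise scaled to the training latent spread, then decode."""
    z = encode(x, mean, components)
    z_noisy = z + rng.normal(size=z.shape) * noise_scale * latent_std
    return decode(z_noisy, mean, components)

rng = np.random.default_rng(0)
# Toy data: points near a 2-D plane embedded in 5-D space, plus a little noise.
basis = rng.normal(size=(2, 5))
data = rng.normal(size=(200, 2)) @ basis + 0.01 * rng.normal(size=(200, 5))
mean, components, latent_std = fit_linear_autoencoder(data, latent_dim=2)
augmented = latent_perturb(data[:10], mean, components, latent_std, noise_scale=0.1, rng=rng)
```

Every decoded variant lies on the learned subspace, which is the linear analogue of "following the original data manifold"; `noise_scale` controls how far variants drift from their source samples.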

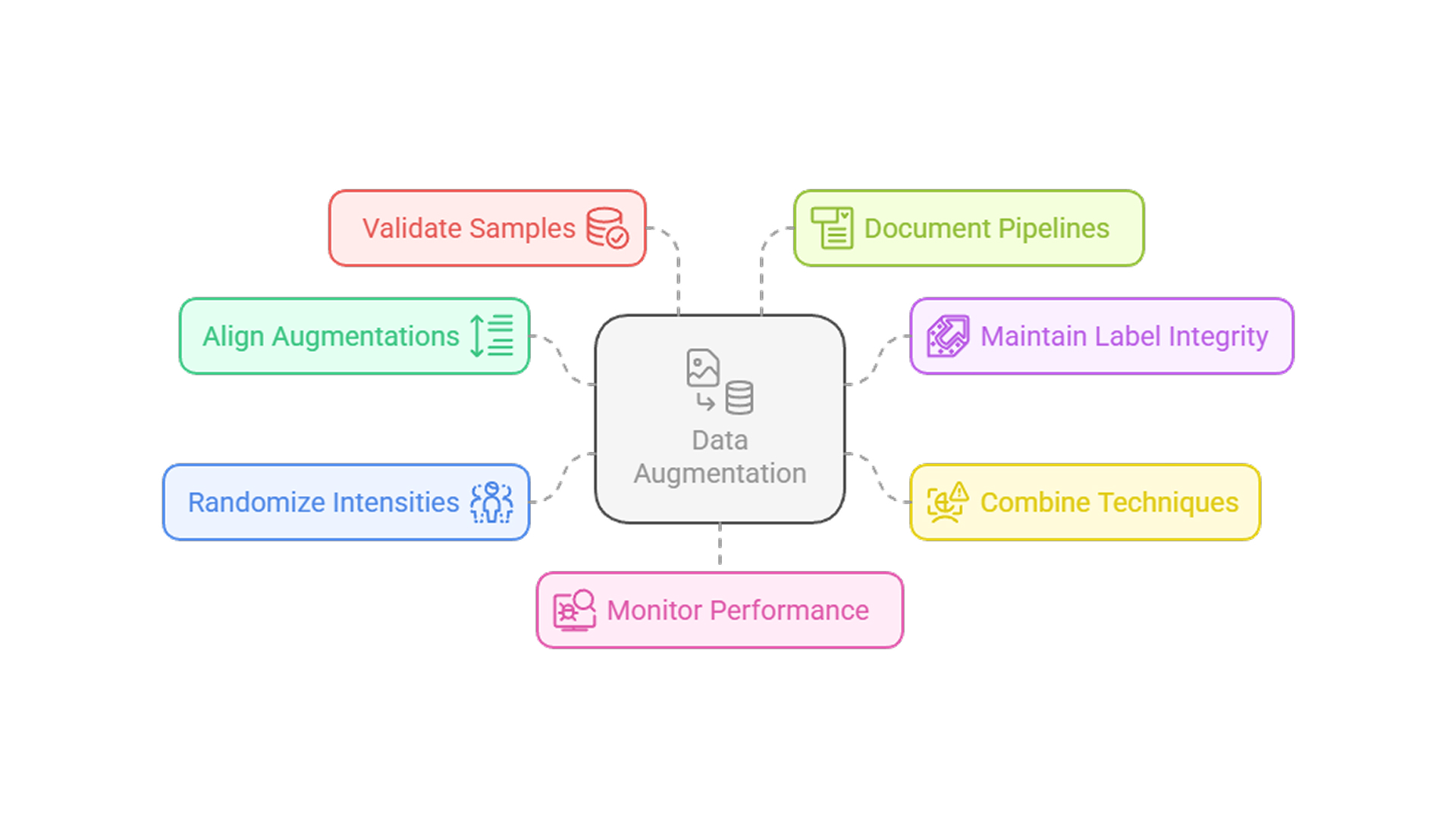

Best Practices in Data Augmentation

- Align augmentations to the task and data

Augmentation transformations should reflect the variations expected in deployment inputs, so that synthetic samples remain representative of what the model will actually encounter.

- Maintain label integrity

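Each operation should preserve or correctly adjust ground-truth labels. For example, rotating a handwritten "6" by 180 degrees turns it into a "9", and in detection or segmentation tasks geometric transforms must also be applied to bounding boxes and masks. Noisy targets can destabilize training and reduce accuracy.

- Combine multiple techniques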

Integrating diverse augmentation methods addresses different sources of variability, broadening coverage of the input space and reducing overfitting to any single perturbation.

- Randomize and calibrate intensities

Controlled application probabilities and bounded transform magnitudes prevent repetitive artifacts and maintain sample realism, supporting stable feature learning.

- Validate augmented samples

Routine checks of augmentation outputs safeguard dataset fidelity, catching corrupted or unrealistic samples before they degrade model performance.

- Document and seed pipelines

Logging augmentation settings and fixing random seeds makes results reproducible, streamlines troubleshooting, and allows fair comparison of different augmentation methods.

- Monitor performance continuously

Ongoing evaluation of validation metrics and robustness tests quickly detects augmentation-induced issues, enabling timely adjustments to the augmentation pipeline.
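Several of these practices (randomized, bounded intensities; validation of outputs; logged, seeded configuration) can be combined in one small pipeline. The sketch below is an illustration under stated assumptions: float images in [0, 1], a hypothetical `CONFIG` dictionary in which each transform has an application probability `p` and a magnitude range, and hypothetical helper names. It is not the API of any particular augmentation library.

```python
import json
import numpy as np

# Hypothetical configuration: each transform has an application probability "p"
# and a bounded magnitude range [low, high].
CONFIG = {
    "seed": 1234,
    "transforms": [
        {"name": "brightness", "p": 0.5, "magnitude": [-0.1, 0.1]},
        {"name": "noise", "p": 0.3, "magnitude": [0.0, 0.05]},
        {"name": "hflip", "p": 0.5, "magnitude": [0.0, 0.0]},  # magnitude unused
    ],
}

def apply_transform(name, image, magnitude, rng):
    """Apply one named transform to an H x W x C float image."""
    if name == "brightness":
        return image + magnitude
    if name == "noise":
        return image + rng.normal(0.0, magnitude, size=image.shape)
    if name == "hflip":
        return image[:, ::-1]
    raise ValueError(f"unknown transform: {name}")

def augment(image, config, rng):
    """Apply each transform with probability p, drawing its magnitude uniformly within bounds."""
    out = image
    for spec in config["transforms"]:
        if rng.random() < spec["p"]:
            low, high = spec["magnitude"]
            out = apply_transform(spec["name"], out, rng.uniform(low, high), rng)
    return np.clip(out, 0.0, 1.0)  # keep pixel values in a realistic range

def validate(original, augmented):
    """Sanity checks: shape preserved, no NaN or inf, values inside [0, 1]."""
    return (augmented.shape == original.shape
            and bool(np.isfinite(augmented).all())
            and augmented.min() >= 0.0 and augmented.max() <= 1.0)

def run(config, n=8):
    """Seed everything from the logged config so the run can be reproduced exactly."""
    print(json.dumps(config))  # record the exact settings with the experiment
    rng = np.random.default_rng(config["seed"])
    image = rng.random((16, 16, 3))  # stand-in for a real training image
    return image, [augment(image, config, rng) for _ in range(n)]

image, batch = run(CONFIG)
rejected = [i for i, b in enumerate(batch) if not validate(image, b)]
```

Keeping every setting in one serializable dictionary means the logged JSON is sufficient to rerun the identical augmentation sequence, which makes comparisons between augmentation policies much easier to trust.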

Conclusion

Data augmentation enlarges the training set with realistic variations, which typically improves model robustness and generalization. It underpins advances across computer vision (classification, detection, segmentation), natural language processing (translation, classification, entity recognition), audio and speech recognition, medical imaging, autonomous systems, and more, especially where labeled data is scarce or sensitive.

At the same time, unchecked augmentation can reinforce dataset biases, introduce unrealistic artifacts, and incur significant computational overhead. These risks can be mitigated by selecting transforms that match deployment conditions, enforcing strict label preservation, validating augmented samples, tuning policies automatically, and monitoring performance on perturbed data. Combining complementary augmentation techniques with these safeguards often yields the most resilient results for building reliable, scalable models when data remain limited.
