What Is a Discriminative Model?

Team Thinkstack

June 16, 2025


Nearly everything we interact with today, whether it’s personalized recommendations, spam filtering in email, voice assistants like Alexa, or fraud detection, relies on machine learning. It plays a foundational role in modern AI, especially in fields like natural language processing (NLP), computer vision, and predictive analytics.

At the heart of many of these systems are discriminative models, sometimes referred to as conditional models. These are machine learning algorithms designed to predict outcomes by learning the relationship between inputs and outputs, specifically the conditional probability of a label (Y) given a set of input features (X), written as P(Y|X).

Discriminative models are named for their primary function: discriminating between classes by learning what separates one category from another. Training involves finding the parameters that best differentiate outcomes, as in logistic regression, SVMs, and neural networks.

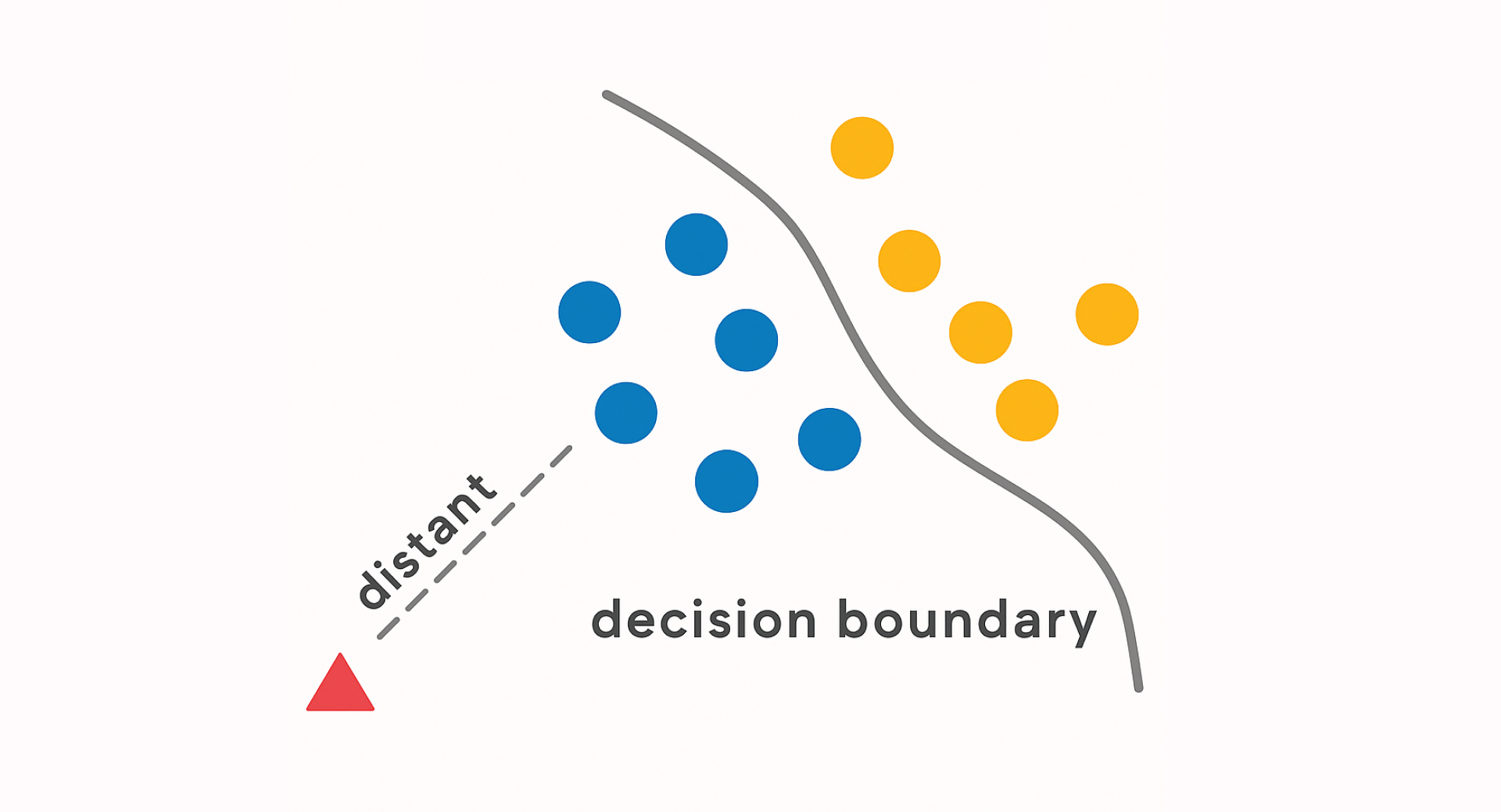

Instead of modeling how data is generated, discriminative models estimate P(Y|X) directly and draw boundaries that separate classes based on patterns in the data. This direct approach lets them classify or predict outcomes by focusing purely on the input-output relationship. Discriminative models are highly effective for classification and regression tasks where accuracy and speed are critical. They are widely used in machine learning and AI for several key reasons:

- Higher predictive accuracy: They learn the decision boundary between classes directly and often outperform generative models in classification and regression tasks.

- Efficiency: By skipping the modeling of how data is generated, they require less computational overhead, making them faster to train and use.

- Simplicity: Because they make fewer assumptions about the data, these models are easier to implement and adapt across various problems.

- Robustness: They often handle noise and outliers better, reducing the likelihood of errors from unusual inputs.

- Scalability: As more labeled data becomes available, discriminative models become more powerful without needing to redesign the system.

- Generalizability: The ability to perform well across domains like finance, healthcare, and e-commerce makes them ideal for real-world applications.

Common Types of Discriminative Models

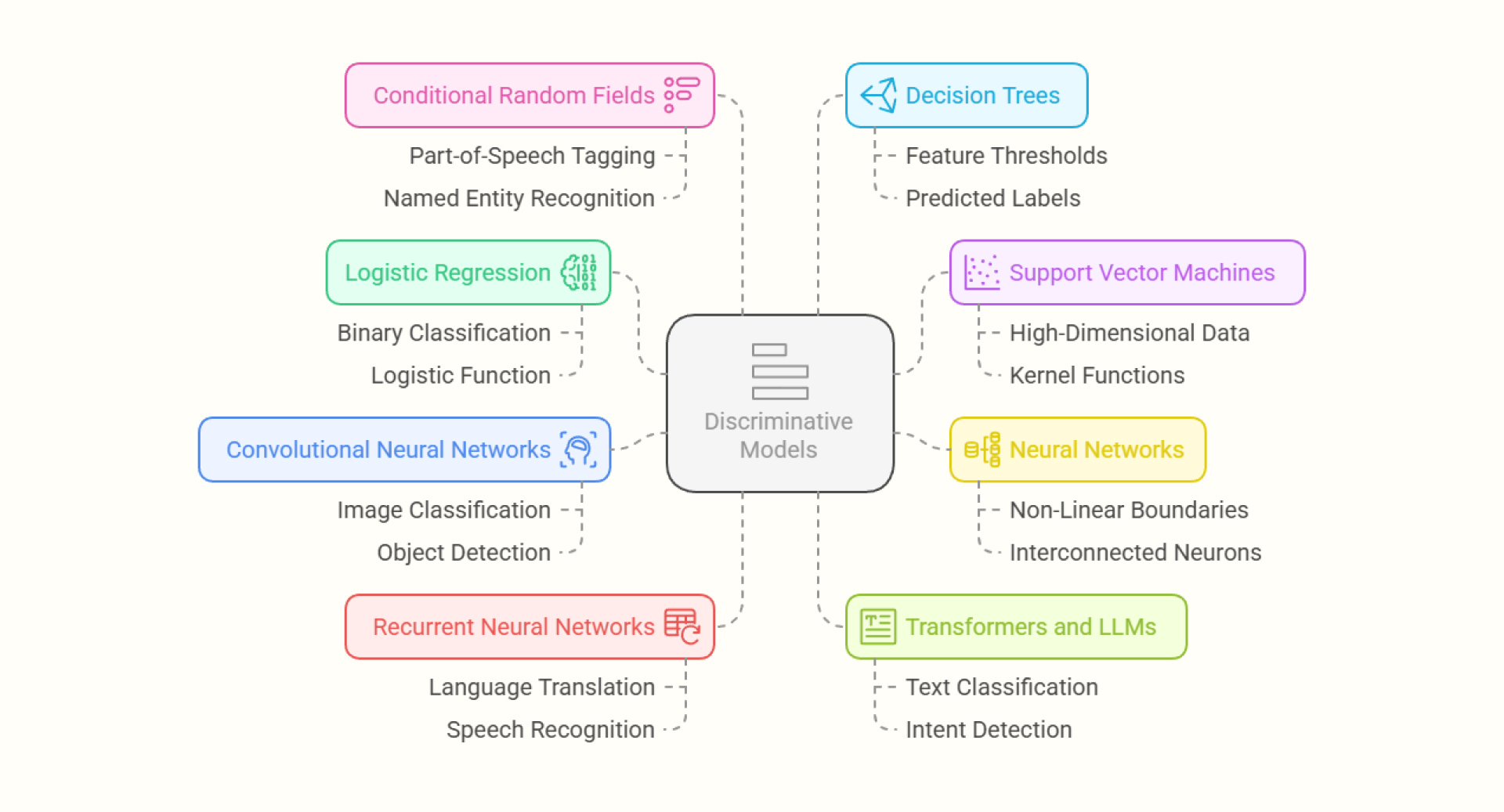

Discriminative models come in many forms, each suited to different types of data, tasks, and levels of complexity. Some of the most commonly used discriminative models in machine learning are:

1. Logistic regression (LR) is one of the simplest and most widely used discriminative models, especially for binary classification tasks. It models the probability of an input belonging to a specific class by applying a logistic function to a linear combination of input features.

2. Support vector machines (SVMs) are models designed to find the optimal hyperplane that separates classes with the maximum margin. They are particularly useful for high-dimensional data and can be extended to handle non-linear boundaries using kernel functions.

3. Neural networks are widely used discriminative models, particularly in applications involving complex or unstructured data. These models learn non-linear decision boundaries by passing input through layers of interconnected neurons.

- Convolutional neural networks (CNNs): Designed for tasks like image classification and object detection, CNNs extract spatial features through convolutional layers.

- Recurrent neural networks (RNNs): Often used in sequence modeling tasks like language translation, speech recognition, or time series prediction.

- Transformers and LLMs: Though many large language models are generative, they can be trained discriminatively for tasks like text classification or intent detection, depending on their training objective.

4. Conditional random fields (CRFs) are probabilistic models specialized for structured prediction problems, where outputs depend not only on inputs but also on other outputs. They’re commonly used in NLP tasks such as part-of-speech tagging, named entity recognition, and sequence labeling, where context between words matters.

5. Decision trees are interpretable models that split data based on feature thresholds to classify inputs. Each decision node in the tree represents a condition, and leaf nodes correspond to predicted labels. They’re useful in domains that require transparency, like finance or healthcare, and are well-suited for handling non-linear relationships in the data.
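As a rough illustration of the logistic regression entry above, the sketch below applies a logistic function to a linear combination of input features to estimate P(Y=1|X). The function names, weights, bias, and feature values are all made up for illustration; in practice the parameters would be learned from labeled data.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function: maps any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(features, weights, bias):
    """Estimate P(Y=1 | X) as sigmoid(w . x + b)."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)


# Hypothetical, hand-picked parameters (an assumption, not learned values).
weights = [2.0, -1.0]
bias = -0.5

# Linear combination: 2.0 * 1.0 + (-1.0) * 0.5 + (-0.5) = 1.0
p = predict_proba([1.0, 0.5], weights, bias)

# Threshold the probability at 0.5 to get a class label.
label = 1 if p >= 0.5 else 0
```

The 0.5 threshold corresponds to the decision boundary where the linear combination equals zero; inputs on one side are assigned class 1, inputs on the other side class 0.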

How Discriminative Models Work

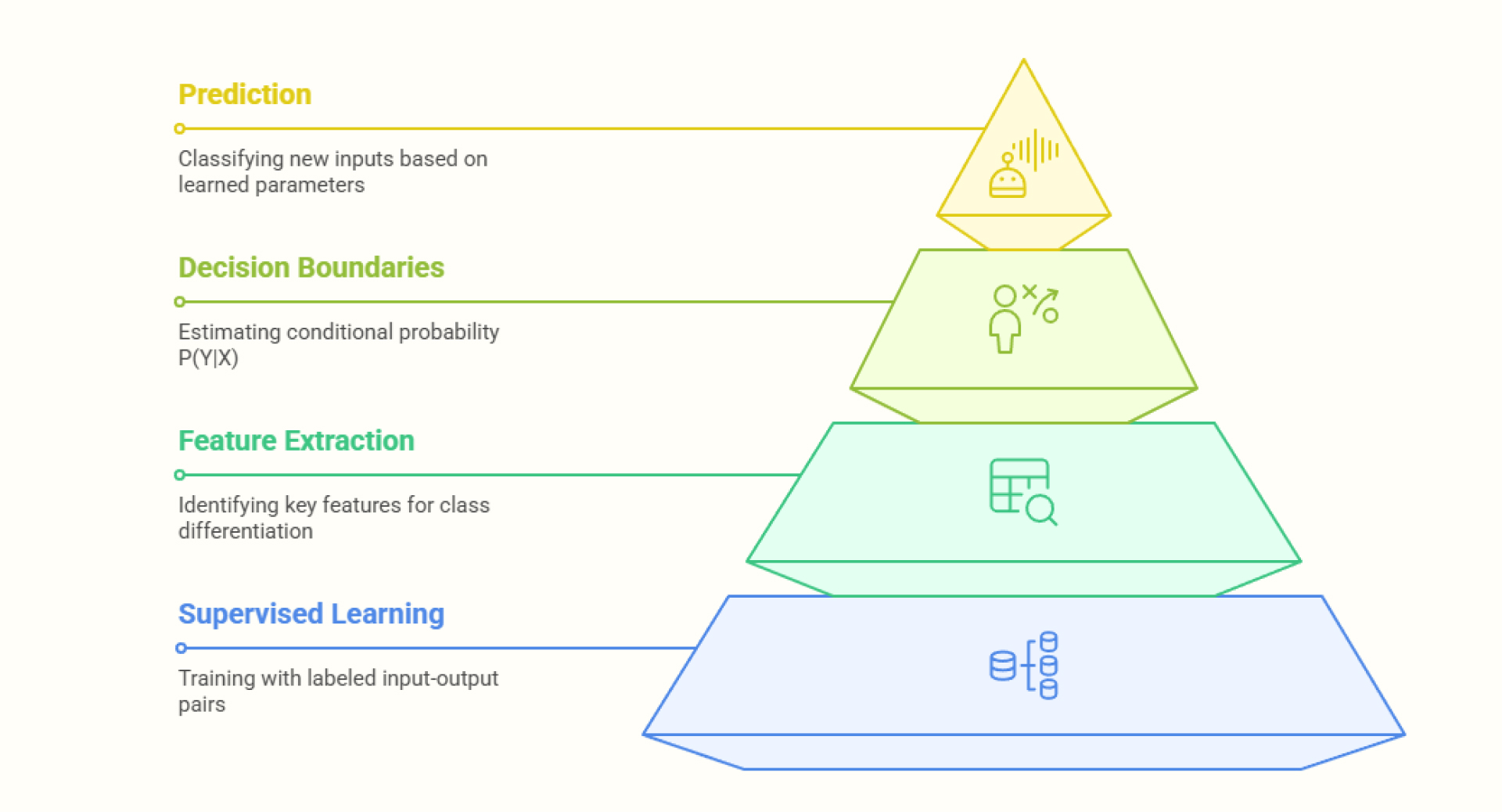

Discriminative models work by learning how to map input features to output labels through a direct and efficient process.

- Supervised learning with labeled data: Training begins with a dataset where each input (X) is paired with a known output label (Y). The model uses these examples to learn which features are most important for making correct predictions.

- Feature extraction: The model identifies and processes key features from the input that help differentiate between classes. These could be words in a sentence, pixel patterns in an image, or behaviors in transaction data, depending on the use case.

- Learning decision boundaries: Rather than modeling the full probability distribution of the data, discriminative models estimate the conditional probability P(Y|X). This involves finding a mathematical function that best separates the classes.

- Prediction on new data: The model uses the learned parameters to classify new, unseen inputs. It evaluates which side of the learned decision boundary the input falls on and predicts the most likely label accordingly.
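The steps above can be sketched end to end with a tiny logistic regression trained by gradient descent. This is a minimal sketch under stated assumptions, not a production implementation: the transaction data is invented, the two features (amount and number of prior chargebacks) are hypothetical, and the learning rate and epoch count are arbitrary choices.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(X, y, lr=0.5, epochs=2000):
    """Learn a decision boundary by gradient descent on the log loss."""
    w = [0.0] * len(X[0])
    b = 0.0
    n = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # gradient of the log loss w.r.t. the linear score
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        # Step against the average gradient.
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(x, w, b):
    """Return (label, estimated P(Y=1 | X)) for a new input."""
    p = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
    return (1 if p >= 0.5 else 0), p


# Step 1: labeled data (made-up examples; label 1 = fraud, 0 = legitimate).
# Step 2: features are [amount in thousands, number of prior chargebacks].
X = [[0.2, 0], [0.5, 0], [0.3, 1], [2.5, 2], [3.0, 1], [2.8, 3]]
y = [0, 0, 0, 1, 1, 1]

# Step 3: learn the parameters that separate the two classes.
w, b = train(X, y)

# Step 4: classify new, unseen inputs.
label_large, p_large = predict([2.9, 2], w, b)
label_small, p_small = predict([0.1, 0], w, b)
```

Nothing in this sketch models how transactions are generated; the parameters are fit only to separate the labeled examples, which is the defining trait of the discriminative approach.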

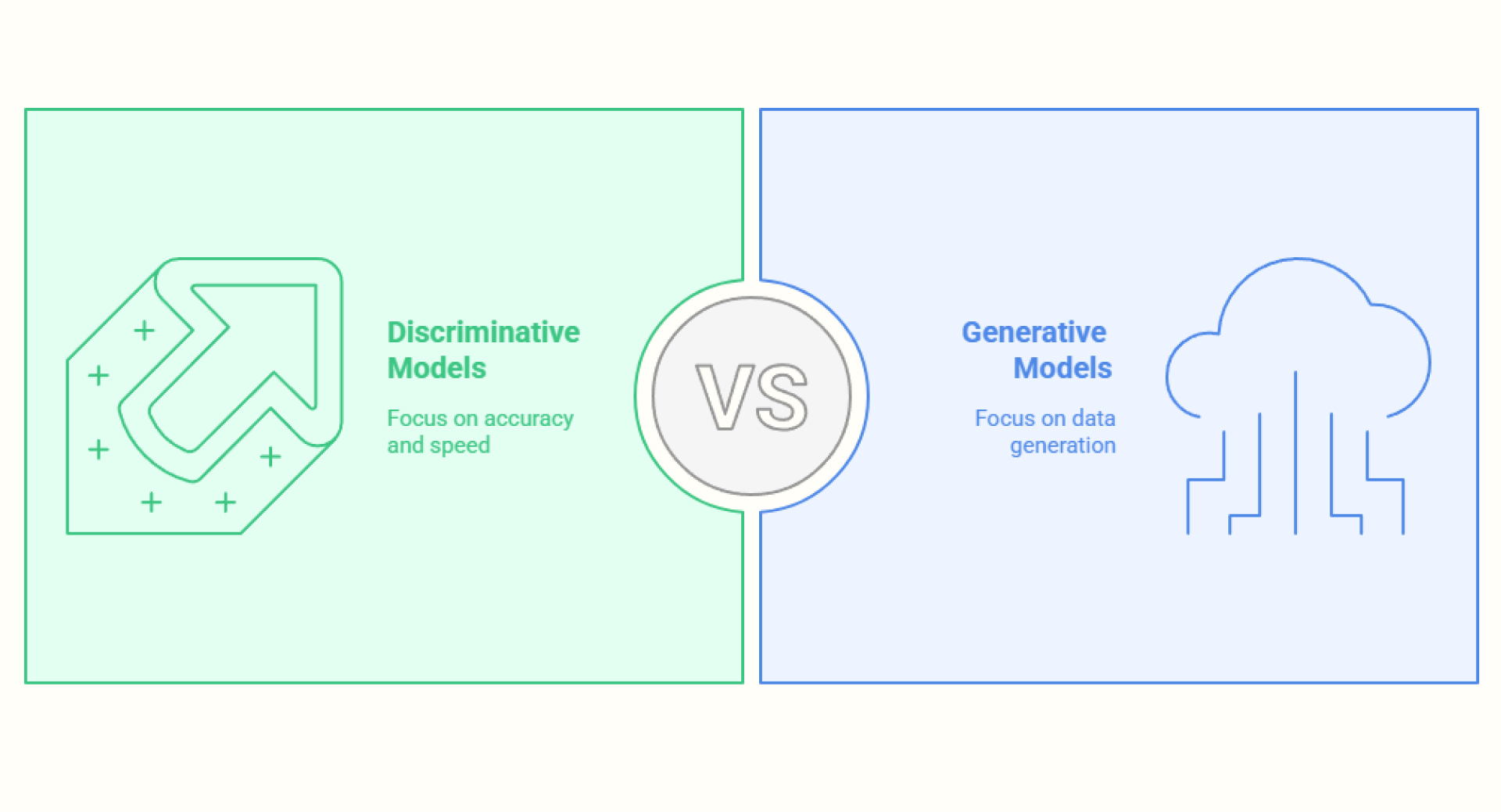

Discriminative vs. Generative Models

Discriminative and generative models are two complementary approaches in machine learning. Discriminative models focus on learning the boundary between classes, predicting the label (Y) directly from the input (X) by estimating P(Y|X).

Generative models learn the underlying distribution of the data. They model how inputs (X) and outputs (Y) occur together, that is, the joint distribution P(X, Y), which enables them to generate new data samples. They are behind technologies like ChatGPT and other content generation tools, making them essential for tasks like text generation, image synthesis, and translation.

Generative models can work with incomplete data but are generally slower and more complex to train. Despite their differences, the two approaches can complement each other. Hybrid models combine the pattern-separation power of discriminative models with the data-understanding capabilities of generative models. This allows systems to both classify and generate data effectively, which is especially useful in areas like semi-supervised learning and AI model pretraining.
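The difference between the two approaches can be written compactly. The notation below is a standard textbook formulation rather than something specific to any one model: a discriminative model parameterizes P(Y|X) directly, while a generative model describes the joint distribution and can recover P(Y|X) through Bayes' rule when a classification is needed.

```latex
% Discriminative: model the conditional distribution directly
\[
P(Y \mid X)
\]

% Generative: model the joint distribution, then classify via Bayes' rule
\[
P(X, Y) = P(X \mid Y)\, P(Y)
\quad\Longrightarrow\quad
P(Y \mid X) = \frac{P(X \mid Y)\, P(Y)}{\sum_{y'} P(X \mid Y = y')\, P(Y = y')}
\]
```

The extra work in the generative case is modeling P(X|Y), the distribution of the inputs themselves, which is what makes sampling new data possible and also what tends to make training more demanding.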

Conclusion

Discriminative modeling is a core concept in machine learning and an essential tool across modern AI systems. It plays a central role in applications like natural language processing, computer vision, recommendation systems, and fraud detection by focusing on learning direct mappings between input features and output labels.

While highly effective, discriminative modeling also comes with challenges. It often requires large amounts of labeled data, handles missing inputs less effectively, can inherit bias from training data, and may be harder to interpret depending on the model architecture. In complex tasks, combining multiple subtasks or incorporating additional structures may be necessary to reach optimal performance.

As research advances, hybrid approaches that combine discriminative and generative strengths are opening up new possibilities, making these models even more powerful and versatile.