What is Embedding Space?

Team Thinkstack

June 26, 2025

An embedding space is a continuous, multi-dimensional vector space in which discrete entities (words, images, users, graph nodes) are represented as dense numerical vectors. Embeddings compress high-dimensional or sparse inputs into lower-dimensional coordinates, with each dimension encoding a learned feature. The geometric relationships among these vectors (distances, directions) reflect semantic or functional similarities: entities that share attributes or contexts lie near one another, while unrelated entities lie farther apart.

Embedding space serves two primary purposes. First, it converts input data into compact vector representations that downstream models can process efficiently, lowering computational overhead and memory usage. Second, it preserves and exposes latent structure (semantic relationships in language, visual features in images, or user–item affinities in recommender systems) that traditional feature engineering might overlook. These learned representations are typically obtained via neural networks or factorization methods, trained to optimize an objective function that encourages semantically meaningful geometry.

Embedding spaces differ from generic latent spaces by explicitly targeting semantic relationships rather than mere dimensionality reduction. Whereas techniques like principal component analysis or vanilla autoencoders focus on compressing variance, embedding algorithms prioritize the preservation of context, co-occurrence, or interaction patterns.

Embedding spaces support a variety of machine-learning tasks, such as:

- Natural language processing: Word and sentence embeddings capture meaning, driving advances in translation, sentiment analysis, information retrieval, and conversational agents.

- Computer vision: Image embeddings distill visual features for classification, detection, and similarity search.

- Recommendation systems: User and item embeddings enable personalized suggestions by measuring proximity in a shared vector space.

- Search and retrieval: Query and document embeddings improve relevance by matching semantic intent rather than keyword overlap.

- Cross-modal modeling: Joint embeddings link text and vision (e.g., CLIP), supporting tasks like image captioning and multimodal retrieval.

- Anomaly and fraud detection: Embeddings reveal outliers in transaction or network data by identifying vectors that deviate from normal clusters.

- Interpretability and visualization: Techniques such as t-SNE or UMAP project embeddings for human analysis; novel methods leverage language models to narrate embedding structures.
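Several of the tasks above (recommendation, search, retrieval) reduce to the same operation: ranking candidate vectors by their proximity to a query vector. The following is a minimal sketch of that ranking step using cosine similarity. The item names, the three-dimensional vectors, and the helper `top_k_cosine` are hypothetical and hand-picked for illustration; real systems would use learned embeddings and usually an approximate nearest-neighbor index.

```python
import numpy as np

def top_k_cosine(query, items, k=2):
    """Return indices and scores of the k rows of `items` most similar to `query`."""
    # Normalize so that a dot product equals cosine similarity.
    q = query / np.linalg.norm(query)
    m = items / np.linalg.norm(items, axis=1, keepdims=True)
    scores = m @ q
    # Sort by descending score and keep the first k.
    order = np.argsort(-scores)[:k]
    return order, scores[order]

# Hypothetical item embeddings (hand-picked, not learned).
item_names = ["running shoes", "trail sneakers", "coffee maker"]
item_vecs = np.array([
    [0.9, 0.1, 0.0],
    [0.8, 0.3, 0.1],
    [0.0, 0.2, 0.9],
])
query_vec = np.array([1.0, 0.2, 0.0])  # e.g. a user or query embedding

idx, sims = top_k_cosine(query_vec, item_vecs, k=2)
top_names = [item_names[i] for i in idx]
print(top_names)
```

The two footwear items rank above the coffee maker because their vectors point in nearly the same direction as the query.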

How Embedding Spaces Work

Embedding spaces are constructed through a series of stages that turn raw, high-dimensional inputs into compact vectors whose relative positions encode meaningful relationships.

Encoding raw inputs

Each discrete entity, whether a word, image patch, user ID, or graph node, is first mapped to a fixed-length vector by an embedding layer. This step replaces sparse or categorical representations with continuous values that downstream models can process efficiently.
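As a rough illustration of this step, an embedding layer can be viewed as a matrix with one row per entity, where encoding is a row lookup. The sketch below assumes a hypothetical four-word vocabulary and random (untrained) values; it also shows that the lookup gives the same result as multiplying a sparse one-hot vector by the table.

```python
import numpy as np

rng = np.random.default_rng(0)

vocab = {"cat": 0, "dog": 1, "car": 2, "road": 3}  # hypothetical vocabulary
embedding_dim = 3

# The embedding layer is a trainable matrix: one row per entity.
# Values are random here; training would adjust them.
embedding_table = rng.normal(size=(len(vocab), embedding_dim))

def embed(tokens):
    """Map a list of tokens to their vectors by row lookup."""
    ids = np.array([vocab[t] for t in tokens])
    return embedding_table[ids]

# The lookup is equivalent to multiplying a one-hot vector by the table,
# but it avoids materializing the sparse one-hot representation.
one_hot_dog = np.eye(len(vocab))[vocab["dog"]]
via_matmul = one_hot_dog @ embedding_table
via_lookup = embed(["dog"])[0]

print(np.allclose(via_matmul, via_lookup))
```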

Training with objective functions

During model training, these vectors are refined to satisfy a chosen loss function. Predictive objectives, reconstruction objectives (e.g., autoencoder loss), or contrastive objectives guide the adjustment of embedding weights so that semantic or behavioral similarities emerge naturally in the space.
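To make the idea of a contrastive objective concrete, here is a minimal sketch of an InfoNCE-style loss, one common contrastive formulation, written in NumPy. The function name `info_nce_loss`, the temperature value, and the toy inputs are assumptions made for illustration; the source does not prescribe a particular loss. The loss is low when each anchor is most similar to its own positive and high when the pairing is wrong.

```python
import numpy as np

def info_nce_loss(anchors, positives, temperature=0.1):
    """Contrastive loss: row i of `anchors` should match row i of `positives`."""
    a = anchors / np.linalg.norm(anchors, axis=1, keepdims=True)
    p = positives / np.linalg.norm(positives, axis=1, keepdims=True)
    logits = (a @ p.T) / temperature                 # scaled pairwise cosine similarities
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # The correct match for anchor i sits on the diagonal.
    return float(-np.mean(np.diag(log_probs)))

aligned = np.array([[1.0, 0.0], [0.0, 1.0]])
loss_good = info_nce_loss(aligned, aligned)        # each anchor matches its positive
loss_bad = info_nce_loss(aligned, aligned[::-1])   # positives swapped between anchors

print(loss_good < loss_bad)
```

In actual training, the gradient of such a loss with respect to the embedding weights is what moves matching pairs together and non-matching pairs apart.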

Interpreting distances and directions

Once optimized, the geometry of the embedding space reflects real-world affinities: small distances indicate strong similarity, vector offsets capture relational patterns, and clusters reveal shared attributes.
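The sketch below illustrates both ideas, distance and offset, on a deliberately tiny example. The two-dimensional vectors are hand-built so that one axis loosely stands for "royalty" and the other for "gender"; they are assumptions for illustration, and learned embeddings show such regularities only approximately.

```python
import numpy as np

# Hand-built 2-D vectors: axis 0 ~ "royalty", axis 1 ~ "gender".
vectors = {
    "king":  np.array([2.0,  1.0]),
    "queen": np.array([2.0, -1.0]),
    "man":   np.array([0.2,  1.0]),
    "woman": np.array([0.2, -1.0]),
    "apple": np.array([-1.0, 0.1]),
}

def cosine(u, v):
    """Cosine similarity: 1 means same direction, -1 means opposite."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

def nearest(target, exclude):
    """Word whose vector is most similar to `target`, skipping `exclude`."""
    candidates = {w: v for w, v in vectors.items() if w not in exclude}
    return max(candidates, key=lambda w: cosine(target, candidates[w]))

# Distance: related words score higher than unrelated ones.
print(cosine(vectors["king"], vectors["queen"]) > cosine(vectors["king"], vectors["apple"]))

# Direction: the man -> woman offset applied to "king" lands on "queen".
offset_result = vectors["king"] - vectors["man"] + vectors["woman"]
answer = nearest(offset_result, exclude={"king", "man", "woman"})
print(answer)
```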

Choosing dimensionality

The number of embedding dimensions determines the trade-off between expressivity and robustness. Higher-dimensional spaces can model subtle distinctions but demand more data and computation; lower-dimensional spaces simplify training and reduce overfitting risk. Task requirements and data availability guide this choice.
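One part of this trade-off is simple arithmetic: a dense embedding table stores one value per entity per dimension, so its size grows linearly with the dimension. The sketch below assumes a hypothetical vocabulary of 50,000 entities and 32-bit floats; the helper `embedding_table_bytes` is illustrative only.

```python
def embedding_table_bytes(num_entities, dim, bytes_per_value=4):
    """Memory for a dense table; float32 values take 4 bytes each."""
    return num_entities * dim * bytes_per_value

vocab_size = 50_000  # hypothetical number of entities
for dim in (64, 256, 1024):
    params = vocab_size * dim
    mib = embedding_table_bytes(vocab_size, dim) / 2**20
    print(f"dim={dim:>4}: {params:>11,} parameters, {mib:7.1f} MiB")
```

Each added parameter also has to be estimated from data, which is why larger dimensions tend to need more training examples.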

Fine-tuning and adaptation

Embeddings trained on large datasets are frequently reused to initialize or adapt models for new, domain-specific tasks. By adding lightweight projection layers or adapters and continuing training on new data, the space retains its general semantic structure while aligning to the new objective, which reduces data requirements and accelerates convergence.
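A minimal sketch of the projection-layer idea follows. It keeps a set of "pre-trained" vectors frozen and fits only a small linear map on top of them. Everything here is synthetic and assumed for illustration: the vectors are random stand-ins, the domain targets are generated from a made-up linear map, and a closed-form least-squares fit stands in for the gradient-based training an actual adapter would use.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for frozen, pre-trained embeddings: 200 entities, 16 dimensions.
pretrained = rng.normal(size=(200, 16))

# Synthetic "domain" targets produced by an unknown linear map plus noise.
true_map = rng.normal(size=(16, 4))
domain_targets = pretrained @ true_map + 0.01 * rng.normal(size=(200, 4))

# Fit only the small projection; the pre-trained vectors are never modified.
adapter, *_ = np.linalg.lstsq(pretrained, domain_targets, rcond=None)

adapted = pretrained @ adapter
fit_error = float(np.mean((adapted - domain_targets) ** 2))
print(adapter.shape, fit_error)
```

Only the 16 × 4 projection is learned, which is far fewer parameters than retraining the full table.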

Types of Embedding Spaces

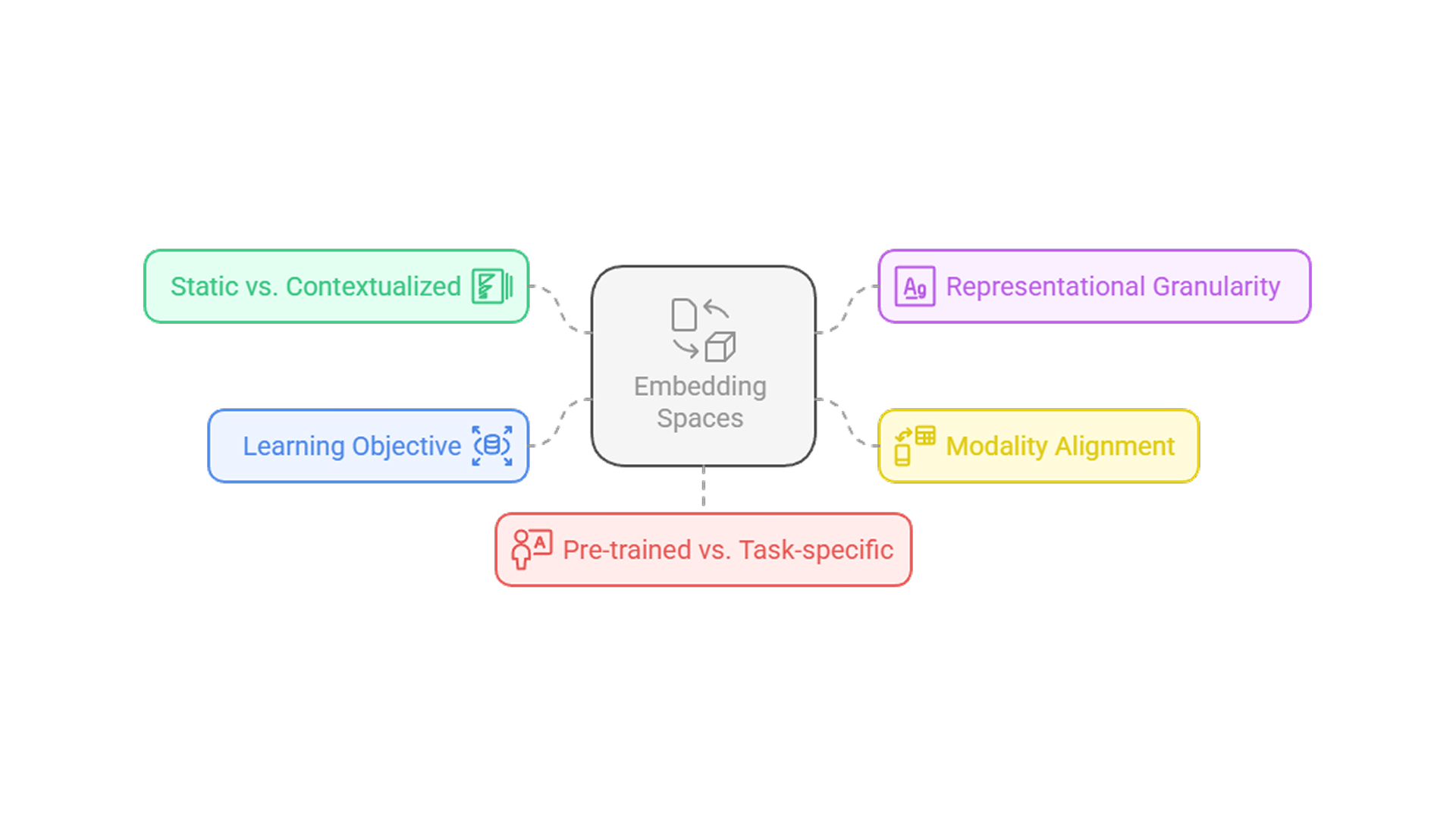

Embedding spaces differ according to how they capture context, the units they encode, the data modalities they serve, the training objectives they optimize, and the degree to which they generalize across tasks.

Static vs. contextualized

Static spaces assign each entity a fixed vector, reflecting global co-occurrence patterns. Contextualized spaces produce vectors that vary with surrounding inputs, disambiguating meaning according to local usage.
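The difference can be shown with a toy example. In the sketch below, the static table always returns the same vector for "bank", while a crude context-mixing function returns different vectors depending on the neighboring word. The vectors and the averaging rule in `contextual_vector` are assumptions chosen for illustration; real contextualized models compute this mixing with learned components rather than a fixed average.

```python
import numpy as np

# Static table: one fixed vector per word (hand-picked values).
static = {
    "river": np.array([1.0, 0.0]),
    "money": np.array([0.0, 1.0]),
    "bank":  np.array([0.5, 0.5]),
}

def contextual_vector(tokens, position, mix=0.5):
    """Blend a token's static vector with the mean of its neighbors."""
    own = static[tokens[position]]
    neighbors = [static[t] for i, t in enumerate(tokens) if i != position]
    context = np.mean(neighbors, axis=0)
    return (1 - mix) * own + mix * context

bank_near_river = contextual_vector(["river", "bank"], position=1)
bank_near_money = contextual_vector(["money", "bank"], position=1)

print(static["bank"], bank_near_river, bank_near_money)
```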

Representational granularity

Spaces may encode subword fragments, individual tokens, or entire passages. Finer-grained units improve coverage of rare or compound forms; coarser units capture broader semantic themes at the expense of lexical detail.
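To illustrate why finer-grained units help with rare forms, the sketch below builds a word vector by averaging vectors of its character trigrams, so that even a word never seen before receives a representation. The bucket count, dimension, random bucket vectors, and the helpers `char_ngrams` and `subword_vector` are all assumptions for illustration; in a trained model the bucket vectors would be learned.

```python
import zlib
import numpy as np

DIM = 8
NUM_BUCKETS = 1000
rng = np.random.default_rng(0)
bucket_vectors = rng.normal(size=(NUM_BUCKETS, DIM))  # would be learned in practice

def char_ngrams(word, n=3):
    """Character n-grams of a word, with < and > marking its boundaries."""
    padded = f"<{word}>"
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]

def subword_vector(word):
    """Average the vectors of the hashed n-grams that make up `word`."""
    # crc32 is a stable hash; Python's built-in hash() is salted per process.
    ids = [zlib.crc32(g.encode("utf-8")) % NUM_BUCKETS for g in char_ngrams(word)]
    return bucket_vectors[ids].mean(axis=0)

print(char_ngrams("cat"))
print(subword_vector("catlike").shape)
```

Words that share many trigrams, such as "cat" and "cats", share most of their component vectors and therefore end up with related representations.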

Modality alignment

Each embedding space is tailored to its data source (text, images, audio, graphs, or tabular fields) so that its vector geometry mirrors the intrinsic structure of that modality. Multimodal spaces reconcile two or more domains within a shared coordinate system.

Learning objective

The loss function used during training defines which relationships are emphasized. Predictive objectives draw co-occurring items together; reconstructive objectives preserve overall structure through compression; contrastive objectives pull similar pairs together and push dissimilar pairs apart; supervised objectives shape vectors for specific downstream labels.

Pre-trained vs. task-specific

Pre-trained spaces provide a broad semantic structure learned on large, generic datasets. Task-specific spaces are trained or fine-tuned on domain data to capture bespoke patterns. Adapter or projection layers enable minimal adjustment of a pre-trained space to new objectives without losing its general organization.

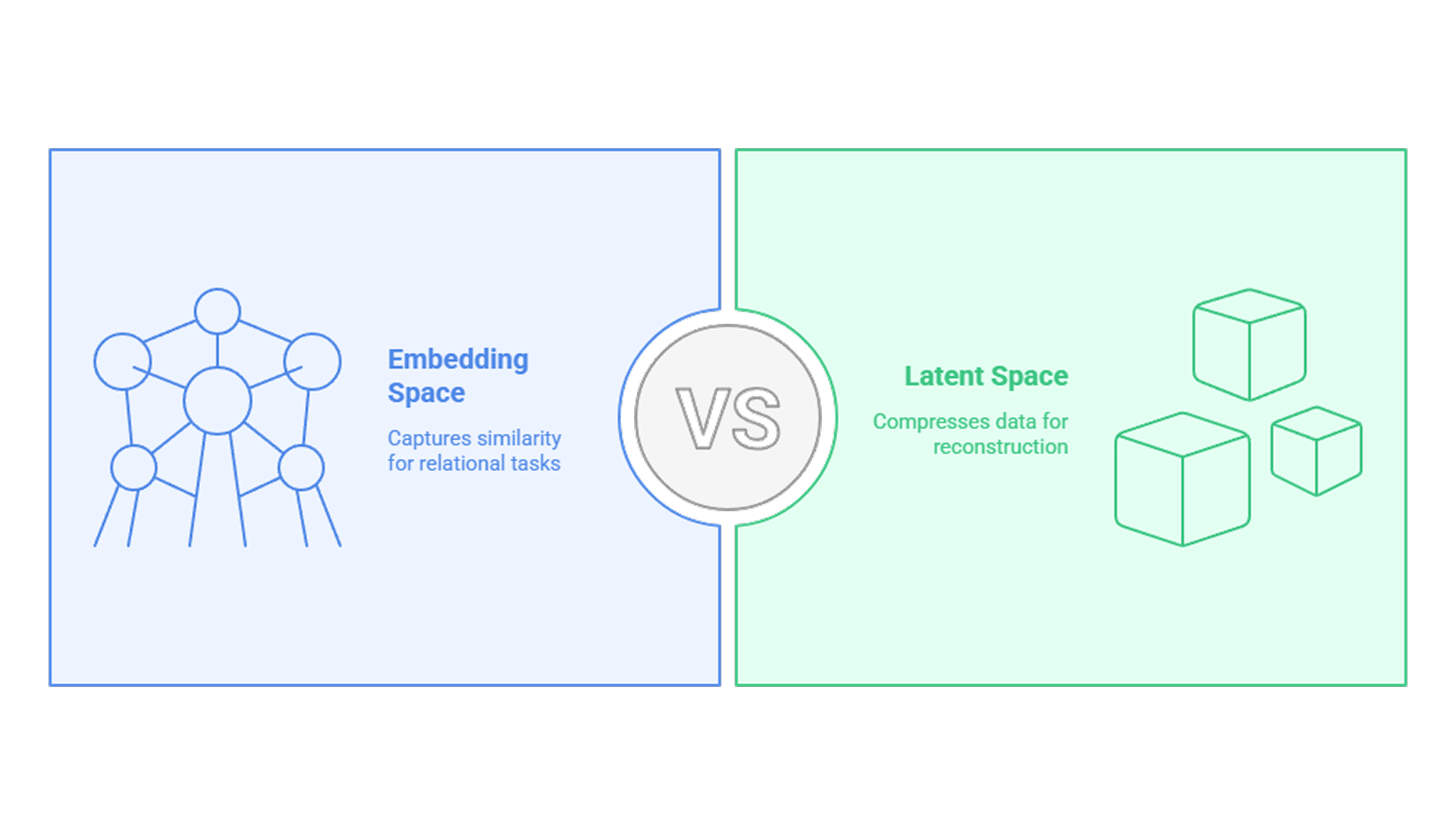

Embedding Space vs. Latent Space

While embedding spaces and latent spaces both map high-dimensional data to continuous vectors, they differ in purpose, training, and use.

Purpose

- Embedding spaces capture semantic or relational similarity, placing like entities close together for tasks such as recommendation or analogy.

- Latent spaces focus on compressing data to its core features for faithful reconstruction or generative modeling.

Training Objectives

- Embedding vectors arise from predictive or contrastive losses that shape inter-vector distances according to co-occurrence or similarity criteria.

- Latent representations are learned via reconstructive or statistical losses (e.g., autoencoder reconstruction, PCA variance maximization).
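As a small illustration of the reconstructive side of this contrast, the sketch below computes a PCA latent space with a singular value decomposition and measures how well the original data can be rebuilt from it. The data is synthetic (five observed dimensions generated from two hidden ones) and the variable names are assumptions for illustration. Note that nothing in this objective refers to similarity between specific pairs of items; it only rewards faithful reconstruction.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: 100 points in 5-D that mostly vary along 2 hidden directions.
hidden = rng.normal(size=(100, 2))
mixing = rng.normal(size=(2, 5))
data = hidden @ mixing + 0.01 * rng.normal(size=(100, 5))

mean = data.mean(axis=0)
centered = data - mean

# Rows of vt are principal directions, ordered by explained variance.
_, _, vt = np.linalg.svd(centered, full_matrices=False)
components = vt[:2]

latent = centered @ components.T            # 2-D latent codes
reconstructed = latent @ components + mean  # map back to 5-D
reconstruction_mse = float(np.mean((reconstructed - data) ** 2))

print(latent.shape, reconstruction_mse)
```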

When to Use

- Embedding spaces excel at capturing similarity, building relational models, and powering downstream tasks that depend on vector affinity.

- Latent spaces excel at compressing data, removing noise, and generating new samples in scenarios where accurate reconstruction is paramount.

Conclusion

Embedding spaces lie at the heart of modern machine learning, distilling raw inputs into compact vectors that capture semantic and functional relationships for tasks like language understanding, image analysis, recommendations, and anomaly detection. While pre-training and fine-tuning tailor these spaces to specific domains, they can inherit biases, suffer from misaligned objectives, and become harder to interpret as dimensions grow. Aligning loss functions to task goals, choosing the right dimensionality, and applying adapter layers or targeted fine-tuning help keep embeddings meaningful, and rigorous validation via similarity metrics and downstream benchmarks guards against spurious patterns. Paired with latent-space methods or hybrid training objectives, embedding spaces provide a versatile, scalable foundation for building robust, explainable AI systems.