What is Learning Rate?

Team Thinkstack

June 06, 2025


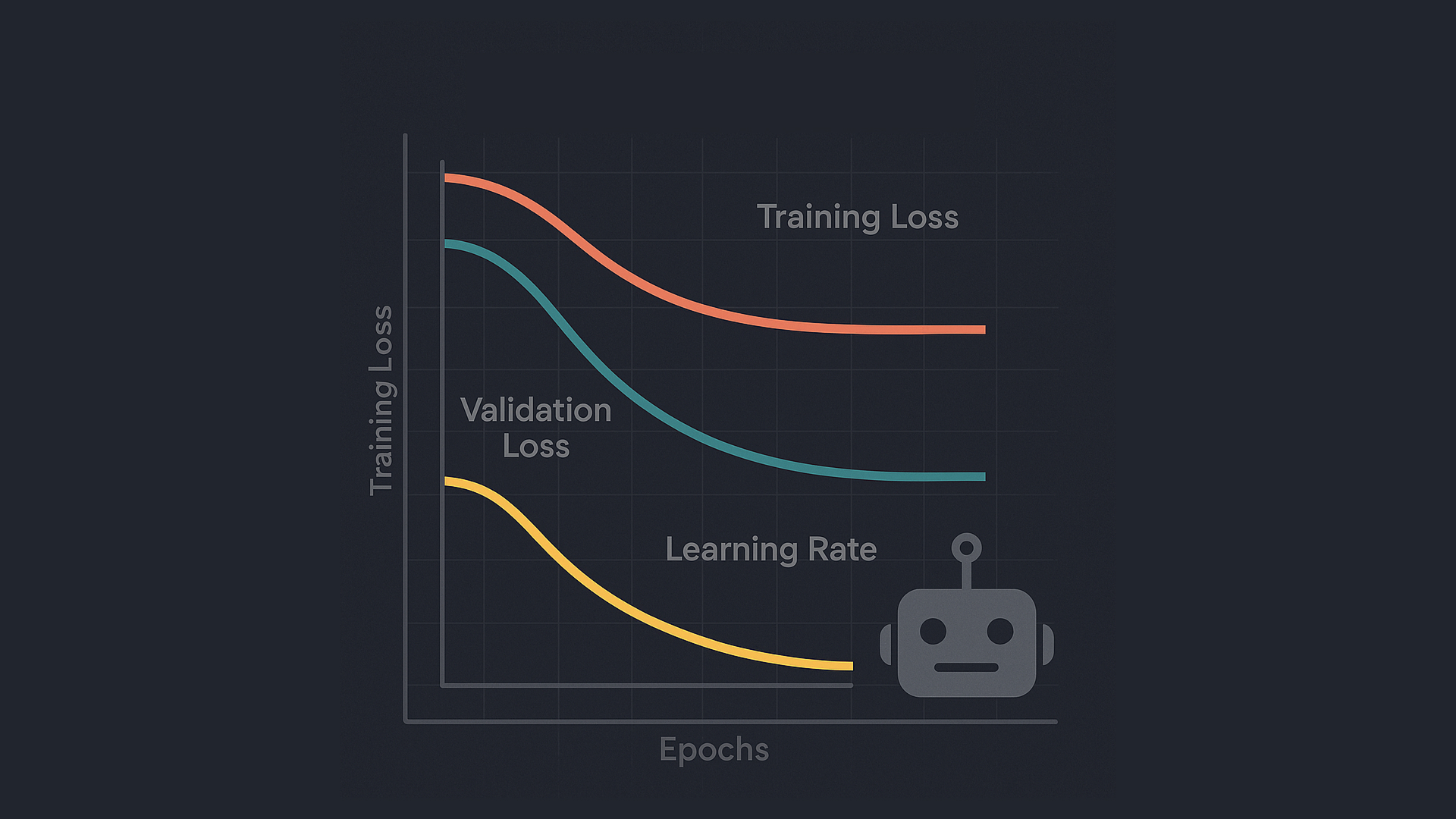

Learning rate is a foundational hyperparameter in the training of machine learning models, particularly deep neural networks. It controls the magnitude of updates applied to model weights in response to the gradient of a loss function, directly influencing how efficiently and stably a model learns. Typically denoted as η or α, it appears simple, but its influence spans convergence, generalization, training dynamics, and model stability.

Alongside the learning rate, other choices, such as the batch size and the way gradients are computed through backpropagation, also shape how updates are applied, affecting the speed, stability, and quality of training.

Training a model involves minimizing a loss function over successive iterations, typically using variants of gradient descent. This iterative optimization process is often pictured as walking downhill: the model navigates a multidimensional loss landscape, stepping against the gradient to reach a minimum. At each step, the model parameters θ are updated according to:

$$\theta_{t+1} = \theta_t - \eta \nabla J(\theta_t)$$

Here, ∇J(θₜ) represents the gradient of the loss with respect to the model parameters, and η is the learning rate. The learning rate sets how big a jump the model takes when adjusting weights during training. If η is too small, progress is slow and may stagnate. If it’s set too high, the updates can overshoot the target, bounce around, or cause training to break down.
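To make the effect of η concrete, here is a minimal sketch that applies the update rule above to a toy one-parameter loss, J(θ) = ½·a·θ² with a = 1. The quadratic is an assumption chosen because its gradient, a·θ, is easy to check by hand; the function name `gradient_descent` and the η values are illustrative.

```python
def gradient_descent(eta, theta0=5.0, a=1.0, steps=50):
    """Minimize the toy loss J(theta) = 0.5 * a * theta**2 with a fixed learning rate.

    Returns the list of parameter values visited, starting with theta0.
    """
    theta = theta0
    history = [theta]
    for _ in range(steps):
        grad = a * theta              # gradient of the quadratic loss at theta
        theta = theta - eta * grad    # theta_{t+1} = theta_t - eta * grad J(theta_t)
        history.append(theta)
    return history

# Compare a too-small, a well-chosen, a borderline, and a too-large learning rate.
for eta in (0.01, 0.5, 1.9, 2.1):
    print(f"eta={eta}: final theta = {gradient_descent(eta)[-1]:.4g}")
```

With this setup, η = 0.01 is still far from the minimum at θ = 0 after 50 steps, η = 0.5 reaches it almost exactly, η = 1.9 overshoots so that θ flips sign every step while slowly shrinking, and η = 2.1 overshoots by more each step and blows up.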

The learning rate influences more than just training speed. Its value has a direct impact on three critical aspects of model performance:

- Convergence: A well-chosen learning rate helps the model settle into a good solution quickly and with less computational effort. Poorly chosen values can slow training significantly or prevent it from converging at all.

- Generalization: The learning rate shapes how the model explores the loss landscape. It affects whether the optimization settles into a sharp local minimum or a flatter, more generalizable region.

- Training Stability: A learning rate that is too large can make the loss and the parameters oscillate, making learning erratic or causing it to diverge.
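The link between step size and stability can be worked out exactly for a simple quadratic loss, J(θ) = ½·a·θ² with curvature a > 0. This is a toy model, not a real network, but substituting its gradient into the update rule shows where oscillation and divergence come from:

$$
\begin{aligned}
\nabla J(\theta) &= a\,\theta \\
\theta_{t+1} &= \theta_t - \eta\, a\, \theta_t = (1 - \eta a)\,\theta_t \\
\theta_t &= (1 - \eta a)^t\, \theta_0
\end{aligned}
$$

The iterates shrink toward the minimum only when |1 − ηa| < 1, that is, 0 < η < 2/a. For η ≤ 1/a they approach it from one side; for 1/a < η < 2/a the factor 1 − ηa is negative, so θ flips sign every step while still converging; for η > 2/a they grow without bound. For a real network the loss is not a single quadratic, but near a minimum a similar rule of thumb applies with a replaced by the largest eigenvalue of the Hessian, the direction of sharpest curvature.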

Together, these factors make the learning rate one of the most sensitive and consequential hyperparameters in deep learning.

Types of Learning Rate

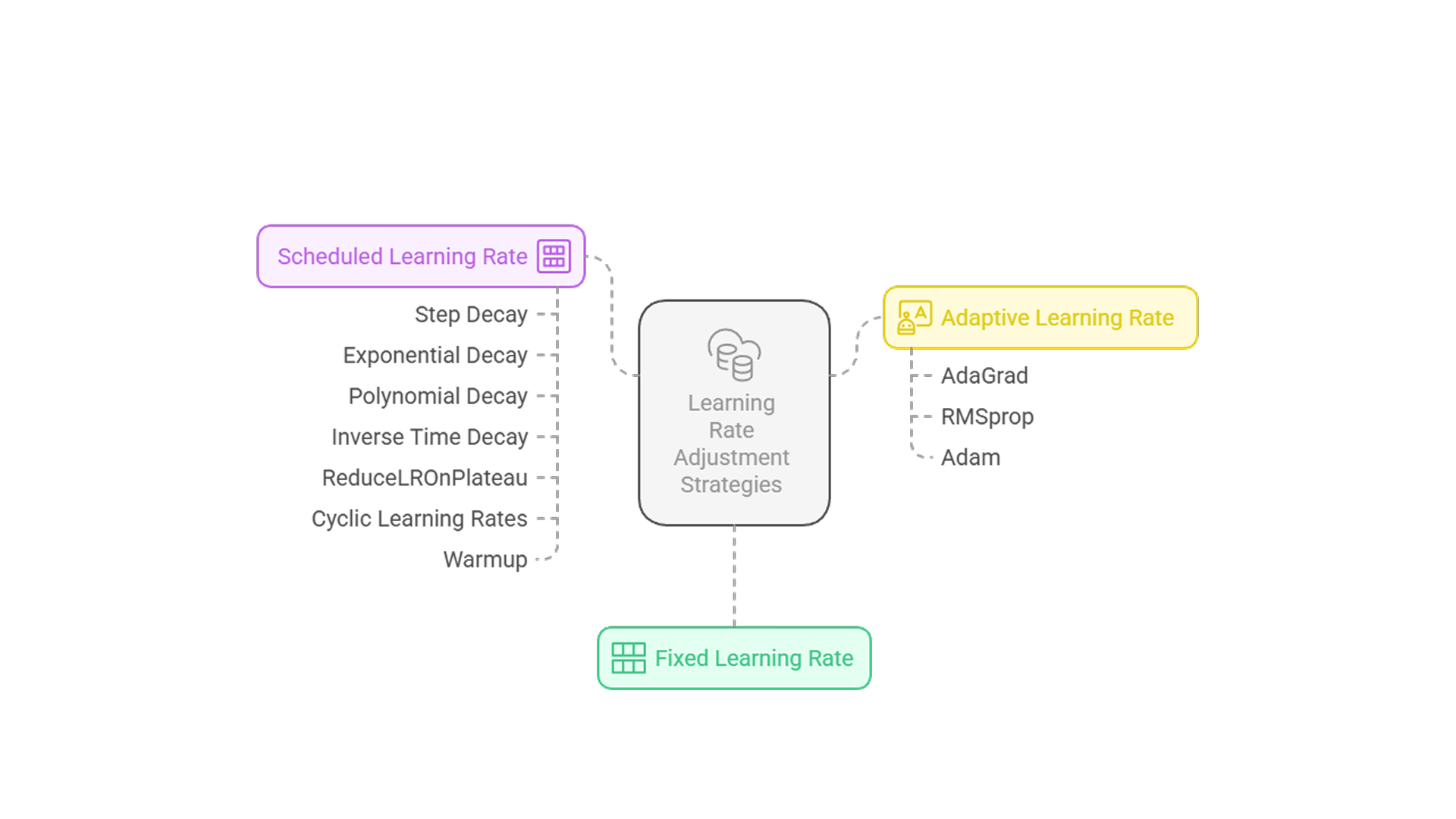

A single fixed value often fails to meet the needs of training at every stage. For this reason, a variety of strategies have been developed to manage the learning rate dynamically, either over time or based on feedback from the training process. Learning rate adjustment methods are typically grouped into three main types:

1. Fixed learning rate keeps the step size constant throughout training. This approach is simple and easy to implement, but it offers no flexibility: if the chosen rate is too high or too low, the mismatch affects convergence and performance at every stage. It's often used as a baseline or when computational constraints leave little room for tuning. The value is usually chosen by trial and error, and without careful tuning a fixed rate can lead to slow training or instability.

2. Scheduled learning rate strategies change the learning rate over time using a predefined rule. The general idea is to start with a higher learning rate to enable fast learning early on and gradually reduce it to refine the model as training progresses. These schedules help avoid overshooting and improve convergence near minima.

- Step decay reduces the learning rate at fixed intervals (every few epochs) by a constant factor.

- Exponential decay reduces the learning rate gradually by applying a consistent decay factor at every step in training.

- Polynomial decay lowers the learning rate from its initial value to a final value along a polynomial curve over a fixed number of training steps, slowing updates progressively.

- Inverse time decay shrinks the learning rate gradually as training progresses, in proportion to the inverse of time (for example, η₀ / (1 + k·t) for an initial rate η₀, decay rate k, and step t).

- ReduceLROnPlateau monitors key metrics such as validation loss and reduces the learning rate when progress stalls.

- Cyclic learning rates (CLR) vary the learning rate up and down within a defined range in a periodic manner, which can help the model escape saddle points or poor local minima and, in some settings, converge faster.

- Warmup starts training with a small learning rate and ramps it up gradually to the target value, which stabilizes early training dynamics. It is often used with large models, where large updates in the first steps can destabilize learning.
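The schedules listed above can be sketched as plain functions that map a step or epoch index t to a learning rate. This is a minimal, framework-free illustration: the function names, parameter names, and default values are assumptions, not the API of any particular library (deep learning frameworks ship their own versions with different signatures). The `ReduceOnPlateau` class is a simplified stand-in for the ReduceLROnPlateau idea, without extras such as cooldown periods or improvement thresholds.

```python
import math

# Each schedule maps a step (or epoch) index t, starting at 0, to a learning rate.
# Names and default values are illustrative assumptions, not a library API.

def step_decay(t, lr0=0.1, drop=0.5, every=10):
    # Multiply by `drop` once every `every` epochs.
    return lr0 * drop ** (t // every)

def exponential_decay(t, lr0=0.1, k=0.05):
    # Same multiplicative factor exp(-k) applied at every step.
    return lr0 * math.exp(-k * t)

def polynomial_decay(t, lr0=0.1, lr_end=0.001, total=100, power=2.0):
    # Polynomial curve from lr0 down to lr_end over `total` steps, then flat.
    frac = min(t, total) / total
    return (lr0 - lr_end) * (1 - frac) ** power + lr_end

def inverse_time_decay(t, lr0=0.1, k=0.5):
    # Shrinks in proportion to 1 / (1 + k * t).
    return lr0 / (1 + k * t)

def triangular_cyclic(t, lr_min=0.001, lr_max=0.1, half_cycle=20):
    # Rises linearly from lr_min to lr_max over `half_cycle` steps, then falls back.
    pos = t % (2 * half_cycle)
    frac = pos / half_cycle if pos < half_cycle else 2 - pos / half_cycle
    return lr_min + (lr_max - lr_min) * frac

def linear_warmup(t, target_lr=0.1, warmup_steps=10):
    # Ramps from target_lr / warmup_steps up to target_lr, then holds it.
    return target_lr * min(1.0, (t + 1) / warmup_steps)

class ReduceOnPlateau:
    """Simplified plateau rule: multiply the rate by `factor` after more than
    `patience` consecutive checks without improvement in the monitored loss."""

    def __init__(self, lr=0.1, factor=0.1, patience=3, min_lr=1e-6):
        self.lr, self.factor, self.patience, self.min_lr = lr, factor, patience, min_lr
        self.best = math.inf
        self.bad_checks = 0

    def step(self, val_loss):
        if val_loss < self.best:
            self.best, self.bad_checks = val_loss, 0
        else:
            self.bad_checks += 1
            if self.bad_checks > self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.bad_checks = 0
        return self.lr

# Usage: print how a few schedules evolve over the first 50 steps.
for t in range(0, 50, 10):
    print(t, step_decay(t), round(polynomial_decay(t), 4), round(triangular_cyclic(t), 4))

plateau = ReduceOnPlateau(patience=2)
for loss in [1.0, 0.9, 0.9, 0.9, 0.9]:
    lr = plateau.step(loss)
print("rate after a stalled validation loss:", lr)
```

Because each schedule here is just a function of t, it can be paired with any optimizer by setting the step size to the schedule's value before each update; in practice, warmup is often chained with one of the decay schedules.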

3. Adaptive learning rate methods adjust the effective learning rate automatically during training based on gradient behavior. Unlike scheduled approaches, which rely on step or epoch count, adaptive methods scale each parameter's step using running statistics of its past gradients, so they respond as training proceeds to how steep or flat the loss landscape is along each direction. This can help them handle noisy data, sparse gradients, or rapidly changing loss surfaces more effectively.

- AdaGrad gives each parameter its own learning rate by dividing the global rate by the square root of that parameter's accumulated sum of squared gradients. This makes it effective for sparse data, but because the sum only grows, the effective learning rate can shrink too aggressively over time.

- RMSprop improves on AdaGrad by replacing the cumulative sum with an exponentially decaying moving average of squared gradients, so the effective learning rate does not decay toward zero.

- Adam combines momentum and adaptive learning rates using the first and second moments of gradients. It’s widely used due to its strong performance across many tasks.
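The three adaptive rules can be written out for a single scalar parameter to show where the per-parameter scaling comes from. This is a simplified sketch: the function names and the dictionary used to hold optimizer state are illustrative assumptions, and the default hyperparameters (for example Adam's β₁ = 0.9, β₂ = 0.999, ε = 1e-8) are commonly used values rather than requirements.

```python
import math

# Single-parameter versions of three adaptive update rules.
# `state` is a dict that carries each optimizer's running statistics between calls.

def adagrad_step(theta, grad, state, lr=0.1, eps=1e-8):
    # Running SUM of squared gradients: it only grows, so steps keep shrinking.
    state["g2_sum"] = state.get("g2_sum", 0.0) + grad ** 2
    return theta - lr * grad / (math.sqrt(state["g2_sum"]) + eps)

def rmsprop_step(theta, grad, state, lr=0.01, rho=0.9, eps=1e-8):
    # Exponential moving AVERAGE of squared gradients: old gradients fade out.
    state["g2_avg"] = rho * state.get("g2_avg", 0.0) + (1 - rho) * grad ** 2
    return theta - lr * grad / (math.sqrt(state["g2_avg"]) + eps)

def adam_step(theta, grad, state, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    t = state["t"] = state.get("t", 0) + 1
    # First moment: moving average of gradients (the momentum term).
    state["m"] = beta1 * state.get("m", 0.0) + (1 - beta1) * grad
    # Second moment: moving average of squared gradients (the adaptive scale).
    state["v"] = beta2 * state.get("v", 0.0) + (1 - beta2) * grad ** 2
    # Bias correction, because m and v are initialized at zero.
    m_hat = state["m"] / (1 - beta1 ** t)
    v_hat = state["v"] / (1 - beta2 ** t)
    return theta - lr * m_hat / (math.sqrt(v_hat) + eps)

def step_sizes(step_fn, grad, n):
    """Apply `n` updates with a constant gradient and record each step's size."""
    theta, state, sizes = 0.0, {}, []
    for _ in range(n):
        new_theta = step_fn(theta, grad, state)
        sizes.append(abs(new_theta - theta))
        theta = new_theta
    return sizes

for name, fn in [("AdaGrad", adagrad_step), ("RMSprop", rmsprop_step), ("Adam", adam_step)]:
    # First-step size for a large and a small gradient: nearly identical.
    print(name, step_sizes(fn, 1000.0, 1)[0], step_sizes(fn, 1.0, 1)[0])

# With a constant gradient, AdaGrad's step shrinks like 1/sqrt(t); RMSprop's does not.
print(step_sizes(adagrad_step, 1.0, 10_000)[-1], step_sizes(rmsprop_step, 1.0, 10_000)[-1])
```

Two behaviors described above show up directly. On the first step, each method moves a parameter by roughly the same amount whether its gradient is 1000 or 1, whereas plain gradient descent would move it 1000 times further. And with a constant gradient, AdaGrad's step keeps shrinking like 1/√t, while RMSprop's settles near its learning rate.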

Common Pitfalls in Learning Rate Selection

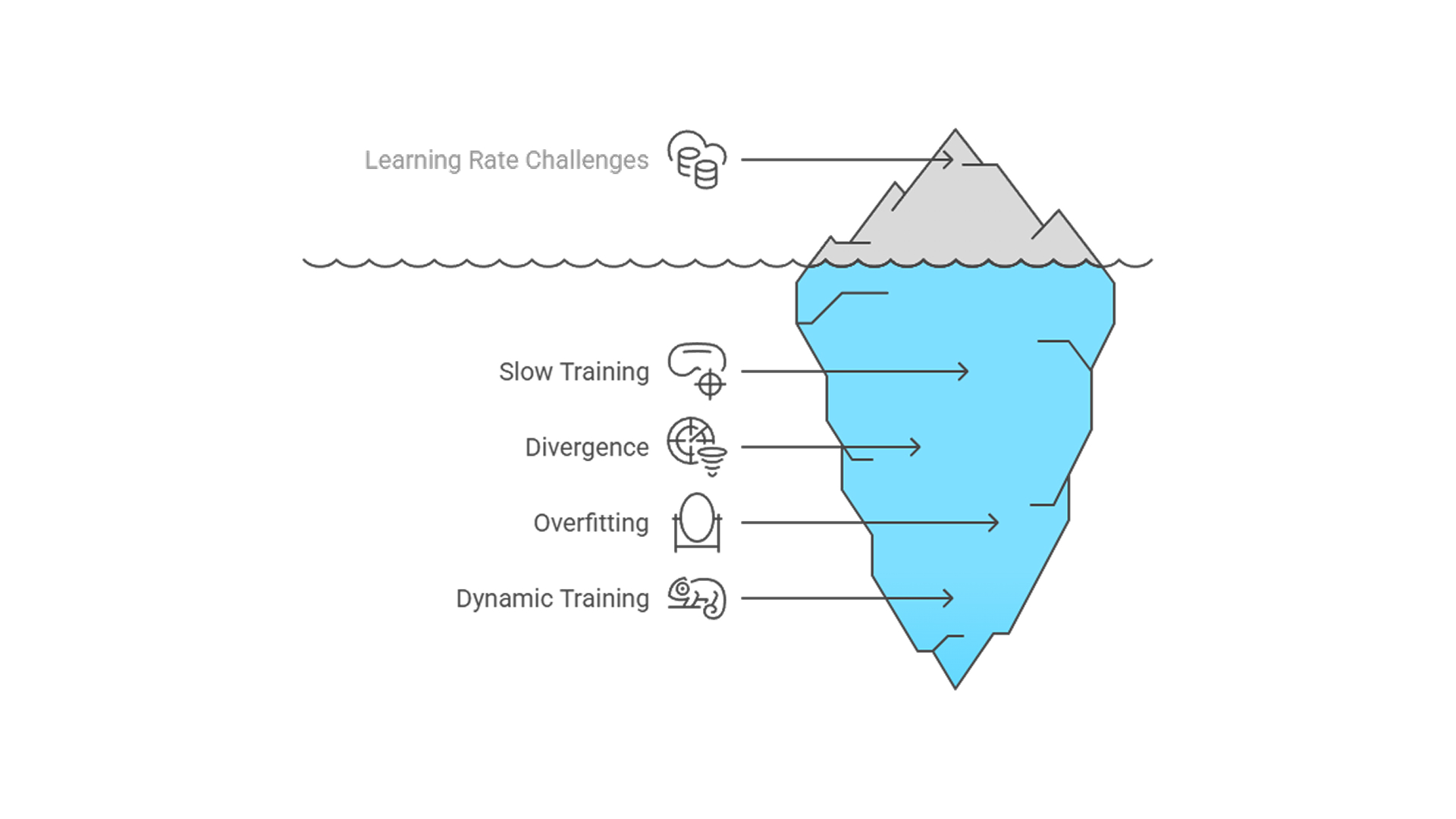

Though conceptually simple, learning rate mistakes are among the most common causes of broken training runs. Missteps typically fall into three main problem types:

1. Too low a learning rate slows training drastically. Models take longer to converge, consume more compute, and may get stuck in suboptimal regions of the loss surface. Within a limited training budget, underfitting becomes likely as the model struggles to capture meaningful patterns in the data.

2. Too high a learning rate risks overshooting minima, causing chaotic oscillations in the loss or outright divergence. While a high rate may speed up early training, it often leads to unstable convergence or leaves the model in a poor solution, and in severe cases the run collapses entirely.

3. Poor adaptability of fixed learning rates makes them unsuitable for dynamic training needs. The ideal rate depends on factors like data complexity, model architecture, and optimizer dynamics, and it can change as training progresses. Static rates fail to respond to evolving gradient signals, often resulting in inefficient or unstable training. This is especially problematic in agent architectures, where models must operate autonomously and adapt in real time to changing environments. To address these demands, modern workflows increasingly adopt scheduled and adaptive learning rate strategies that evolve during training to improve robustness and stability.
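A common first check against these pitfalls is a simple sweep: train briefly with several learning rates spaced by factors of ten and compare the outcomes. The sketch below does this for plain gradient descent on a hypothetical five-point linear regression problem; the data, the 100-step budget, the `blowup` threshold, and the helper names `train` and `sweep` are assumptions chosen for illustration.

```python
import math

# Hypothetical toy dataset generated from y = 3x + 1; the model is y_hat = w*x + b.
XS = [0.0, 1.0, 2.0, 3.0, 4.0]
YS = [3 * x + 1 for x in XS]

def mse(w, b):
    return sum((w * x + b - y) ** 2 for x, y in zip(XS, YS)) / len(XS)

def train(eta, steps=100, blowup=1e6):
    """Full-batch gradient descent with a fixed learning rate.

    Returns the final mean squared error, or math.inf if the loss blows up.
    """
    w = b = 0.0
    n = len(XS)
    loss = mse(w, b)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(XS, YS)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, XS)) / n
        grad_b = 2 * sum(errors) / n
        w, b = w - eta * grad_w, b - eta * grad_b
        loss = mse(w, b)
        if not math.isfinite(loss) or loss > blowup:
            return math.inf  # diverged: the step size is too large
    return loss

def sweep(etas):
    """Train once per candidate learning rate and collect the final losses."""
    return {eta: train(eta) for eta in etas}

results = sweep([1e-4, 1e-3, 1e-2, 1e-1, 1.0])
for eta, loss in results.items():
    print(f"eta={eta:g}:", "diverged" if math.isinf(loss) else f"final loss {loss:.3g}")
print("best eta on this grid:", min(results, key=results.get))
```

On this toy problem the smallest rates leave the loss far above zero after the fixed budget (too low), η = 1.0 diverges within a few steps (too high), and the lowest final loss comes from 0.1, the largest value on the grid that still converges. A real model needs its own sweep, ideally judged on a validation metric.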

Conclusion

Learning rate sits at the core of training dynamics. Set incorrectly, it can stall learning or destabilize it entirely. But when managed well, through dynamic schedules, adaptive optimizers, and tuning tools, it becomes a precise instrument for achieving fast, stable, and effective training. Mastering it is essential for building performant models that converge reliably and generalize well.