What is Semantic Understanding?

Team Thinkstack

June 06, 2025

Semantic understanding is the ability of a machine learning system to infer meaning from language, not through surface-level token matching, but by analyzing the structure, context, and conceptual relationships embedded in text. It represents a core shift from syntactic parsing to the interpretation of language in a way that aligns with human communication. In modern AI, this capability is foundational to natural language processing, information retrieval, conversational interfaces, and knowledge extraction.

Language is inherently ambiguous, contextual, and layered with nuance. For machines to operate in language-rich domains, they must interpret not just what is said, but what is meant. Semantic understanding addresses this need by enabling models to resolve word sense ambiguity, infer relational meaning, and represent language in forms that reflect its real-world referents. This capability supports a wide range of tasks, from identifying sentiment and intent to translating language or retrieving relevant information based on meaning rather than keywords.

In practice, semantic understanding is a multi-layered process. At its base is lexical analysis: mapping individual words to their basic meanings. Beyond that lies compositional semantics, where the meaning of a phrase or sentence emerges from the arrangement and interaction of its parts. Effective semantic models must also handle contextual modulation, where identical words may carry different meanings depending on usage, tone, or surrounding discourse. In reinforcement-based systems like contextual bandits, semantic cues help the agent choose actions dynamically based on the linguistic context, optimizing outcomes over time.

The importance of semantic understanding in AI stems from its role in enabling intelligent behavior. It powers systems that can answer questions accurately, translate with fluency, summarize with fidelity, and engage in dialogue that aligns with human expectations. Without it, AI remains constrained to brittle, pattern-matching approximations. This capability is foundational to intelligent systems and autonomous agents that need to act on language inputs in real time, supported by robust dialogue management systems that track context and intent across turns.

How Semantic Understanding Works

Semantic understanding is implemented through a multi-layered workflow that combines linguistic analysis, representational abstraction, and model-driven inference. The process integrates conceptual layers, computational techniques, and structured representation to achieve meaning comprehension.

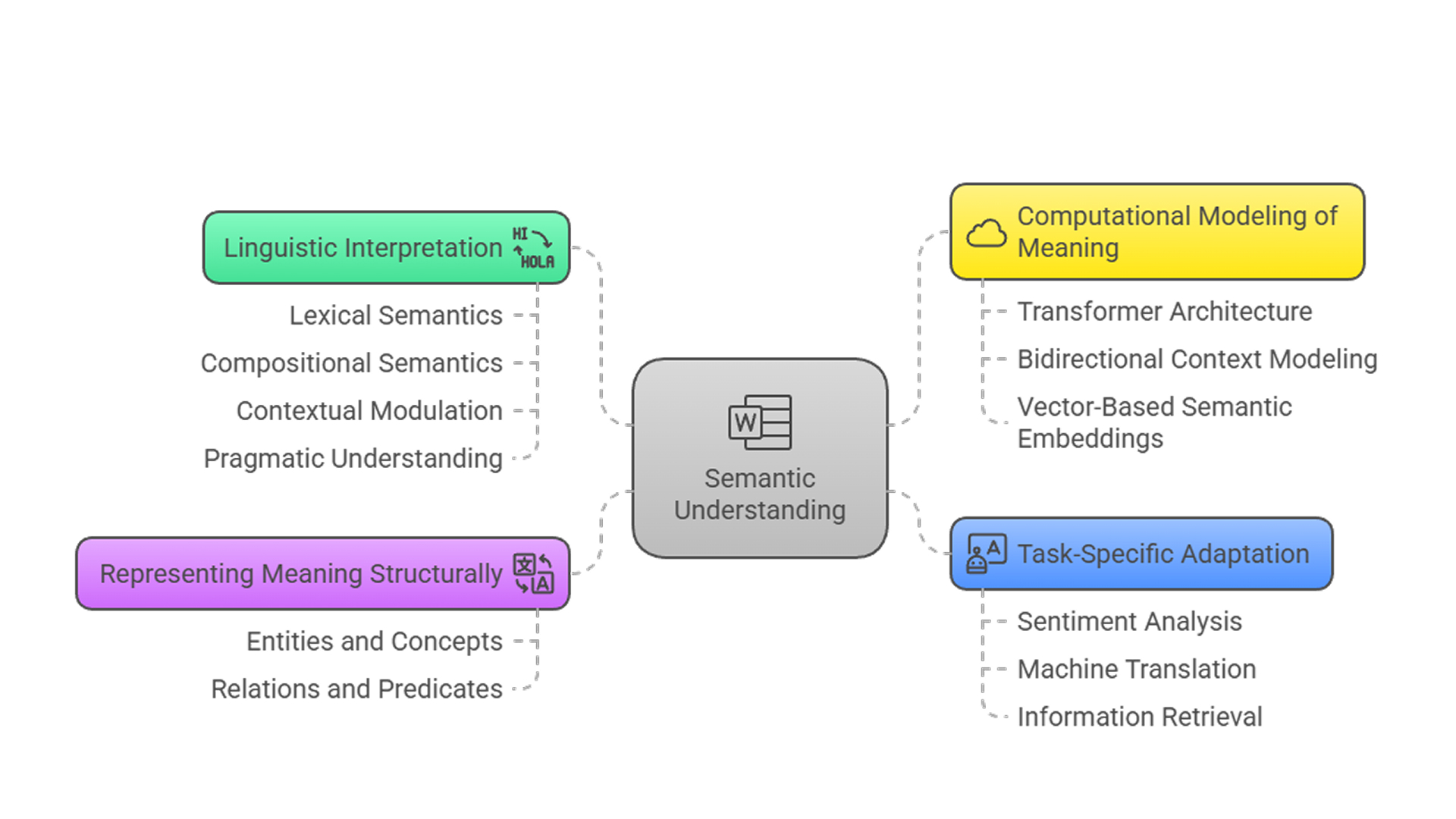

Linguistic interpretation and meaning disambiguation: Understanding begins with analyzing language at different interpretive levels.

- Lexical semantics identifies the base meanings of individual words, serving as the entry point for semantic processing.

- Compositional semantics derives sentence-level meaning from the grammatical and syntactic interaction of words.

- Contextual modulation resolves ambiguity by evaluating the surrounding textual environment, for example by distinguishing "bank" as a financial institution from "bank" as the edge of a river.

- Pragmatic understanding interprets implied intent and tone, recognizing sarcasm, politeness, or indirect cues.

- Conceptual relationships capture associative or hierarchical word relations like synonymy or categorical inclusion.

Mechanisms like word sense disambiguation and syntactic parsing ensure that word meanings are resolved in context and structural roles are correctly assigned.
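
To make word sense disambiguation concrete, here is a minimal sketch in the spirit of the simplified Lesk approach: pick the sense whose dictionary gloss shares the most words with the sentence. The sense inventory, glosses, stopword list, and function names are all assumptions written for this example. They do not come from a real lexicon, and production systems typically rely on contextual embeddings instead.

```python
# Toy sense inventory. The glosses are hypothetical, written for this example.
SENSES = {
    "bank": {
        "financial_institution": "an institution that accepts deposits and lends money to customers",
        "river_edge": "the sloping land along the edge of a river or stream",
    }
}

# Small illustrative stopword list (an assumption, not a standard resource).
STOPWORDS = {"a", "an", "the", "of", "to", "and", "or", "that",
             "on", "in", "at", "she", "he", "we"}


def tokenize(text):
    """Lowercase, strip surrounding punctuation, and drop stopwords."""
    words = (w.strip(".,;:!?\"'").lower() for w in text.split())
    return {w for w in words if w and w not in STOPWORDS}


def disambiguate(word, sentence):
    """Return the sense whose gloss overlaps most with the sentence.

    Ties go to the sense listed first in the inventory.
    """
    context = tokenize(sentence)
    scores = {
        sense: len(context & tokenize(gloss))
        for sense, gloss in SENSES[word].items()
    }
    return max(scores, key=scores.get)


print(disambiguate("bank", "She deposits money at the bank every Friday."))
# financial_institution
print(disambiguate("bank", "We sat on the bank of the river and watched the stream."))
# river_edge
```

Word overlap is brittle: a sentence that shares no vocabulary with either gloss falls back to the first sense. That brittleness is one reason learned, context-sensitive representations have largely replaced this kind of rule.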

Representing meaning structurally: To support reasoning and interpretation, raw language is converted into structured forms. These formal semantic units enable models to link language to knowledge bases, ontologies, and logic systems for further inference.

- Entities and Concepts identify and categorize what is being referenced (e.g., "Marie Curie" → scientist).

- Relations and Predicates define interactions and actions (e.g., "authored by", "located in").
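
One common way to hold entities and relations in a structured form is a list of subject-predicate-object triples. The sketch below is illustrative only: the `Triple` class, the predicate names, and the helper functions are assumptions made for this example, not a standard knowledge-base API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Triple:
    subject: str
    predicate: str
    obj: str


# A tiny hand-written fact store for illustration.
FACTS = [
    Triple("Marie Curie", "is_a", "scientist"),
    Triple("Marie Curie", "born_in", "Warsaw"),
    Triple("Warsaw", "located_in", "Poland"),
]


def objects_of(subject, predicate, facts=FACTS):
    """Return every object linked to `subject` by `predicate`."""
    return [t.obj for t in facts
            if t.subject == subject and t.predicate == predicate]


def country_of_birth(person, facts=FACTS):
    """Chain two relations (born_in, then located_in) to infer a new fact."""
    return [country
            for city in objects_of(person, "born_in", facts)
            for country in objects_of(city, "located_in", facts)]


print(objects_of("Marie Curie", "is_a"))   # ['scientist']
print(country_of_birth("Marie Curie"))     # ['Poland']
```

The second function shows why structure matters: "Marie Curie was born in Poland" is never stated directly, but it can be derived by following two relations.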

Computational modeling of meaning: Semantic understanding relies on a set of advanced modeling techniques. These models learn to represent meaning not through symbolic rules but through statistical patterns across massive corpora, enabling generalization and flexibility.

- Transformer architecture uses self-attention to capture semantic relevance across sequences, forming the foundation of models like BERT.

- Bidirectional context modeling: models like BERT and Bi-LSTM draw on both the left and right context of each token, enhancing context awareness.

- Vector-based semantic embeddings represent words in high-dimensional spaces where semantic similarity is inferred from proximity.

- Attention mechanisms assign higher weights to contextually important tokens, improving interpretation in tasks like summarization and translation.
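
The self-attention idea in the list above can be sketched in a few lines. This is a deliberately simplified version of scaled dot-product attention: the queries, keys, and values are all the raw input vectors, whereas real Transformer layers apply learned projection matrices and use multiple heads. The two-dimensional token vectors are made up for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def self_attention(vectors):
    """Scaled dot-product self-attention with Q = K = V = vectors.

    Returns (outputs, weights), where weights[i][j] is how much
    token i attends to token j.
    """
    d = len(vectors[0])
    outputs, weights = [], []
    for q in vectors:
        scores = [dot(q, k) / math.sqrt(d) for k in vectors]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted average of all input vectors.
        outputs.append([sum(w_j * v[i] for w_j, v in zip(w, vectors))
                        for i in range(d)])
    return outputs, weights


tokens = ["river", "bank", "loan"]
embeddings = [[1.0, 0.0], [0.7, 0.7], [0.0, 1.0]]  # toy vectors, not learned

outputs, weights = self_attention(embeddings)
for tok, row in zip(tokens, weights):
    print(tok, [round(w, 3) for w in row])
```

Each row of `weights` sums to 1, and each token's output vector becomes a blend of the tokens it attends to most. This is how a word's representation comes to depend on its neighbors.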

Task-specific adaptation: Pretraining provides general semantic competence, but real-world utility requires fine-tuning. Fine-tuning ensures that models adapt their semantic representations to the specific structure and language of the target task.

- Sentiment analysis aligns embeddings with emotional tone detection.

- Machine translation enhances alignment between languages.

- Information retrieval matches user queries to document meanings based on semantic content.
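
As a rough illustration of meaning-based retrieval, the sketch below ranks documents by cosine similarity between a query vector and document vectors. The three-dimensional vectors are hand-assigned stand-ins for what an embedding model would produce, and the document titles and function names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


# Pretend embeddings: in practice these come from a trained model.
DOC_VECTORS = {
    "How to open a savings account": [0.9, 0.1, 0.0],
    "Comparing mortgage interest rates": [0.6, 0.6, 0.1],
    "Best hiking trails along the river": [0.0, 0.2, 0.9],
}


def rank(query_vec, docs):
    """Return document titles sorted from most to least similar."""
    return sorted(docs,
                  key=lambda title: cosine(query_vec, docs[title]),
                  reverse=True)


# Pretend embedding of the query "where can I deposit money".
query = [0.85, 0.2, 0.05]

for title in rank(query, DOC_VECTORS):
    print(round(cosine(query, DOC_VECTORS[title]), 3), title)
```

The query shares no keywords with the top-ranked title. The match comes entirely from the vectors sitting close together, which is what fine-tuning for retrieval tries to achieve for real queries and documents.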

Integrated semantic processing workflow: These layers work together, with some steps running in sequence and others in parallel:

- Disambiguation establishes word meanings.

- Compositional analysis derives sentence meaning.

- Embeddings encode similarity and nuance.

- Structural representations support logic and inference.

- Fine-tuning ensures domain specificity.

Challenges in Semantic Understanding

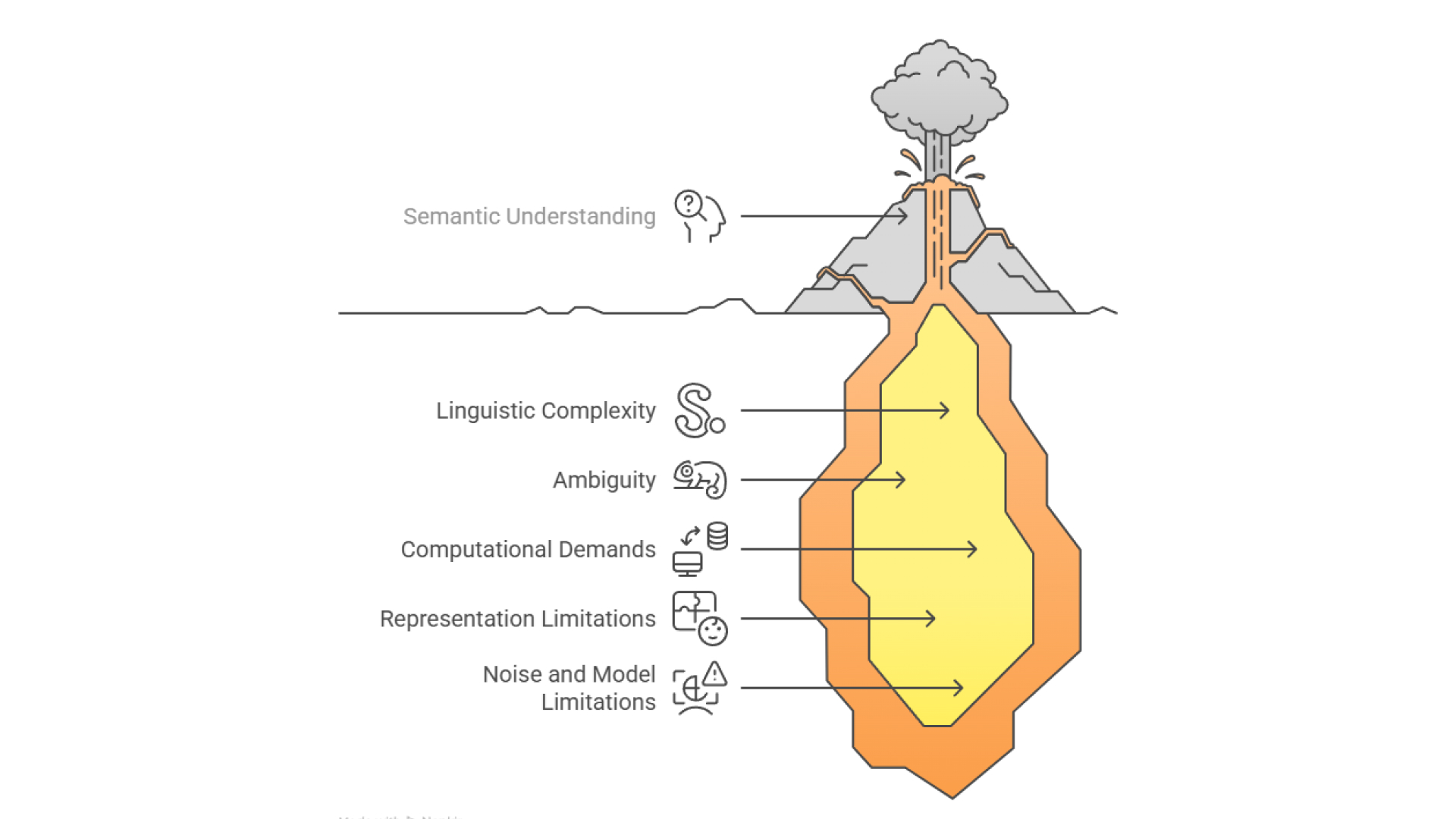

Despite its central role in modern AI, semantic understanding presents several persistent challenges that affect accuracy, scalability, and generalizability:

- Linguistic complexity: Human language is inherently nuanced and subjective. Capturing semantics requires models to interpret meaning across multiple layers, all of which demand interpretive depth beyond surface-level patterns.

- Ambiguity and disambiguation: Many words have multiple meanings, and their correct interpretation depends heavily on context. Additionally, semantic drift over time can complicate interpretation, particularly in dynamic or evolving language environments.

- Computational and resource demands: Training models that perform well on semantic tasks requires extensive computational power and access to large, diverse corpora. Fine-tuning and evaluation for multiple domains further increase resource costs.

- Representation limitations: There is a persistent gap between low-level word embeddings and high-level semantic structures. Bridging this "semantic gap" is critical for deeper language understanding and logical inference, but remains a complex technical hurdle.

- Noise and model limitations: Pretraining methods such as masked language modeling (e.g., in BERT) can introduce artifacts. The model sees a special mask token during pretraining that never appears in downstream inputs, and this mismatch can reduce performance in downstream tasks. Balancing noise resilience with semantic fidelity is an ongoing concern in model architecture and training.
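
To show where masking noise comes from, here is a minimal sketch of the corruption step used in BERT-style masked language modeling. It follows the commonly described recipe: select about 15% of tokens, then replace 80% of those with a mask token, 10% with a random token, and leave 10% unchanged. The function name, the toy vocabulary, and the whitespace tokenization are assumptions for this example, not any library's API.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, rng, select_prob=0.15):
    """Corrupt a token sequence for masked language modeling.

    Returns (inputs, labels). labels[i] holds the original token at
    positions the model must predict, and None everywhere else.
    """
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < select_prob:
            labels.append(tok)
            roll = rng.random()
            if roll < 0.8:
                inputs.append(MASK)               # 80%: mask token
            elif roll < 0.9:
                inputs.append(rng.choice(vocab))  # 10%: random token
            else:
                inputs.append(tok)                # 10%: left unchanged
        else:
            inputs.append(tok)
            labels.append(None)
    return inputs, labels


vocab = ["the", "bank", "approved", "loan", "river"]  # toy vocabulary
tokens = "the bank approved the loan".split() * 4

inputs, labels = mask_tokens(tokens, vocab, random.Random(0))
print(" ".join(inputs))
```

The random and unchanged replacements exist to soften the mismatch between pretraining, where the mask token appears, and downstream use, where it does not. They also inject deliberate noise into the training signal.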

Conclusion

Semantic understanding plays a central role in enabling AI systems to interpret language with contextual precision and representational depth. While its development is challenged by linguistic ambiguity, structural complexity, and computational demands, these limitations are not fixed barriers. Advances in model architecture, representation learning, and contextual inference continue to improve reliability and scalability. With ongoing refinement, semantic understanding will remain foundational to language-driven AI systems, often embedded within modular agent architecture frameworks operating across tasks, domains, and modalities.