What is Underfitting?

Team Thinkstack

June 06, 2025


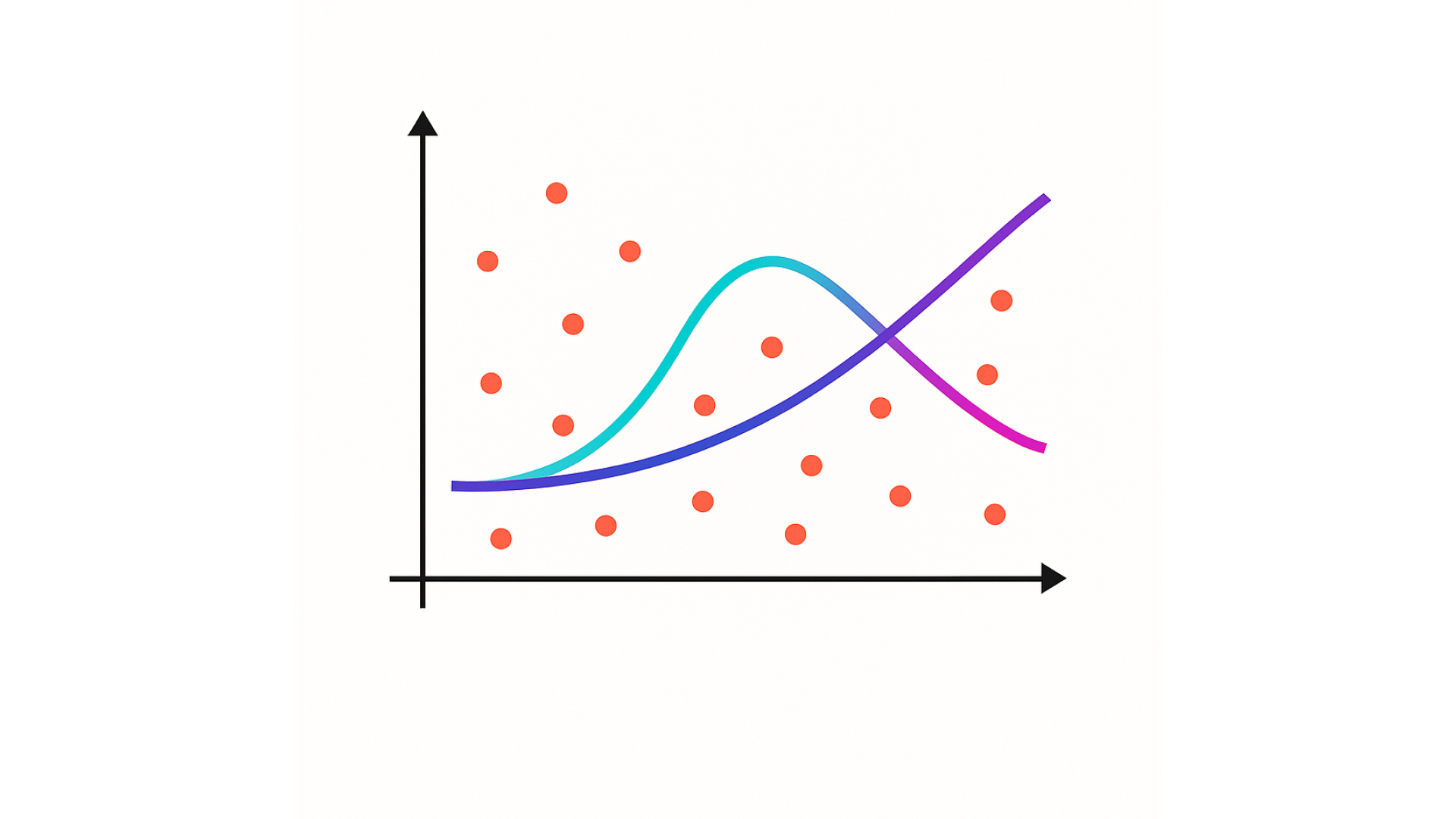

Underfitting occurs when a machine learning model is too simplistic to capture the underlying structure of the training data. It fails to learn essential patterns, resulting in high bias and limited representational capacity. Unlike overfitting, which memorizes noise, an underfit model does not even learn the signal. As a result, its predictions are consistently inaccurate across both training and unseen data, undermining its utility in real-world applications.
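The standard bias-variance decomposition for squared-error loss makes "high bias" precise. Assume targets $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, and write $\hat f_D$ for the model trained on dataset $D$. The expected error at a point $x$ then splits into three terms:

$$
\mathbb{E}_{D,\varepsilon}\big[(y - \hat f_D(x))^2\big]
= \underbrace{\big(f(x) - \mathbb{E}_D[\hat f_D(x)]\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}_D\big[(\hat f_D(x) - \mathbb{E}_D[\hat f_D(x)])^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

An underfit model is one whose squared-bias term dominates: even its average prediction over many training sets sits systematically away from $f(x)$.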

Underfitting is a core impediment to model performance and generalization. It reflects a mismatch between model complexity and data complexity, typically caused by inadequate architecture, poor feature representation, insufficient training time, or excessive regularization. A model that underfits will not generalize to new data because it never effectively captured the structure of the training distribution to begin with.

In the model development cycle, underfitting is most easily identified by poor performance on both training and validation sets. Learning curves show high, stagnant error across epochs, and diagnostic plots often reveal systematic residuals. While the challenge is easily detected, its correction requires rebalancing capacity, training duration, and data representation to ensure the model is sufficiently expressive without overfitting.
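A minimal sketch of these two symptoms, assuming synthetic data with a quadratic signal and a straight-line model (both chosen purely for illustration). Training and validation error both sit far above the noise floor, and the residuals follow a systematic sign pattern instead of looking random.

```python
import numpy as np

# Assumed synthetic data: a quadratic signal plus Gaussian noise (std 0.5).
rng = np.random.default_rng(0)
x = rng.uniform(-3, 3, size=200)
y = x**2 + rng.normal(scale=0.5, size=x.size)

# Simple hold-out split: 150 points for training, 50 for validation.
x_tr, y_tr = x[:150], y[:150]
x_va, y_va = x[150:], y[150:]

# Fit a straight line (degree-1 polynomial), which is too simple for this target.
coef = np.polyfit(x_tr, y_tr, deg=1)
pred_tr = np.polyval(coef, x_tr)
pred_va = np.polyval(coef, x_va)

def mse(a, b):
    """Mean squared error between two arrays."""
    return float(np.mean((a - b) ** 2))

train_mse = mse(y_tr, pred_tr)
val_mse = mse(y_va, pred_va)
noise_mse = 0.5 ** 2  # error floor implied by the noise we added

# Systematic residuals: the line over-predicts near x = 0 and under-predicts at the ends.
resid = y_tr - pred_tr
mean_resid_middle = float(resid[np.abs(x_tr) < 1.0].mean())
mean_resid_ends = float(resid[np.abs(x_tr) > 2.0].mean())

print(f"train MSE={train_mse:.2f}, val MSE={val_mse:.2f}, noise floor={noise_mse:.2f}")
print(f"mean residual for |x|<1: {mean_resid_middle:.2f}, for |x|>2: {mean_resid_ends:.2f}")
```

The split sizes and the `mse` helper are arbitrary choices for this example; any hold-out scheme would show the same pattern.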

The consequences of underfitting extend beyond model diagnostics. In real-world systems, they typically show up as:

- Missed patterns and relationships: Key associations in the data remain uncaptured, leading to models that cannot detect the underlying structure of the problem. This is especially damaging in structured data tasks like forecasting or classification, where relational features matter.

- Weak predictions in production: In domains like healthcare or finance, where predictive reliability is critical, underfit models fail to generalize and yield poor outcomes such as misdiagnoses, failed forecasts, or inaccurate risk assessments.

- Limited operational value: A model that cannot deliver reliable predictions offers no strategic or business advantage. It becomes a bottleneck rather than a tool for automation or insight.

Understanding underfitting is critical because it defines the minimum expressiveness a model must reach before it can be useful at all. Addressing it is a prerequisite for developing systems that can learn, generalize, and produce value in real deployment environments.

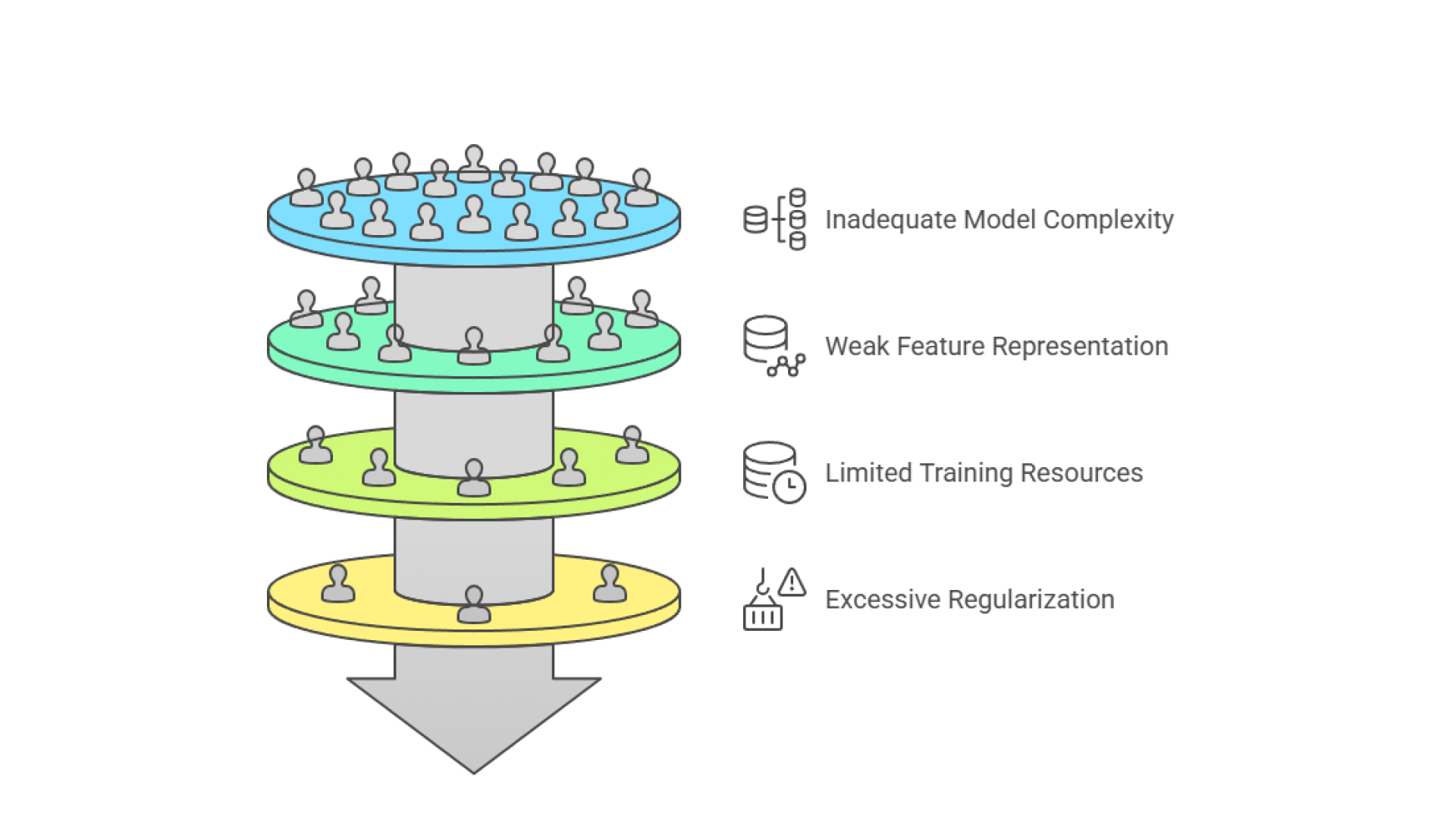

Causes of Underfitting

Several design and development factors contribute to this failure, each rooted in the mismatch between model expressiveness and task complexity.

Inadequate model complexity: The most direct cause of underfitting is choosing a model architecture that is too simplistic relative to the complexity of the problem. Linear models applied to non-linear tasks, shallow trees for high-dimensional classification, or basic neural networks for vision or speech tasks all fall into this category. These models impose strong structural assumptions, often favoring overly simplistic relationships that fail to reflect the data’s actual dynamics. Such bias constrains learning, producing models that generalize poorly and misrepresent the underlying patterns even on training data.
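To illustrate how too little capacity shows up, the sketch below fits polynomials of increasing degree to a sine-shaped target using NumPy's `polyfit`. The data, the degrees tried, and the `fit_and_score` helper are assumptions for this example. A constant (degree 0) or a straight line (degree 1) cannot bend to follow the curve, so both training and validation error stay high; a higher degree brings both down together.

```python
import numpy as np

# Assumed synthetic data: a sine-shaped target with mild noise (std 0.1).
rng = np.random.default_rng(1)
x = rng.uniform(-np.pi, np.pi, size=300)
y = np.sin(x) + rng.normal(scale=0.1, size=x.size)
x_tr, y_tr, x_va, y_va = x[:200], y[:200], x[200:], y[200:]

def fit_and_score(degree):
    """Fit a polynomial of the given degree; return (train MSE, validation MSE)."""
    coef = np.polyfit(x_tr, y_tr, deg=degree)
    train = np.mean((y_tr - np.polyval(coef, x_tr)) ** 2)
    val = np.mean((y_va - np.polyval(coef, x_va)) ** 2)
    return float(train), float(val)

# Degree 0 is a constant and degree 1 a straight line: both too rigid for a sine.
scores = {d: fit_and_score(d) for d in (0, 1, 3, 5)}
for d, (train, val) in scores.items():
    print(f"degree {d}: train MSE={train:.3f}, val MSE={val:.3f}")
```

The key diagnostic is that the low-degree models are bad on the training data itself, not only on the validation split.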

Weak or insufficient feature representation: Even with a suitable model architecture, underfitting can result from poor input representations. If the features lack explanatory power or fail to capture key variables, the model cannot learn the correct associations. Omitting interaction terms, ignoring hierarchical relationships, or relying on overly coarse attributes limits the signal available to the model. Without sufficiently rich or relevant features, prediction remains imprecise regardless of model depth.
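A small sketch of the missing-interaction case, assuming a synthetic target that depends only on the product of two inputs. A linear model given just `x1` and `x2` cannot represent that product, so even its training error stays near the variance of the target; adding the single engineered feature `x1 * x2` removes the underfitting without changing the model class.

```python
import numpy as np

# Assumed synthetic task: the target depends on the product of two inputs.
rng = np.random.default_rng(2)
n = 500
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)
y = 3.0 * x1 * x2 + rng.normal(scale=0.1, size=n)

def train_mse(features):
    """Ordinary least squares with an intercept column; returns the training MSE."""
    X = np.column_stack([np.ones(n)] + features)
    w, *_ = np.linalg.lstsq(X, y, rcond=None)
    return float(np.mean((y - X @ w) ** 2))

mse_raw = train_mse([x1, x2])              # main effects only
mse_inter = train_mse([x1, x2, x1 * x2])   # main effects plus the interaction term

print(f"without interaction: {mse_raw:.3f}, with interaction: {mse_inter:.3f}")
```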

Limited training time or data: Models also underfit when they are trained for too few iterations or on data that carries too little usable signal. Stopping before convergence leaves the parameters far from a good solution; for neural networks, this means backpropagation has not run long enough to update the weights meaningfully. Small or unrepresentative datasets can likewise fail to expose enough variation for the model to infer robust patterns. The learning curves differ by cause: when capacity is the bottleneck, training and validation error plateau early at a high level, whereas when training is simply cut short, training error is still falling at the point where it stops.
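The effect of stopping too early can be seen with plain full-batch gradient descent on a linear regression problem. The data, learning rate, and step counts below are assumptions chosen for illustration. After only a few steps the training loss is still high and still decreasing, the signature of insufficient training rather than insufficient capacity; run long enough, the same model fits the data down to the noise level.

```python
import numpy as np

# Assumed toy problem: y = X @ w_true + noise, fit by full-batch gradient descent on MSE.
rng = np.random.default_rng(3)
X = rng.normal(size=(400, 5))
w_true = np.array([2.0, -1.0, 0.5, 3.0, -2.0])
y = X @ w_true + rng.normal(scale=0.1, size=400)

def train(steps, lr=0.05):
    """Run `steps` gradient-descent updates from zero weights.

    Returns the training loss recorded before each update.
    """
    w = np.zeros(X.shape[1])
    history = []
    for _ in range(steps):
        residual = X @ w - y
        history.append(float(np.mean(residual ** 2)))
        grad = 2.0 * X.T @ residual / len(y)  # gradient of the MSE with respect to w
        w -= lr * grad
    return history

short_run = train(steps=5)    # stopped far too early
long_run = train(steps=500)   # run until the loss has converged
print(f"5 steps: loss={short_run[-1]:.3f}, still falling: {short_run[-1] < short_run[-2]}")
print(f"500 steps: loss={long_run[-1]:.4f}")
```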

Excessive regularization: Regularization is designed to prevent overfitting by penalizing complexity, but excessive use can lead to underfitting. Strong penalties on parameters can overly suppress model flexibility, especially in tasks requiring nuanced or layered representations. An overly aggressive L2 penalty shrinks all weights toward zero, and an overly aggressive L1 penalty drives many of them to exactly zero; either way, the model's response to its inputs flattens and it can no longer learn meaningful distinctions.
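A sketch of over-regularization using the closed-form ridge (L2-penalized least squares) solution on assumed synthetic data. As the penalty `lam` grows, the weight vector is pulled toward zero and training error climbs toward the variance of the target, even though a linear model is exactly the right model class for this data.

```python
import numpy as np

# Assumed synthetic data: exactly linear signal plus small noise.
rng = np.random.default_rng(4)
X = rng.normal(size=(300, 4))
w_true = np.array([4.0, -3.0, 2.0, 1.0])
y = X @ w_true + rng.normal(scale=0.2, size=300)

def ridge(X, y, lam):
    """Closed-form L2-regularized least squares: w = (X^T X + lam * I)^-1 X^T y."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)

results = {}
for lam in (0.0, 10.0, 1e5):
    w = ridge(X, y, lam)
    train_mse = float(np.mean((y - X @ w) ** 2))
    results[lam] = (float(np.linalg.norm(w)), train_mse)
    print(f"lam={lam:g}: weight norm={results[lam][0]:.3f}, train MSE={train_mse:.3f}")
```

The penalty values are arbitrary; the point is that training error alone rises as `lam` grows, which is exactly the underfitting signature.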

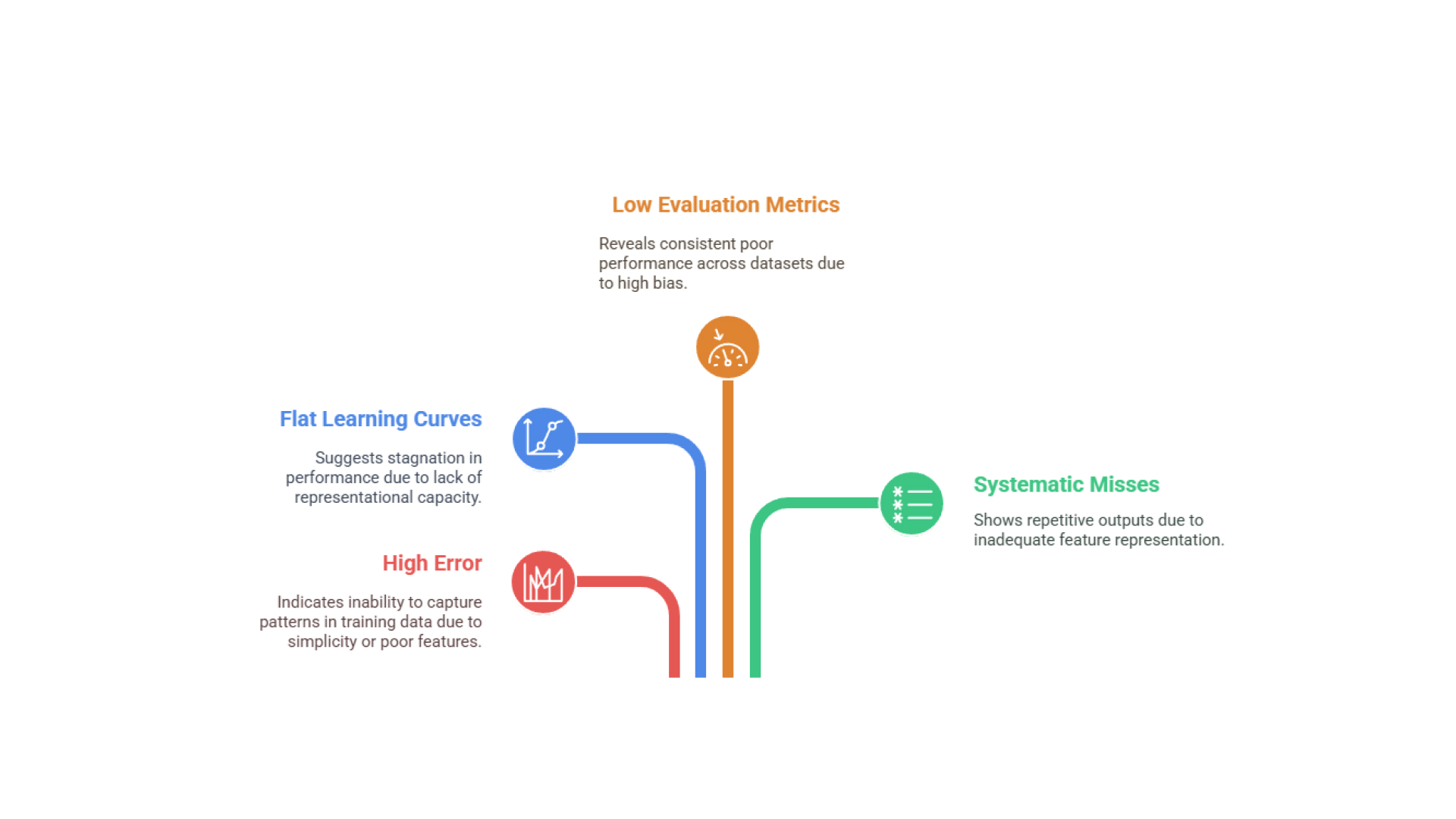

How to Identify Underfitting

Underfitting can be identified through distinct performance patterns that signal a model’s inability to learn from its training data. These signs typically emerge early in the development cycle and are consistent across datasets.

- High error on both training and validation sets indicates that the model is unable to capture even the patterns in its own training data. This typically reflects a model that is either too simplistic in architecture or operating with insufficient or poorly chosen features.

- Flat learning curves with persistently high loss suggest that the model’s performance stagnates early during training. When both training and validation loss plateau at high values, it signals that the model lacks the representational capacity or informative inputs necessary for effective learning.

- Low evaluation metrics across all datasets reveal that the model performs poorly regardless of which data it is evaluated on. This uniform underperformance is symptomatic of high bias and insufficient learning capacity: the model fails to approximate the target function.

- Systematic misses of obvious patterns emerge when the model produces repetitive or generic outputs across varied inputs. Such behavior points to an inability to internalize meaningful distinctions in the data, often due to inadequate feature representation, shallow architecture, or insufficient training time.
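These symptoms can be turned into rough automated checks. The function below is a hypothetical heuristic, not a standard API: its thresholds (`baseline_ratio`, `gap_ratio`, `spread_ratio`) are illustrative assumptions that would need tuning for a real task, and the usage example applies it to a straight line fit to assumed quadratic data.

```python
import numpy as np

def underfitting_signals(y_train, pred_train, y_val, pred_val,
                         baseline_ratio=0.8, gap_ratio=0.2, spread_ratio=0.3):
    """Hypothetical heuristic checks for common underfitting symptoms.

    All three thresholds are illustrative assumptions, not established defaults.
    """
    y_train, pred_train = np.asarray(y_train, float), np.asarray(pred_train, float)
    y_val, pred_val = np.asarray(y_val, float), np.asarray(pred_val, float)
    train_mse = np.mean((y_train - pred_train) ** 2)
    val_mse = np.mean((y_val - pred_val) ** 2)
    # MSE of a trivial model that always predicts the training mean.
    baseline_mse = np.mean((y_train - y_train.mean()) ** 2)
    return {
        # Training error barely beats the trivial baseline.
        "high_train_error": bool(train_mse > baseline_ratio * baseline_mse),
        # Validation error is not much worse than training error (no overfitting gap).
        "small_gap": bool(val_mse - train_mse < gap_ratio * train_mse),
        # Predictions vary far less than the targets: generic, repetitive outputs.
        "generic_outputs": bool(pred_train.std() < spread_ratio * y_train.std()),
    }

# Usage on assumed data: a straight line fit to a quadratic target.
rng = np.random.default_rng(5)
x = rng.uniform(-3, 3, size=2000)
y = x**2 + rng.normal(scale=0.5, size=x.size)
coef = np.polyfit(x[:1500], y[:1500], deg=1)
signals = underfitting_signals(y[:1500], np.polyval(coef, x[:1500]),
                               y[1500:], np.polyval(coef, x[1500:]))
print(signals)
```

Comparing against a trivial mean predictor gives the "high error" check a scale, so the same thresholds can be applied across targets with different units.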

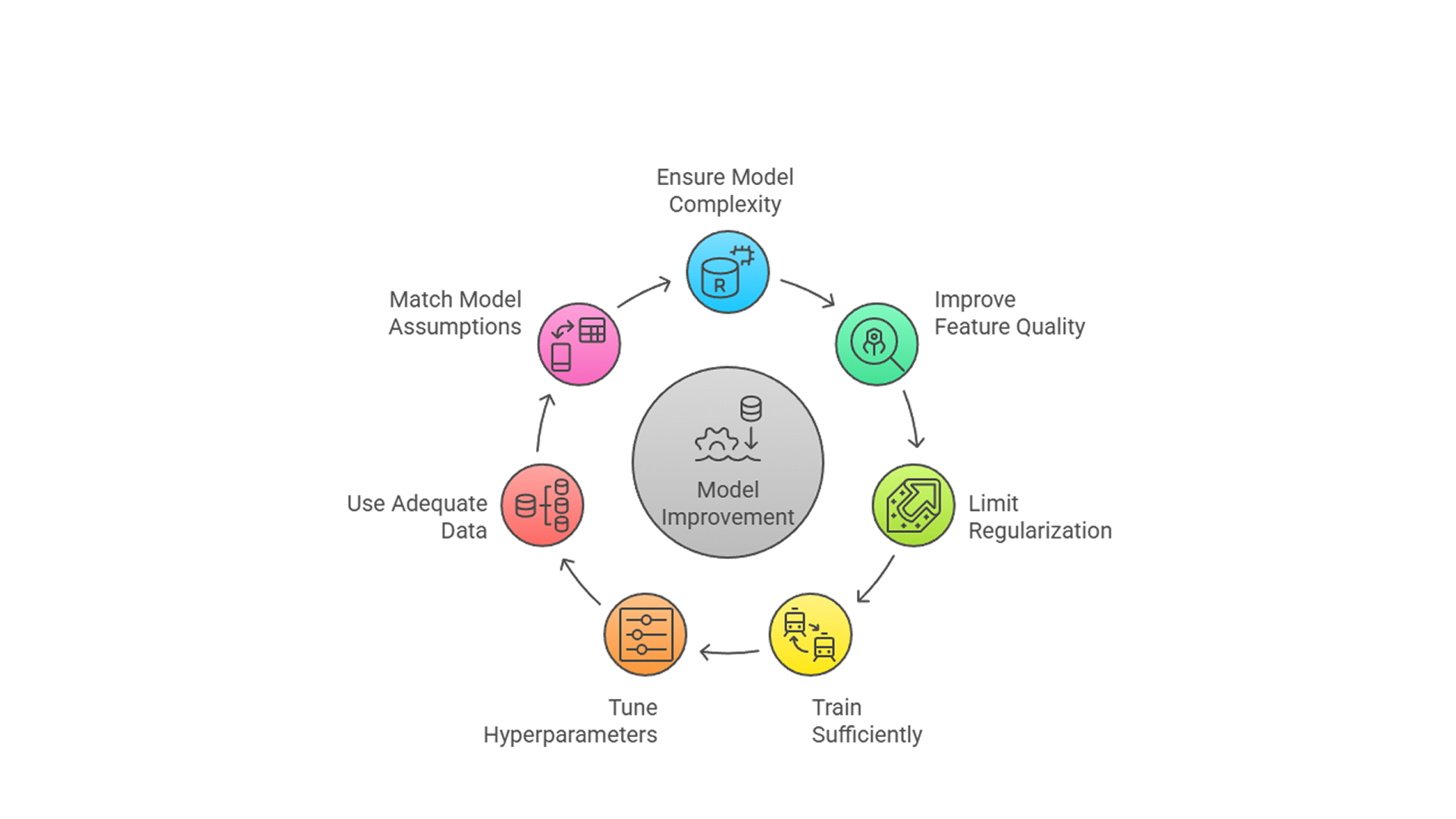

How to Prevent Underfitting

Preventing underfitting centers on ensuring the model has sufficient capacity and information to learn from data effectively.

- Ensure adequate model complexity: Use architectures suited to task complexity; avoid models that are too shallow or under-parameterized.

- Improve feature quality: Add informative, domain-relevant features; use feature engineering to reveal latent structure.

- Limit regularization: Avoid overly strong penalties that restrict learning; tune to preserve model flexibility.

- Train sufficiently: Extend training if loss remains high; monitor learning curves to avoid premature stopping.

- Tune hyperparameters: Adjust learning rate, depth, batch size, and regularization strength so the model can fit the training data well without overfitting. Automated machine learning (AutoML) tools can streamline this process by searching the configuration space automatically.

- Use adequate, clean data: Ensure the dataset is large, diverse, and well-preprocessed to capture key patterns.

- Match model assumptions to data: Align model type with data structure; switch models if assumptions don't hold.
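One simple way to tune regularization strength is a hold-out grid search, shown here as a stand-in for the more elaborate searches AutoML tools run. The sketch assumes a deliberately flexible degree-9 polynomial basis, synthetic sine data, and an arbitrary penalty grid. The largest penalty flattens the model into underfitting, and validation error is what identifies a setting that keeps enough flexibility.

```python
import numpy as np

# Assumed synthetic data: a non-linear target with mild noise.
rng = np.random.default_rng(6)
x = rng.uniform(-1, 1, size=60)
y = np.sin(3 * x) + rng.normal(scale=0.1, size=x.size)
x_tr, y_tr, x_va, y_va = x[:40], y[:40], x[40:], y[40:]

def poly_features(x, degree=9):
    """Columns x^0 .. x^degree: a deliberately flexible basis."""
    return np.vander(x, degree + 1, increasing=True)

def ridge(X, y, lam):
    """Closed-form L2-regularized least squares."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)

X_tr, X_va = poly_features(x_tr), poly_features(x_va)
grid = [1e-6, 1e-4, 1e-2, 1.0, 100.0, 1e4]  # arbitrary candidate penalties

# Score each penalty on the held-out split and keep the best one.
val_mse = {}
for lam in grid:
    w = ridge(X_tr, y_tr, lam)
    val_mse[lam] = float(np.mean((y_va - X_va @ w) ** 2))
best_lam = min(val_mse, key=val_mse.get)
print(f"best lam={best_lam:g}, val MSE={val_mse[best_lam]:.4f}")
print(f"largest lam={max(grid):g}, val MSE={val_mse[max(grid)]:.4f}")
```

In practice, k-fold cross-validation would give a less noisy estimate than a single 20-point hold-out split.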

Conclusion

Underfitting is a problem that arises when a model lacks the capacity or information needed to learn from data. It prevents the model from capturing essential structure, leading to uniformly poor performance and missed opportunities for meaningful prediction. While easily detectable, its presence can significantly limit the effectiveness of machine learning systems. Fortunately, underfitting can be addressed through informed adjustments to model design, feature representation, and training configuration. Recognizing and resolving it is not just a technical fix; it is foundational to building models that learn effectively and deliver real-world value, especially when integrated within robust agent architecture frameworks.