What is Working Memory?

Team Thinkstack

June 11, 2025


Working memory refers to the capacity of a system, biological or artificial, to temporarily hold and manipulate information for goal-directed processing. In both neuroscience and artificial intelligence, it plays a central role in enabling tasks that require sustained attention, sequential reasoning, context tracking, and flexible adaptation. While biological working memory is studied through neural activity in regions such as the prefrontal cortex, its computational analogs are implemented via architectures that can maintain and update internal representations across time steps.

In artificial systems, working memory is not a single mechanism but a functional outcome of architectural design and training dynamics. Models ranging from recurrent networks to transformer-based language models retain and manipulate information to varying degrees, which gives them capabilities that mirror human cognitive functions. Robust working memory mechanisms are essential for systems that must perform sustained reasoning, adaptive planning, and coherent interaction across time.

Applications and implications include:

- Conversational coherence: Large Language Models (LLMs) like GPT-4 exhibit the emergent ability to maintain conversational context across multiple turns, enabling coherent dialogue and topic continuity.

- Sequential decision-making: Recurrent neural networks trained on spatial or action-based tasks demonstrate memory-driven planning behavior, an essential trait in autonomous AI agents that must operate over temporally extended environments.

- Interference resistance: In environments with distractions or irrelevant stimuli, models with robust working memory, such as Transient RNNs (TRNNs), outperform simpler architectures by maintaining task-relevant information under noise.

- Multi-item retention: Models that encode multiple sequential elements, as in direction-following or maze-navigation tasks, approximate human-like memory constraints and support more complex reasoning chains.

- Long-context NLP tasks: LLMs use in-context learning to combine prompt-specific information with pre-trained knowledge, enabling dynamic adjustment to user input, document structure, or stylistic constraints.

How Working Memory is Implemented and Measured in Neural Models

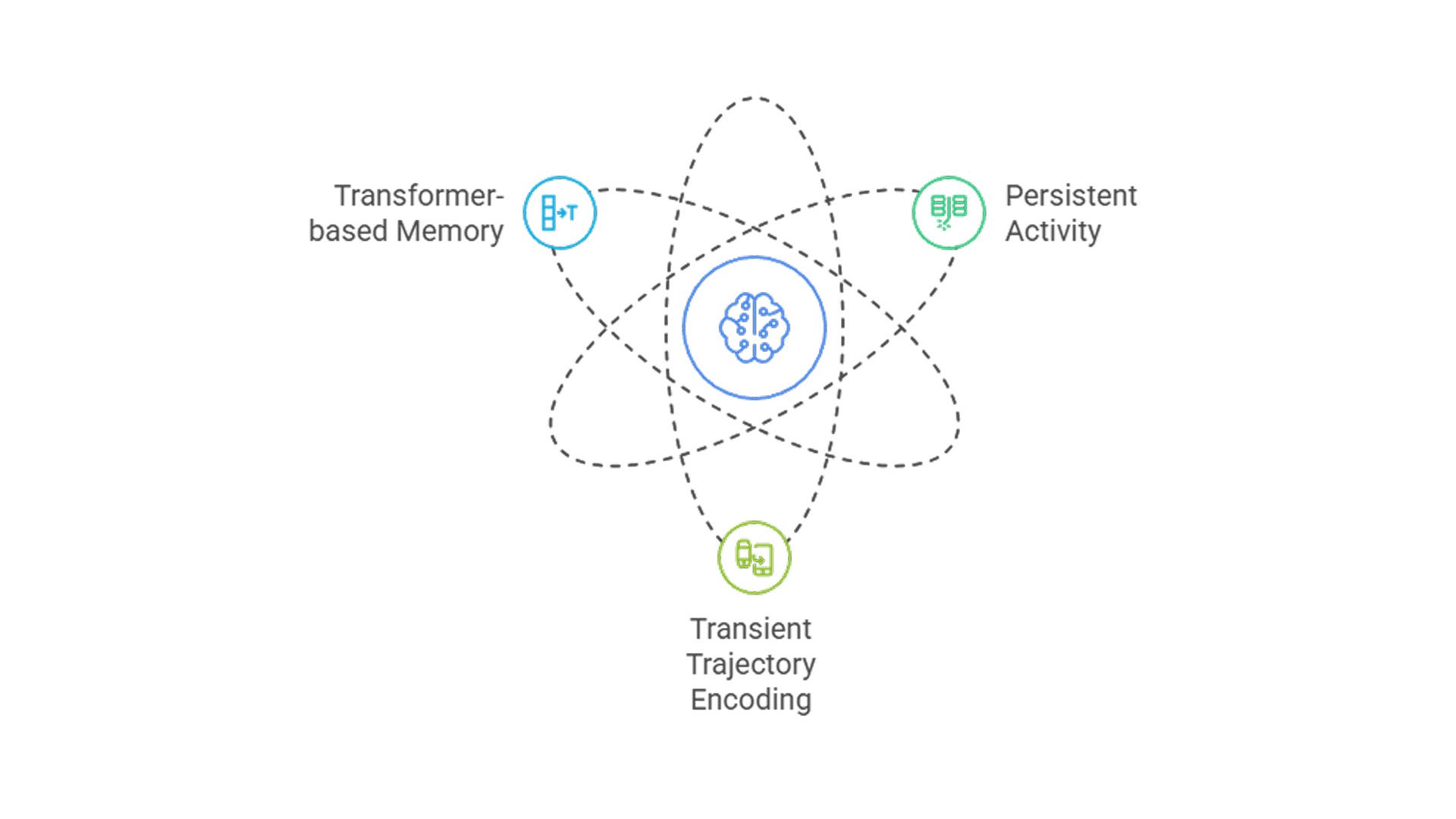

Persistent vs. transient dynamics

Artificial systems implement working memory using different strategies that reflect ideas from neuroscience. The most traditional approach uses persistent activity, where certain neurons (or units) stay active during a delay to hold information. This is common in basic RNNs and resembles bump attractor models, where interconnected units sustain localized activity to represent a memory.

An alternative is transient trajectory encoding, where memory is stored in how patterns of activity evolve over time rather than staying constant. Transient RNNs (TRNNs) are designed to support this by introducing:

- Self-inhibition, which dampens prolonged firing;

- Sparse recurrent connections, which avoid rigid loops;

- Hierarchical structure, which separates processing into functional stages.

This leads to more dynamic and flexible memory traces, matching observations from biological systems. Autoencoders are not typically used for temporal memory, but they contribute efficient encoding and compression of input data, which supports memory-efficient representations in broader neural systems.
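The contrast between the two strategies can be illustrated with a toy sketch. This is not a TRNN or a bump attractor model; it is a minimal illustration under assumed dynamics. The function names, the self-weight `w_self`, and the rotation angle `theta` are all hypothetical. The first function holds a value in one unit through self-excitation, while the second stores a stimulus angle in a two-unit state that rotates at every step, so no single unit stays constant but the stimulus can still be decoded.

```python
import math

def persistent_trace(stimulus, steps, w_self=1.0):
    """One unit with self-weight w_self; activity is held when w_self == 1."""
    h = stimulus
    trace = [h]
    for _ in range(steps):
        h = w_self * h  # no external input during the delay
        trace.append(h)
    return trace

def rotate(state, theta):
    """Rotate a 2-D state vector by theta radians."""
    x, y = state
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y, s * x + c * y)

def transient_trace(stimulus_angle, steps, theta=0.3):
    """Two units whose joint state rotates each step (assumed toy dynamics)."""
    state = (math.cos(stimulus_angle), math.sin(stimulus_angle))
    trace = [state]
    for _ in range(steps):
        state = rotate(state, theta)
        trace.append(state)
    return trace

def decode_transient(state, steps, theta=0.3):
    """Recover the stimulus angle by undoing the known rotation."""
    x, y = rotate(state, -theta * steps)
    return math.atan2(y, x)

held = persistent_trace(0.8, steps=5)                 # stays at 0.8
decayed = persistent_trace(0.8, steps=5, w_self=0.5)  # shrinks toward 0
trajectory = transient_trace(1.0, steps=10)
recovered = decode_transient(trajectory[-1], steps=10)
```

In the persistent case the memory is read directly from the unit's activity; in the transient case it is read from where the state sits along a known trajectory.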

Transformer-based memory

Transformers take a different approach. They don’t have explicit delay dynamics or persistent units. Instead, memory emerges from the self-attention mechanism, which allows the model to reweight and retrieve relevant past tokens based on current input. This supports memory-like behavior such as context tracking and in-context learning, even though the architecture lacks a dedicated memory module.
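A minimal sketch of this retrieval step, using single-head scaled dot-product attention in plain Python, is shown below. It assumes queries, keys, and values are already computed; real transformers add learned projections, multiple heads, and masking. The function names and the example vectors are illustrative only.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Weight past tokens by query-key similarity and mix their values."""
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
        for key in keys
    ]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Three past tokens; the current query is most similar to the first key.
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [-10.0, 0.0]]
weights, output = attend([5.0, 0.0], keys, values)
```

Nothing is stored between steps here: the "memory" is the set of past keys and values still in the context, and the weights decide which of them the current token reads from.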

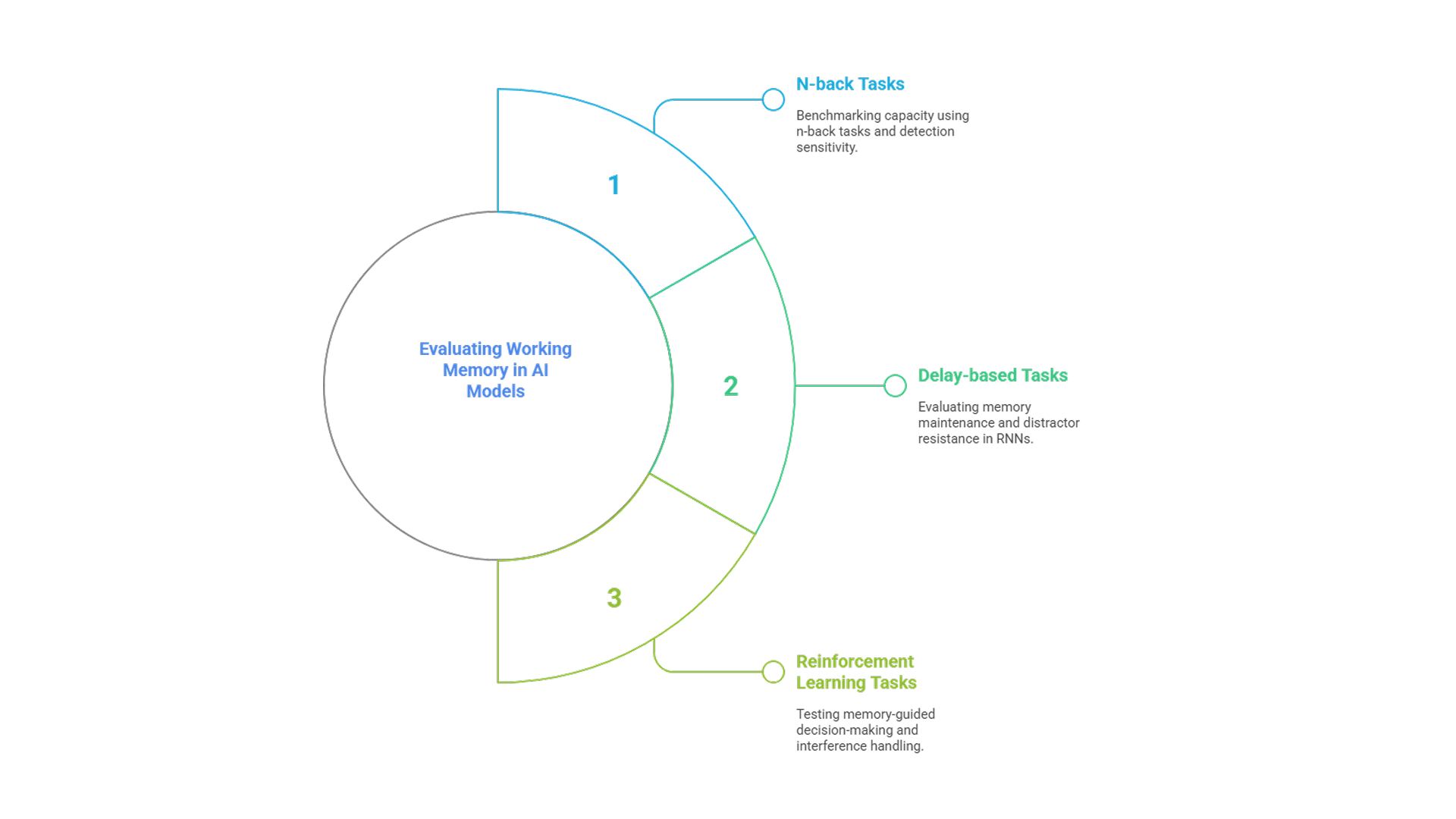

Evaluating working memory in AI models

N-back tasks for capacity benchmarking

To quantify retention limits, models are tested on n-back tasks, where the correct response depends on recognizing whether the current input matches one received n steps prior. This requires continuous memory updating and selective forgetting.

- Detection sensitivity (d') is the primary metric; capacity is defined as the value of n at which d' falls below 1.

- Performance across verbal and spatial variants, with or without noise and feedback, reveals susceptibility to interference and effects of reasoning strategies (e.g., Chain-of-Thought prompting).
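The scoring described above can be sketched as follows. This is a hedged illustration, not a specific benchmark's implementation: the helper names are hypothetical, the log-linear correction (adding 0.5 to each cell) is an assumption used to keep rates away from 0 and 1, and the d' values passed to `capacity` are made-up numbers for demonstration.

```python
from statistics import NormalDist

def nback_targets(sequence, n):
    """True where an item matches the one n steps earlier."""
    return [i >= n and sequence[i] == sequence[i - n] for i in range(len(sequence))]

def count_outcomes(responses, targets, n):
    """Tally hits, misses, false alarms, correct rejections after the first n items."""
    hits = misses = false_alarms = correct_rejections = 0
    for resp, targ in list(zip(responses, targets))[n:]:
        if targ and resp:
            hits += 1
        elif targ:
            misses += 1
        elif resp:
            false_alarms += 1
        else:
            correct_rejections += 1
    return hits, misses, false_alarms, correct_rejections

def d_prime(hits, misses, false_alarms, correct_rejections):
    """d' = z(hit rate) - z(false-alarm rate), with an assumed log-linear correction."""
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)

def capacity(d_prime_by_n, threshold=1.0):
    """Smallest n whose d' falls below the threshold, or None if none does."""
    for n in sorted(d_prime_by_n):
        if d_prime_by_n[n] < threshold:
            return n
    return None

targets = nback_targets(list("ABACA"), n=2)
outcomes = count_outcomes([False, False, True, True, False], targets, n=2)
estimated = capacity({1: 3.2, 2: 1.8, 3: 0.7})  # hypothetical d' values
```

A d' of 0 means the model's "match" responses are unrelated to true matches, so the threshold of 1 marks the point where detection is only weakly better than guessing.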

Delay-based tasks in RNNs

Recurrent models are assessed using delayed-response tasks, such as the Oculomotor Delayed Response (ODR) and its distractor variant (ODRD). These tasks measure the model’s ability to hold a stimulus in memory and act upon it after a delay, testing both maintenance and distractor resistance.

- Output accuracy is evaluated by angular deviation from target locations.

- Activity-behavior correlations quantify how unit firing rates predict behavioral outputs.

- Fano factor analysis of unit variability reflects noise sensitivity and internal drift.
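Two of these measures are simple enough to sketch directly. The snippet below assumes target and response directions are given in degrees and that unit activity is summarized as spike counts per trial; the function names and sample values are illustrative, not taken from a particular study.

```python
from statistics import mean, variance

def angular_error_deg(predicted_deg, target_deg):
    """Smallest absolute angle between two directions, in degrees (0 to 180)."""
    diff = (predicted_deg - target_deg + 180.0) % 360.0 - 180.0
    return abs(diff)

def fano_factor(spike_counts):
    """Sample variance of counts across trials divided by their mean."""
    m = mean(spike_counts)
    if m == 0:
        raise ValueError("Fano factor is undefined when the mean count is zero")
    return variance(spike_counts) / m

# Wrap-around case: 350 degrees and 10 degrees are only 20 degrees apart.
error = angular_error_deg(350.0, 10.0)

# Hypothetical spike counts for one unit across three trials.
ff = fano_factor([2, 4, 6])
```

The wrap-around handling matters for ODR-style tasks because targets lie on a circle; a naive subtraction would report 340 degrees for the example above. A Fano factor near 1 is what a Poisson process would give, so values well above or below it indicate more or less trial-to-trial variability than that baseline.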

Reinforcement learning tasks for robustness and capacity

Working memory is further tested through tasks requiring action selection based on temporally displaced cues:

- Direction-following and maze navigation evaluate memory-guided decision-making.

- Multi-item tasks test memory span and interference handling.

- Reward metrics offer a functional measure of memory utility across episodes.
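As a rough illustration of how reward can serve as a memory measure, the toy environment below shows a cue, inserts a blank delay, and then rewards the agent only if its action matches the cue. Everything here is a hypothetical sketch: the environment, the two hand-written policies, and the parameter values are assumptions chosen for clarity, and no learning takes place.

```python
import random

ACTIONS = ["up", "down", "left", "right"]

class MemoryPolicy:
    """Stores the cue and ignores blank observations during the delay."""
    def reset(self):
        self.stored = None
    def observe(self, obs):
        if obs is not None:
            self.stored = obs
    def act(self, rng):
        return self.stored

class MemorylessPolicy:
    """Keeps only the latest observation, so the cue is lost during the delay."""
    def reset(self):
        self.last = None
    def observe(self, obs):
        self.last = obs
    def act(self, rng):
        return self.last if self.last is not None else rng.choice(ACTIONS)

def run_episode(policy, rng, delay=5):
    """Show a cue, wait `delay` blank steps, then reward a matching action."""
    cue = rng.choice(ACTIONS)
    policy.reset()
    policy.observe(cue)
    for _ in range(delay):
        policy.observe(None)
    return 1.0 if policy.act(rng) == cue else 0.0

def mean_reward(policy, episodes=200, seed=0):
    """Average reward over a fixed number of seeded episodes."""
    rng = random.Random(seed)
    return sum(run_episode(policy, rng) for _ in range(episodes)) / episodes

with_memory = mean_reward(MemoryPolicy())
without_memory = mean_reward(MemorylessPolicy())
```

The gap between the two averages is the functional signature of memory: the policy that retains the cue is always rewarded, while the one that loses it can only guess among the four actions.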

Conclusion

Working memory is a core computational function that underpins context-sensitive behavior in both biological and artificial systems. It enables models to maintain relevant information across time, supporting coherence, planning, and resistance to interference. In neural models, these abilities depend heavily on training with backpropagation, which lets gradients adjust memory-related components.

While current implementations reproduce key aspects of working memory, they remain constrained by capacity limits, representational sparsity, and incomplete alignment with biological variability. These challenges reflect both architectural constraints and evaluation gaps.

Addressing them requires integrating structural modifications, benchmarking strategies, and biologically grounded hypotheses. Doing so not only clarifies the computational basis of memory maintenance but also extends the design space for more capable and interpretable models.