What Is the XOR Problem?

Team Thinkstack

June 11, 2025


The XOR (exclusive OR) function maps two binary inputs to an output of 1 if the inputs differ, and 0 if they are the same. This simple rule exposes a foundational limitation in early neural network architectures and marks a turning point in the field’s evolution. When plotted in two-dimensional input space, the outputs of the XOR function cannot be separated by a single straight line, unlike functions such as AND or OR. This property, known as non-linear separability, places XOR outside the representational capacity of a single-layer perceptron.

This limitation was formalized in 1969 by Marvin Minsky and Seymour Papert in their book Perceptrons, which showed that a single-layer perceptron cannot compute XOR. The implication was not just a critique of a specific architecture but a challenge to the broader viability of neural network research at the time. Without a mechanism to learn non-linear mappings, the perceptron was shown to be inadequate for even modestly structured problems. The result contributed to a significant decline in interest and funding for neural networks, a period retrospectively associated with the first “AI winter.”

The importance of the XOR problem is not in its complexity but in its structure. It acts as a minimal example of the need for deeper architectures and more expressive transformations. Solving XOR requires an internal representation that reconfigures the input space, an operation that cannot be achieved through linear mappings alone. This realization laid the groundwork for multi-layer neural networks, where hidden layers equipped with non-linear activation functions enable the construction of decision boundaries that single-layer models cannot reach.

In retrospect, XOR did not expose a failure of neural computation but a boundary, one that could be crossed through architectural depth and algorithmic advances. The problem became a catalyst for the development of backpropagation and multi-layer perceptrons, anchoring concepts that now define modern deep learning. Its pedagogical value persists: it remains the clearest illustration of why non-linear problems demand non-linear models, and why depth in architecture is more than a matter of scale. It is a precondition for capability.

Why Single-Layer Perceptrons Cannot Solve XOR

A single-layer perceptron computes its output by applying a weighted sum to its inputs, followed by a thresholding activation. This structure can classify inputs that are linearly separable, where a single line (or hyperplane) can divide the input space into distinct classes. Functions like AND and OR satisfy this condition and are successfully modeled by such networks.
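As a minimal sketch of the weighted-sum-plus-threshold rule described above, the snippet below models AND and OR with a single unit each. The weights and biases are hand-picked for illustration (many other values work), and the convention that the unit fires when the sum is strictly greater than zero is an assumption, not something fixed by the text.

```python
import numpy as np

def perceptron(x, w, b):
    """Single-layer perceptron: weighted sum followed by a hard threshold."""
    return int(np.dot(w, x) + b > 0)

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]

# Illustrative, hand-picked parameters (assumptions, not learned values).
and_gate = {"w": np.array([1.0, 1.0]), "b": -1.5}  # fires only when both inputs are 1
or_gate = {"w": np.array([1.0, 1.0]), "b": -0.5}   # fires when at least one input is 1

and_out = [perceptron(x, **and_gate) for x in inputs]
or_out = [perceptron(x, **or_gate) for x in inputs]

print(and_out)  # [0, 0, 0, 1]
print(or_out)   # [0, 1, 1, 1]
```

In both cases the line `x1 + x2 = -b` separates the inputs that map to 1 from those that map to 0, which is what linear separability means here.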

XOR does not. The XOR function produces outputs that cannot be separated by a single linear boundary. This failure is not due to training or optimization but arises from the perceptron’s structural limitation: it cannot transform inputs or construct intermediate representations. With only direct input-to-output connections, it cannot represent the conditional structure required for XOR.
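A short derivation makes the impossibility concrete. Assume, purely as notation for this sketch, a perceptron with weights `w_1`, `w_2` and bias `b` that outputs 1 exactly when `w_1 x_1 + w_2 x_2 + b > 0`. Reproducing XOR would require all four of the following conditions at once:

```latex
\begin{aligned}
(0,0) \mapsto 0 &: \quad b \le 0 \\
(0,1) \mapsto 1 &: \quad w_2 + b > 0 \\
(1,0) \mapsto 1 &: \quad w_1 + b > 0 \\
(1,1) \mapsto 0 &: \quad w_1 + w_2 + b \le 0
\end{aligned}
```

Adding the second and third conditions gives `w_1 + w_2 + 2b > 0`, so `w_1 + w_2 + b > -b`. Since the first condition says `-b >= 0`, this forces `w_1 + w_2 + b > 0`, which contradicts the fourth. No choice of weights and bias satisfies all four, regardless of how the perceptron is trained.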

This reveals a broader limitation: tasks involving non-linear decision boundaries cannot be solved using single-layer architectures alone. To address such problems, the network must be extended to include one or more hidden layers that enable transformations of the input space.

Key structural limitations:

- Only models linearly separable problems

- Fails on XOR due to lack of intermediate feature transformations

- No capacity for combining features in a non-linear way

- Requires additional layers to represent complex mappings

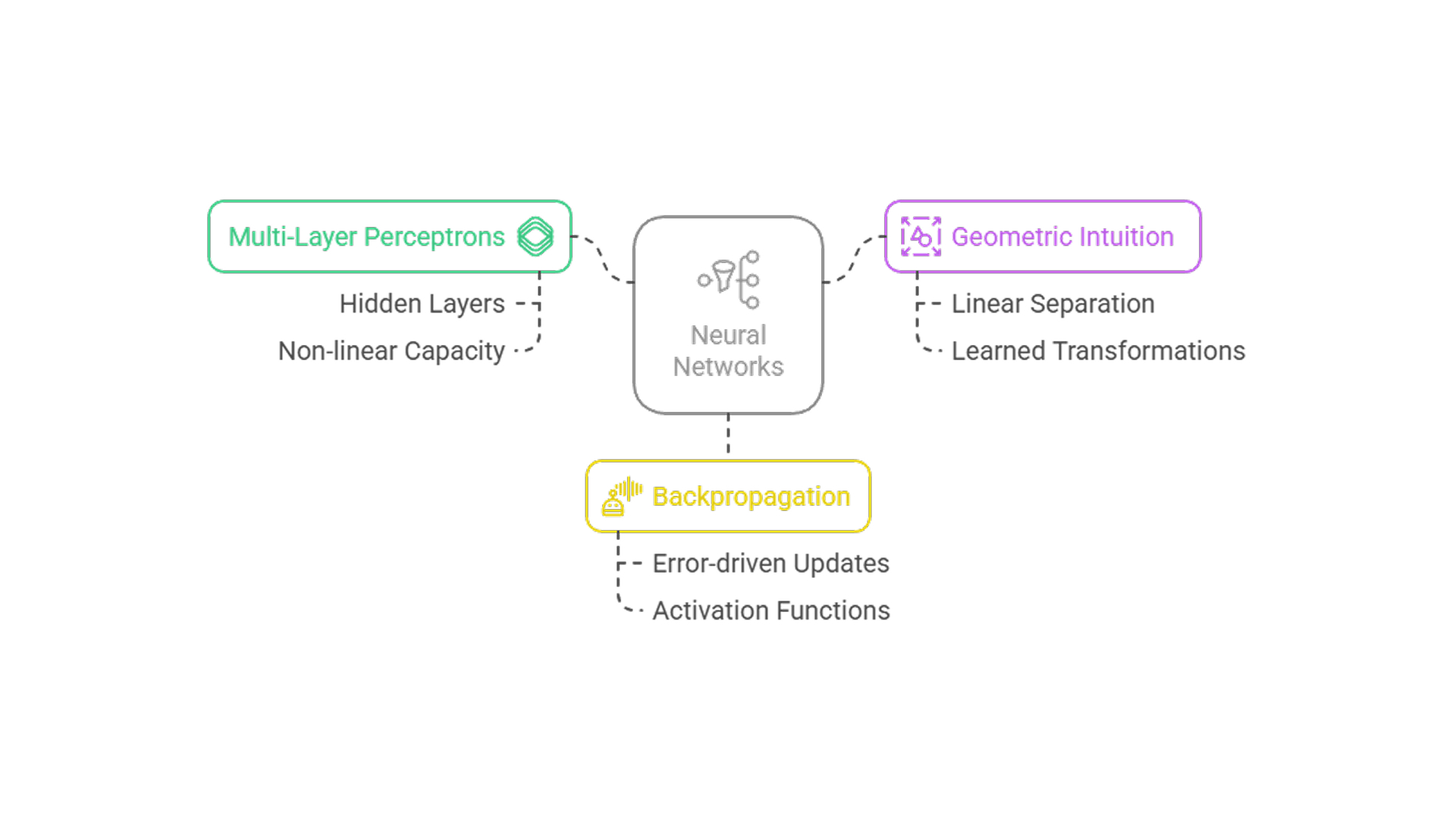

How Neural Networks Solve the XOR Problem

Solving XOR requires a model that can go beyond linear separation. Multi-Layer Perceptrons (MLPs) provide this capability by introducing depth into the network, specifically, one or more hidden layers. These additions allow the network to build intermediate representations that restructure the input space, making non-linear patterns learnable.

Multi-layer perceptrons and non-linear capacity

An MLP places one or more hidden layers between input and output. Three points explain why this is enough for XOR:

- Non-linear decision boundaries emerge in MLPs through transformations in hidden layers, allowing them to solve problems like XOR that single-layer perceptrons cannot.

- Activation functions such as sigmoid or ReLU introduce essential non-linearity; without them, multi-layer networks would collapse into linear models.

- A minimal architecture for XOR has two inputs, one hidden layer with two units, and one output unit, which is sufficient to classify all four XOR input combinations correctly.
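The point about activation functions can be checked numerically. The sketch below stacks two layers with no activation between them and compares the result to a single linear layer whose weights are the product of the two. The layer sizes and random weights are arbitrary assumptions chosen only for the demonstration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Two stacked layers WITHOUT any non-linear activation (arbitrary sizes).
W1, b1 = rng.normal(size=(2, 3)), rng.normal(size=3)
W2, b2 = rng.normal(size=(3, 1)), rng.normal(size=1)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)

# Output of the two-layer linear network.
two_layer = (X @ W1 + b1) @ W2 + b2

# Equivalent single layer: W = W1 W2, b = b1 W2 + b2.
W = W1 @ W2
b = b1 @ W2 + b2
one_layer = X @ W + b

collapsed = np.allclose(two_layer, one_layer)
print(collapsed)  # True
```

Because the stacked network is just another linear map, it inherits the single-layer perceptron's inability to represent XOR. The non-linearity between layers is what breaks this equivalence.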

Geometric and representational intuition

The hidden layer’s role is to reshape the input space. In the original input space, XOR’s output classes are not linearly separable. The hidden layer transforms this space into a new representation where a linear separation becomes possible.

This transformation is not hard-coded. It is learned through training. The network automatically discovers internal combinations of the input variables that expose the underlying class structure. The result is a reconfiguration of the input data that permits linear separation in the transformed space, which the output layer then classifies.
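Although the network learns this transformation in practice, a hand-wired version makes the geometry easy to inspect. The sketch below assumes step activations and hand-picked weights (illustrative values, not the result of training): one hidden unit behaves like OR, the other like AND, and the output unit draws a single line in the hidden space.

```python
import numpy as np

def step(z):
    """Hard threshold: 1 where z > 0, else 0."""
    return (z > 0).astype(int)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])

# Hidden layer (hand-picked, illustrative): column 0 acts like OR, column 1 like AND.
W1 = np.array([[1.0, 1.0],
               [1.0, 1.0]])
b1 = np.array([-0.5, -1.5])

# Output layer: fires when the OR unit is on and the AND unit is off.
W2 = np.array([1.0, -1.0])
b2 = -0.5

H = step(X @ W1 + b1)     # hidden representation of each input
out = step(H @ W2 + b2)   # final prediction

print(H.tolist())    # [[0, 0], [1, 0], [1, 0], [1, 1]]
print(out.tolist())  # [0, 1, 1, 0]
```

In the hidden space the two inputs labelled 1 both land on the point (1, 0), while the inputs labelled 0 land on (0, 0) and (1, 1). The line `h1 - h2 = 0.5` separates them, so the output unit only has to solve a linearly separable problem.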

Backpropagation and weight learning

For the network to perform correctly, it must learn suitable weights and biases. Choosing these values by hand is feasible for a toy problem like XOR, but it does not scale to larger networks or to problems whose structure is not known in advance. Training is therefore handled through backpropagation, which computes the gradients used by a gradient-based optimization method.

- Error-driven updates adjust network parameters by propagating the output error backward, using gradients computed at each layer to minimize prediction error.

- Differentiability matters because backpropagation relies on the derivative of each activation; sigmoid is differentiable everywhere, and ReLU is differentiable everywhere except at zero, which is sufficient in practice.

- Repeated optimization through multiple training epochs allows the model to iteratively refine weights and improve its input-output mapping.

- Hyperparameters such as learning rate, batch size, and the number of epochs influence how effectively the model converges during training.
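The training loop described above can be sketched in a few lines of NumPy. Everything specific here is an assumption made for illustration: sigmoid activations, a cross-entropy loss, four hidden units (slightly more than the minimal two, which tends to make training less sensitive to initialization), full-batch gradient descent, and the particular learning rate, epoch count, and seed. The function names are hypothetical, not part of any library.

```python
import numpy as np

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(params, X):
    """Forward pass: returns hidden activations and output probabilities."""
    W1, b1, W2, b2 = params
    h = sigmoid(X @ W1 + b1)
    out = sigmoid(h @ W2 + b2)
    return h, out

def loss_fn(params, X, y):
    """Mean binary cross-entropy over the batch."""
    _, out = forward(params, X)
    return float(np.mean(-(y * np.log(out) + (1 - y) * np.log(1 - out))))

def gradients(params, X, y):
    """Backpropagation: push the output error back through each layer."""
    W1, b1, W2, b2 = params
    h, out = forward(params, X)
    n = X.shape[0]
    d_out = (out - y) / n                 # gradient at the output pre-activation
    dW2 = h.T @ d_out
    db2 = d_out.sum(axis=0)
    d_h = (d_out @ W2.T) * h * (1 - h)    # chain rule through the hidden sigmoid
    dW1 = X.T @ d_h
    db1 = d_h.sum(axis=0)
    return [dW1, db1, dW2, db2]

def train(hidden=4, lr=0.5, epochs=5000, seed=0):
    """Full-batch gradient descent; returns final parameters and loss history."""
    rng = np.random.default_rng(seed)
    params = [rng.normal(0, 1, (2, hidden)), np.zeros(hidden),
              rng.normal(0, 1, (hidden, 1)), np.zeros(1)]
    history = []
    for _ in range(epochs):
        history.append(loss_fn(params, X, y))
        grads = gradients(params, X, y)
        params = [p - lr * g for p, g in zip(params, grads)]
    return params, history

params, history = train()
_, pred = forward(params, X)
print("first and last loss:", history[0], history[-1])
print("predictions:", pred.round(3).ravel())
```

Whether a given run reaches the correct XOR outputs depends on the initialization and hyperparameters, so it is worth inspecting the printed loss and predictions rather than assuming convergence.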

Conclusion

The XOR problem remains a foundational example in neural network research. It illustrates the representational limitations of single-layer perceptrons and the need for deeper architectures to handle non-linearly separable data. The inability of shallow models to solve XOR highlighted the importance of architectural depth and non-linear activation functions. Shallow networks in such cases are prone to underfitting, where they fail to capture the necessary complexity of the data.

These limitations are addressed by multi-layer perceptrons, which introduce hidden layers that transform the input space and allow linear separation in the transformed representation. Through training with backpropagation, the network can automatically learn the parameters required to model XOR correctly. XOR remains a standard teaching example because it clearly shows how architectural depth enables models to capture patterns that shallow networks cannot.