What is Zero Shot Learning?

Team Thinkstack

June 11, 2025


Zero-shot learning (ZSL) enables models to recognize and categorize inputs that belong to classes not present in their training data. Unlike supervised learning, which requires labeled examples for every target class, ZSL generalizes to unseen classes using auxiliary information such as class descriptions or semantic attributes.

It works by mapping both familiar and unfamiliar classes into a common semantic space, allowing the model to compare new inputs against descriptive information learned during training. During inference, it maps unseen inputs into the same space and identifies the closest matching class based on semantic similarity. This allows the model to perform classification without access to labeled examples of the new class.

Zero-shot learning differs from few-shot learning (FSL) and one-shot learning (OSL) in its reliance on zero examples for novel classes. While FSL adapts using a handful of labeled samples and OSL generalizes from a single instance, ZSL operates solely through its prior knowledge and auxiliary descriptors.

This framework is especially valuable in settings where labeled data is costly, unavailable, or changing frequently. It supports generalization, reduces annotation costs, and enables rapid adaptation to new categories.

Because it does not rely on labeled examples of new categories, zero-shot learning is applied across many domains:

- Natural language processing (NLP) includes applications like text classification, sentiment analysis, intent recognition, and instruction following.

- Computer vision spans tasks such as image classification, unseen object detection, semantic segmentation, sketch-based retrieval, and text-to-image generation.

- Healthcare uses ZSL for diagnosing rare diseases and conditions with limited or no prior training examples.

- Recommendation systems apply ZSL to address cold-start problems in content or product recommendations.

- Retail and e-commerce rely on ZSL to classify new products using only textual metadata.

- Audio and speech processing involves tasks like voice conversion across speakers or languages without the need for parallel data.

- Robotics and control use ZSL to generalize navigation and manipulation tasks in unfamiliar environments.

- Scientific domains apply ZSL in curve-fitting, material discovery, and mathematical modeling, where training examples are sparse.

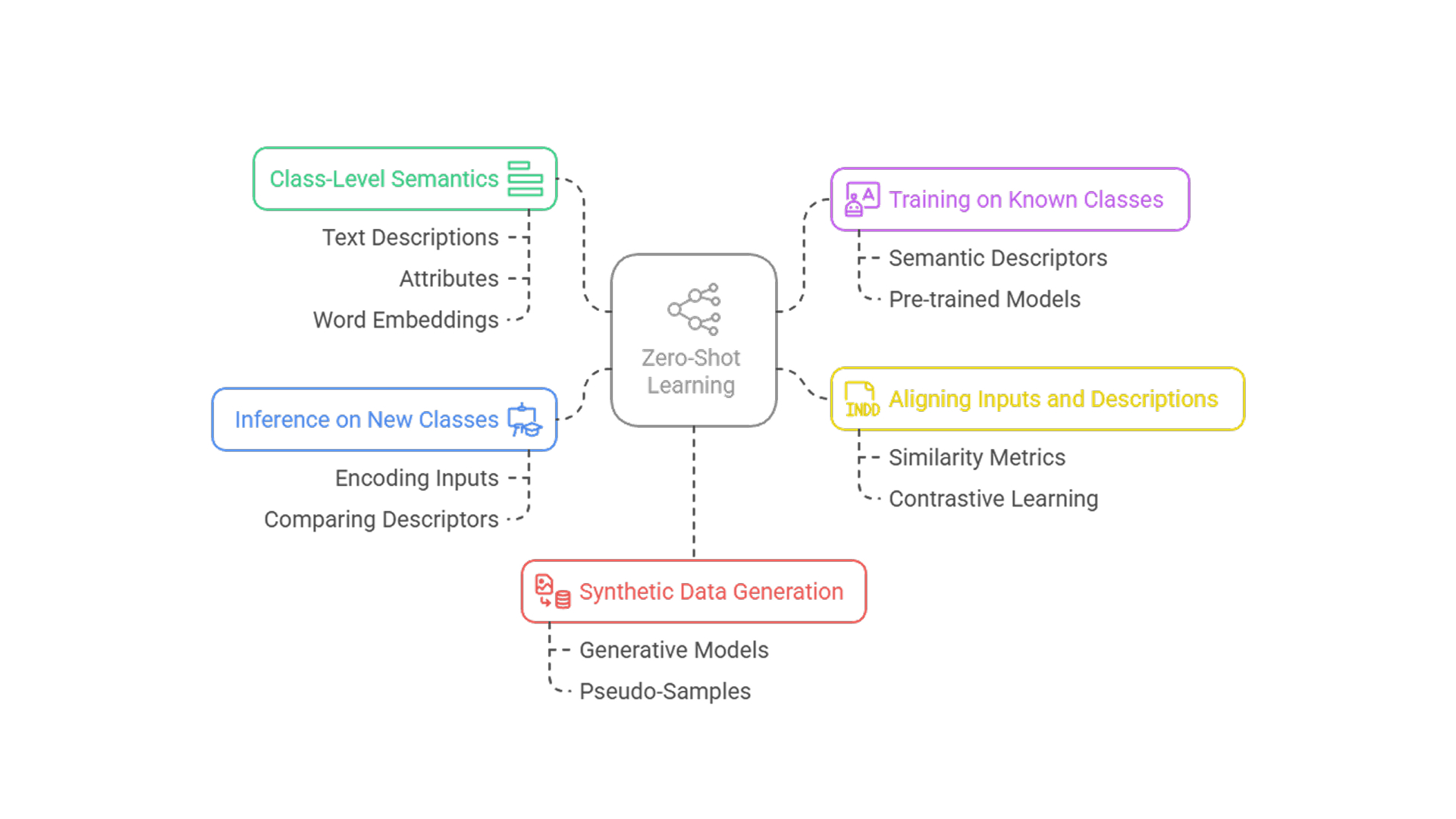

How Zero-Shot Learning Works

Class-level semantics

Each class is defined by auxiliary information (text descriptions, attributes, or word embeddings) that summarizes its core features. These descriptors serve as reference points for prediction and are the only specification available for novel categories.

Training on known classes

The model is trained on labeled examples from a limited set of classes, each linked to its semantic descriptor. The goal is to learn a mapping from input features (e.g., images, text) to the semantic space where class descriptors live, typically optimized through backpropagation during training. Pre-trained models like ResNet, BERT, or CLIP often initialize this mapping with general-purpose representations, and training stability may be influenced by hyperparameters like batch size.
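As a rough illustration of this training step, the sketch below fits a linear map from input features to an attribute space using plain gradient descent on a squared-error objective. The class names, attribute vectors, 2-D features, and hyperparameters are all made-up assumptions. A real system would typically start from a pre-trained encoder and use a deep learning framework rather than hand-written loops.

```python
# Toy sketch: learn a linear map from input features to attribute space.
# All data, names, and hyperparameters here are illustrative assumptions.

# Hypothetical class descriptors: [has_hooves, has_stripes, is_carnivore]
SEEN_DESCRIPTORS = {
    "horse": [1.0, 0.0, 0.0],
    "tiger": [0.0, 1.0, 1.0],
}

# Made-up 2-D input features standing in for encoder outputs
FEATURES = [[1.0, 0.0], [0.8, 0.2], [0.0, 1.0], [0.2, 0.8]]
LABELS = ["horse", "horse", "tiger", "tiger"]


def project(weights, x):
    """Map a feature vector into the semantic (attribute) space."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def train_linear_map(features, labels, descriptors, lr=0.5, epochs=200):
    """Fit the weights by gradient descent on a squared-error objective."""
    in_dim = len(features[0])
    out_dim = len(next(iter(descriptors.values())))
    weights = [[0.0] * in_dim for _ in range(out_dim)]
    n = len(features)
    for _ in range(epochs):
        grad = [[0.0] * in_dim for _ in range(out_dim)]
        for x, label in zip(features, labels):
            target = descriptors[label]
            pred = project(weights, x)
            for j in range(out_dim):
                err = pred[j] - target[j]
                for i in range(in_dim):
                    grad[j][i] += err * x[i] / n
        for j in range(out_dim):
            for i in range(in_dim):
                weights[j][i] -= lr * grad[j][i]
    return weights


W = train_linear_map(FEATURES, LABELS, SEEN_DESCRIPTORS)
horse_like = project(W, [1.0, 0.0])  # should land near the "horse" descriptor
tiger_like = project(W, [0.0, 1.0])  # should land near the "tiger" descriptor
```

After training, inputs from a seen class project close to that class's descriptor, which is what later allows comparison against descriptors of classes that never appeared in training.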

Aligning inputs and descriptions

The training objective is to bring input representations close to their correct class descriptors. A shared semantic space is formed, where semantically similar inputs and classes are positioned nearby. Similarity metrics (e.g., cosine similarity), alignment techniques like contrastive learning, and attention mechanisms help shape this space by emphasizing relevant semantic features.
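One common way to express such an alignment objective is a softmax cross-entropy over cosine similarities, in the style of contrastive losses. The sketch below is a minimal version under assumed names (`contrastive_loss`, `temperature`) and toy 2-D embeddings. It only computes the loss value and is not the formulation of any specific model.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def contrastive_loss(input_embs, class_embs, labels, temperature=0.1):
    """Mean cross-entropy of each input against all class descriptors.

    labels[i] is the index in class_embs of the correct class for input i.
    The temperature value is an illustrative assumption.
    """
    total = 0.0
    for emb, label in zip(input_embs, labels):
        logits = [cosine(emb, c) / temperature for c in class_embs]
        # Numerically stable log-sum-exp
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denom - logits[label]
    return total / len(labels)


# Toy descriptors for two classes in a shared 2-D space
class_embs = [[1.0, 0.0], [0.0, 1.0]]

aligned = contrastive_loss([[1.0, 0.0]], class_embs, labels=[0])
misaligned = contrastive_loss([[1.0, 0.0]], class_embs, labels=[1])
```

The loss is small when an input sits next to its own class descriptor and large when it sits next to a different one, so minimizing it pulls matching pairs together and pushes mismatched pairs apart.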

Inference on new classes

At test time, the model encodes a new input into the semantic space using the same encoder. It then compares this representation to the descriptors of the target classes. The class with the highest similarity score becomes the predicted label.
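The inference step can be sketched as a nearest-descriptor lookup. In the example below, the attribute vectors, the class names, and the already-encoded input are illustrative assumptions. In practice the input embedding would come from the trained encoder.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def predict(embedding, descriptors):
    """Return the class whose descriptor is most similar to the embedding."""
    return max(descriptors, key=lambda name: cosine(embedding, descriptors[name]))


# Hypothetical descriptors: [has_hooves, has_stripes, is_carnivore]
descriptors = {
    "horse": [1.0, 0.0, 0.0],
    "tiger": [0.0, 1.0, 1.0],
    "zebra": [1.0, 1.0, 0.0],  # assumed to have no training examples
}

# Made-up encoder output for a new image: hooves and stripes, not a carnivore
embedding = [0.9, 0.8, 0.1]
label = predict(embedding, descriptors)
```

Here `label` is `"zebra"`, even though only the zebra's attribute description is supplied and no zebra examples are involved.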

Synthetic data generation

Some methods use generative models such as variational autoencoders (VAEs) or GANs to create artificial examples of new classes from their descriptions. These pseudo-samples allow training a standard classifier when semantic alignment alone is insufficient.
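The sketch below illustrates the pseudo-sample idea with a deliberately simple stand-in for the generator: a fixed linear decoder from attributes to features, plus Gaussian noise. This is not a VAE or GAN, and the decoder matrix, noise level, and data are assumptions chosen for readability. The point is only the workflow: synthesize features for the unseen class, then fit an ordinary classifier (here, nearest centroid) on real and synthetic data together.

```python
import random


def generate_pseudo_samples(descriptor, decoder, n, noise=0.05, seed=0):
    """Toy generator: linear decode of the descriptor plus Gaussian noise."""
    rng = random.Random(seed)
    mean = [sum(w * a for w, a in zip(row, descriptor)) for row in decoder]
    return [[m + rng.gauss(0.0, noise) for m in mean] for _ in range(n)]


def centroid(samples):
    """Component-wise mean of a list of vectors."""
    return [sum(col) / len(samples) for col in zip(*samples)]


def nearest_centroid(x, centroids):
    """Standard classifier: pick the class with the closest centroid."""
    def sq_dist(name):
        return sum((a - b) ** 2 for a, b in zip(x, centroids[name]))
    return min(centroids, key=sq_dist)


# Hypothetical decoder from [has_hooves, has_stripes, is_carnivore] to 2-D features
DECODER = [[1.0, 0.0, 0.0],
           [0.0, 1.0, 0.0]]

# Real (made-up) features for seen classes
real = {
    "horse": [[1.0, 0.0], [0.8, 0.2]],
    "tiger": [[0.0, 1.0], [0.2, 0.8]],
}
# Synthetic features for the unseen class, generated from its description
synthetic_zebra = generate_pseudo_samples([1.0, 1.0, 0.0], DECODER, n=50)

centroids = {name: centroid(samples) for name, samples in real.items()}
centroids["zebra"] = centroid(synthetic_zebra)

prediction = nearest_centroid([0.9, 1.1], centroids)
```

With the toy numbers above, the query point falls closest to the synthetic zebra centroid, so the classifier can output a class it never saw real examples of.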

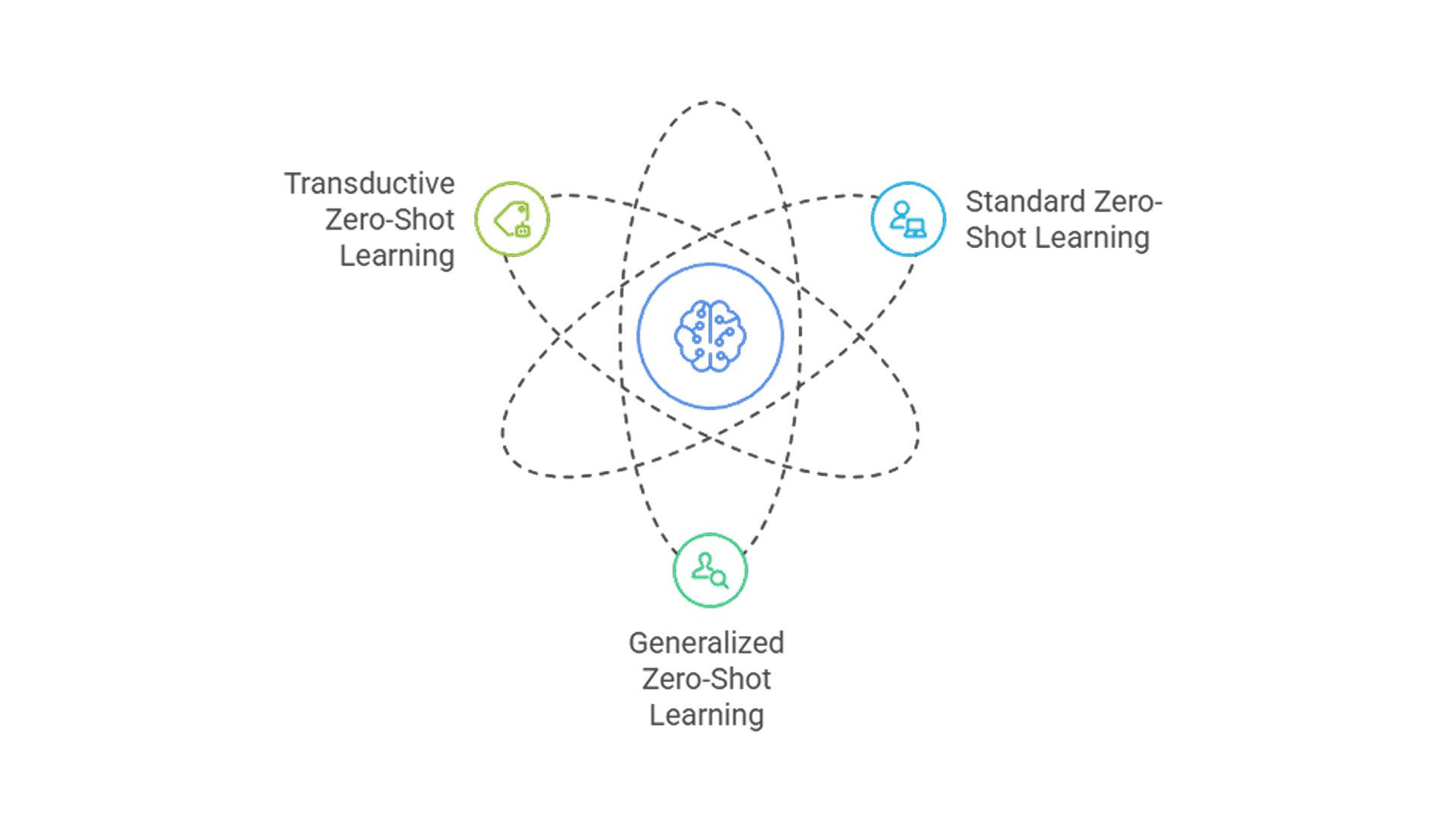

Types of Zero-Shot Learning

Zero-shot learning is not defined by specific model architectures but by the structure of the classification task. Different problem setups determine how unseen classes are incorporated and evaluated.

Three core variants are commonly distinguished:

In standard zero-shot learning, the model is evaluated exclusively on classes that were absent during training. No labeled examples of these classes are seen at any stage. Classification relies entirely on auxiliary information, such as attributes or text descriptions, to map unseen inputs into a shared semantic space. This isolated formulation highlights the model’s capacity for concept transfer but does not reflect the ambiguity present in real-world deployments.

Generalized zero-shot learning (GZSL) introduces a more realistic condition: test data includes both seen and unseen classes. The model must generalize to novel categories without losing accuracy on previously learned ones. A well-known challenge in this setting is overprediction of seen classes, which arises because the model is trained on labeled examples from those classes only. GZSL methods typically introduce calibration techniques or generative augmentation to correct for this bias and improve the balance between seen-class and unseen-class accuracy.
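One simple calibration idea is to subtract a constant from the scores of seen classes before taking the maximum, sometimes called calibrated stacking. The sketch below assumes similarity scores are already computed. The function name, the score values, and the offset `gamma` are illustrative, and in practice `gamma` would be tuned on held-out data.

```python
def calibrated_predict(scores, seen_classes, gamma):
    """Penalize seen-class scores by gamma, then pick the best class."""
    adjusted = {
        name: (score - gamma) if name in seen_classes else score
        for name, score in scores.items()
    }
    return max(adjusted, key=adjusted.get)


# Made-up similarity scores for one test input
scores = {"horse": 0.80, "tiger": 0.30, "zebra": 0.75}
seen = {"horse", "tiger"}

uncalibrated = calibrated_predict(scores, seen, gamma=0.0)
calibrated = calibrated_predict(scores, seen, gamma=0.1)
```

Without calibration the seen class `"horse"` wins narrowly. With a small penalty on seen classes the prediction flips to the unseen class `"zebra"`. A larger `gamma` favors unseen classes more strongly, at the cost of seen-class accuracy.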

Transductive zero-shot learning extends the framework by incorporating unlabeled examples from unseen classes during training. Although no labels are provided, access to the feature distribution of novel categories allows the model to better align semantic descriptors with input space structure. This setup blends ZSL with elements of semi-supervised learning and often improves generalization when test-time data is available in advance.
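A very simplified way to picture the transductive setting is prototype refinement: start from class prototypes derived from descriptors, then shift them toward the unlabeled unseen-class data using k-means-style updates. The sketch below is one such illustration, not a specific published method. The class names, starting prototypes, and data points are all assumptions.

```python
def sq_dist(u, v):
    """Squared Euclidean distance between two vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def refine_prototypes(prototypes, unlabeled, iterations=5):
    """Move descriptor-based prototypes toward clusters in unlabeled data."""
    protos = {name: list(p) for name, p in prototypes.items()}
    for _ in range(iterations):
        groups = {name: [] for name in protos}
        # Assign each unlabeled point to its nearest current prototype
        for x in unlabeled:
            nearest = min(protos, key=lambda name: sq_dist(x, protos[name]))
            groups[nearest].append(x)
        # Replace each prototype by the mean of its assigned points
        for name, members in groups.items():
            if members:
                protos[name] = [sum(col) / len(members) for col in zip(*members)]
    return protos


# Hypothetical prototypes for two unseen classes, projected from their descriptors
initial = {"zebra": [1.0, 1.0], "okapi": [0.5, 1.5]}

# Unlabeled feature vectors from the unseen classes (no labels attached)
unlabeled = [
    [1.2, 0.8], [1.3, 0.7], [1.1, 0.9],
    [0.3, 1.7], [0.2, 1.8], [0.4, 1.6],
]

refined = refine_prototypes(initial, unlabeled)
```

The refined prototypes end up at the centers of the two unlabeled clusters, roughly `[1.2, 0.8]` and `[0.3, 1.7]`, rather than where the descriptors alone placed them. This mirrors how access to the unseen-class feature distribution can correct a misaligned semantic mapping.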

Conclusion

Zero-shot learning enables classification of classes for which no labeled examples are available, making it especially useful when data is scarce or evolving. By leveraging auxiliary information, it extends models to new categories without retraining on labeled examples of those categories.

Despite its promise, zero-shot learning faces known challenges like bias toward seen classes, domain shift, and dependence on descriptor quality. Generalized and transductive variants further expose these limits in real-world settings.

Ongoing research in generative modeling, semantic embedding, and calibration techniques continues to address these gaps. As models are applied to more open-ended tasks, zero-shot learning remains an essential approach for building systems that can scale and adapt without needing new labeled data.