What is Model Context Protocol (MCP)?

Team Thinkstack

June 02, 2025

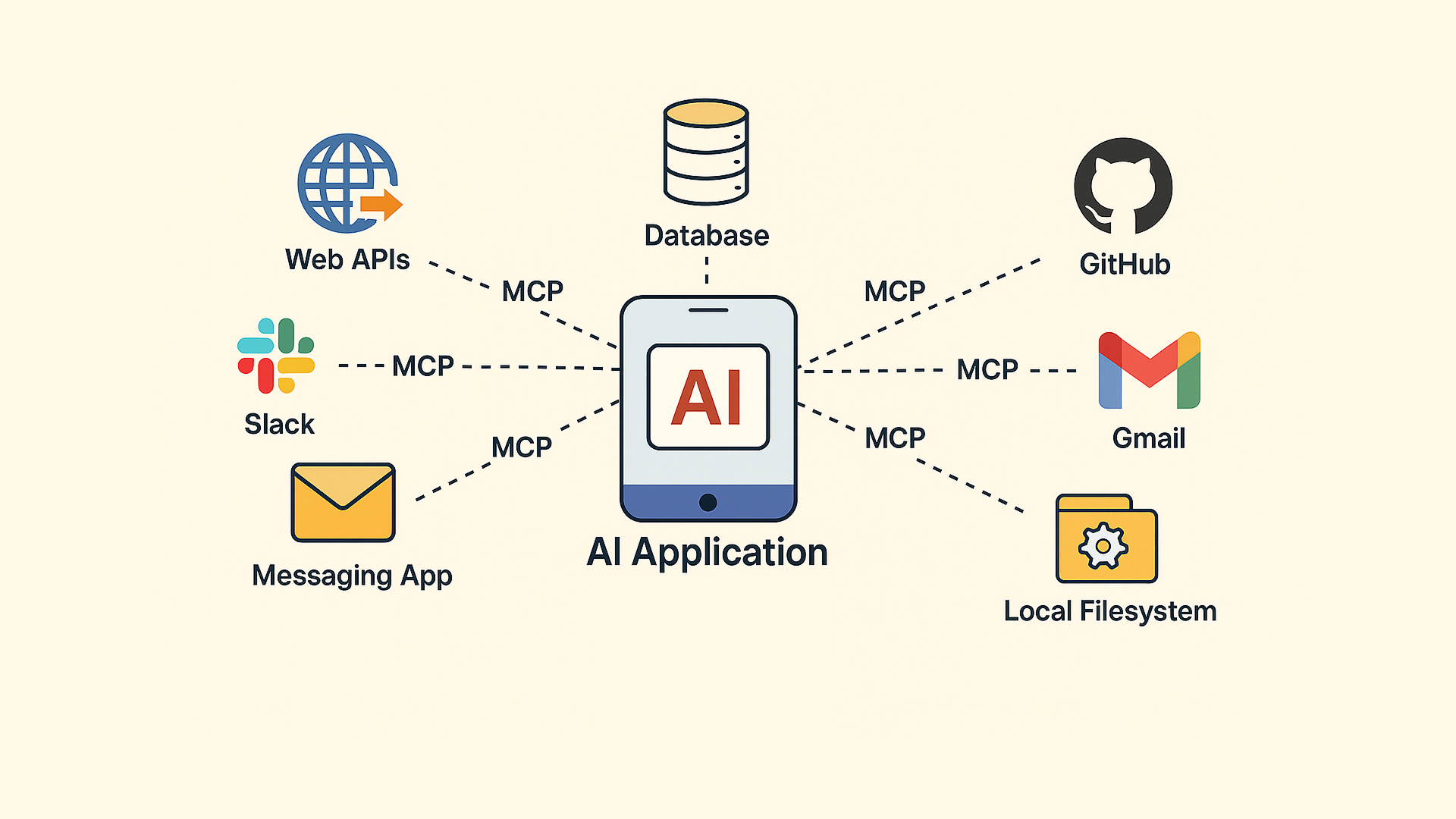

Most AI models today are still disconnected from the real systems people use daily, like file storage, messaging apps, databases, APIs, and more. While models have become more powerful, their real-world usefulness remains limited when they can’t operate with live data or perform actions outside a conversation.

The Model Context Protocol (MCP) is an open standard and open-source framework introduced by Anthropic in November 2024. It provides a universal way for AI models, particularly large language models (LLMs), to connect with external data sources, tools, and applications, replacing scattered, one-off connectors with a standard, modular server interface. Developers build an MCP-compliant server once, and it works with any compatible host. With M AI applications and N tools, this turns the M×N problem of building a custom connector for every pairing into an M+N setup: one client implementation per application and one server per tool.
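To make the M×N versus M+N arithmetic concrete, here is a minimal sketch. The function names and the counts are hypothetical, chosen only for illustration.

```python
def point_to_point_integrations(num_apps: int, num_tools: int) -> int:
    """Without a shared protocol, every app needs its own connector per tool."""
    return num_apps * num_tools


def shared_protocol_integrations(num_apps: int, num_tools: int) -> int:
    """With a shared protocol, each app and each tool is integrated once."""
    return num_apps + num_tools


if __name__ == "__main__":
    apps, tools = 4, 25  # hypothetical counts
    print(point_to_point_integrations(apps, tools))   # 100
    print(shared_protocol_integrations(apps, tools))  # 29
```

The gap widens as either side grows, which is why a common interface matters most in ecosystems with many tools.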

Security is part of the design: hosts are expected to obtain user consent before sharing data or invoking tools. Servers can be run in sandboxed environments, and developers can enforce role-based access and custom authentication to protect sensitive systems.

Traditional approaches often suffer from fragmentation, poor interoperability, and high integration overhead. Without a common standard, each AI model needs a custom-built integration for every tool, and vice versa. Each integration becomes a one-off project, often relying on hardcoded API calls or custom middleware. The result is redundant development work across teams and platforms, inconsistent behavior across integrations, high maintenance costs as APIs change and models are updated, and slow deployment cycles.

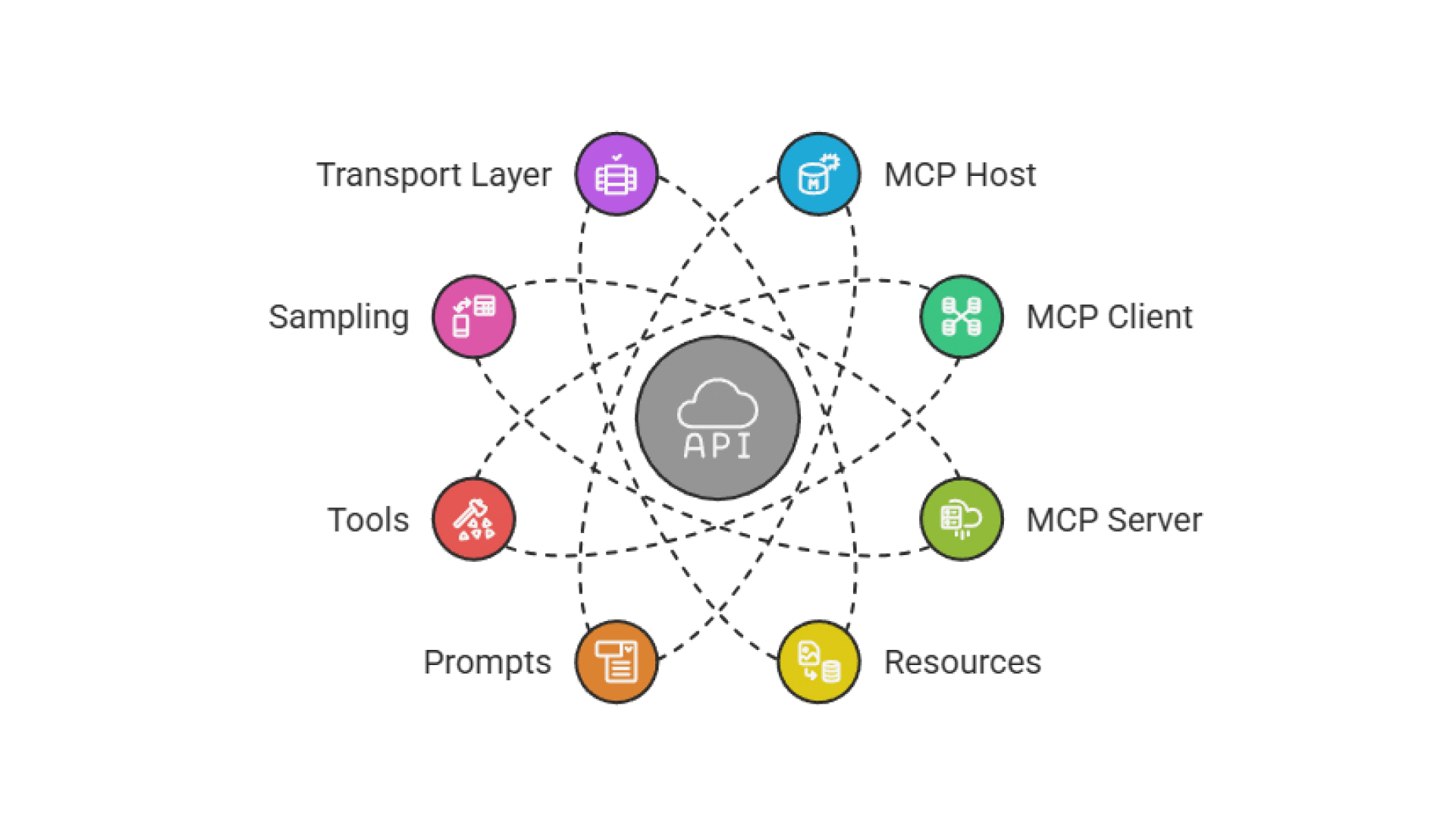

Core Components of MCP Architecture

The Model Context Protocol (MCP) uses a client–server architecture that connects AI models to external tools, data sources, and systems in a modular, secure, and scalable way through four core components working in coordination.

1. MCP host is the environment where users interact with an AI-powered application. It handles user inputs and delegates context-related tasks to MCP clients. It orchestrates interactions and manages connections to multiple servers.

2. MCP client operates within the host and acts as a bridge between the host and an individual MCP server. Each client maintains a one-to-one session with a specific server, translating host requests into protocol-compliant messages and handling the returned data. It manages the session, handles errors, and routes responses back to the host.

3. MCP server provides access to a domain-specific set of tools, resources, or prompts. It interfaces with external systems like APIs, databases, or file systems, exposes their capabilities in a standardized, modular, and secure way, and can run locally or remotely. Servers work with four key primitives. The first three are exposed by the server itself, while the fourth, sampling, is a capability the client offers to the server:

- Resources surface structured or unstructured data, like files, documents, or query results, that the model can reference during reasoning.

- Prompts are predefined templates or workflows that guide the model through standard tasks, such as summarizing a document or formatting a response.

- Tools are executable actions models can invoke to interact with external systems, like querying an API, updating a record, or running a script, based on a clear input/output schema.

- Sampling allows servers to delegate reasoning steps back to the model mid-process, enabling dynamic co-processing or multi-step logic.

4. Transport layer handles data exchange between clients and servers, with messages encoded as JSON-RPC 2.0. It supports local communication via standard input/output (stdio) and remote communication over HTTP with Server-Sent Events (SSE), enabling real-time, bidirectional communication for dynamic interactions.
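The relationship between the components can be sketched with a few in-memory classes. This is a toy model rather than an MCP implementation: `ToyHost`, `ToyClient`, `ToyServer`, and the example tools are all hypothetical names, and direct method calls stand in for the transport layer.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ToyServer:
    """Stands in for an MCP server: a named set of tools, resources, prompts."""
    name: str
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)
    resources: Dict[str, str] = field(default_factory=dict)
    prompts: Dict[str, str] = field(default_factory=dict)


@dataclass
class ToyClient:
    """One client holds exactly one server session (one-to-one)."""
    server: ToyServer

    def list_tools(self) -> List[str]:
        return sorted(self.server.tools)

    def call_tool(self, tool: str, arguments: Dict[str, str]) -> str:
        if tool not in self.server.tools:
            raise KeyError(f"{self.server.name} has no tool named {tool!r}")
        return self.server.tools[tool](**arguments)


class ToyHost:
    """The host creates one client per server and routes calls to them."""

    def __init__(self, servers: List[ToyServer]) -> None:
        self.clients = {server.name: ToyClient(server) for server in servers}

    def available_tools(self) -> Dict[str, List[str]]:
        return {name: client.list_tools() for name, client in self.clients.items()}

    def call(self, server: str, tool: str, arguments: Dict[str, str]) -> str:
        return self.clients[server].call_tool(tool, arguments)


files = ToyServer(
    name="files",
    tools={"read_file": lambda path: f"<contents of {path}>"},
    resources={"file:///notes.txt": "meeting notes"},
)
tickets = ToyServer(
    name="tickets",
    tools={"open_ticket": lambda title: f"ticket created: {title}"},
    prompts={"triage": "Classify this ticket by urgency: {text}"},
)
host = ToyHost([files, tickets])
```

Calling `host.call("tickets", "open_ticket", {"title": "VPN down"})` routes through the client bound to the `tickets` server, mirroring how a host delegates work without knowing how each server is implemented.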

How MCP Works

At its foundation, MCP uses JSON-RPC 2.0, a lightweight, standardized protocol for remote procedure calls encoded in JSON. This structure enables consistent, language-neutral exchanges between the host, clients, and servers. MCP supports four main message types:

- Requests are messages that expect a reply. Clients send them to invoke tools, retrieve resources, or fetch prompts, and servers can send them too, for example to ask for sampling.

- Responses are returned by the receiving side with data or confirmation of completed actions.

- Errors are used to report failed requests, along with structured details about the issue.

- Notifications are one-way messages, sent by either side, that provide updates without expecting a reply.
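The four message types can be told apart by their JSON-RPC 2.0 fields alone. The sketch below shows one example of each and a small classifier. The helper names are hypothetical, the method names follow the public MCP specification as best understood here, and the newline-delimited framing reflects how the stdio transport is commonly described, so treat both as assumptions to check against the spec.

```python
import json
from typing import Any, Dict

Message = Dict[str, Any]

# One example of each message type.
request: Message = {"jsonrpc": "2.0", "id": 1, "method": "tools/list", "params": {}}
response: Message = {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}
error: Message = {
    "jsonrpc": "2.0",
    "id": 2,
    "error": {"code": -32601, "message": "Method not found"},
}
notification: Message = {"jsonrpc": "2.0", "method": "notifications/tools/list_changed"}


def classify(message: Message) -> str:
    """Classify a JSON-RPC 2.0 message by which fields are present."""
    if message.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "method" in message:
        # A method with an id expects a reply; without an id it is one-way.
        return "request" if "id" in message else "notification"
    if "error" in message:
        return "error"
    if "result" in message:
        return "response"
    raise ValueError("message has neither method, result, nor error")


def to_stdio_line(message: Message) -> str:
    """Serialize one message as a single newline-terminated line."""
    return json.dumps(message, separators=(",", ":")) + "\n"


def from_stdio_line(line: str) -> Message:
    """Parse one line back into a message."""
    return json.loads(line)
```

Because `json.dumps` escapes newlines inside strings, each serialized message occupies exactly one line, which keeps message boundaries unambiguous on a stream.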

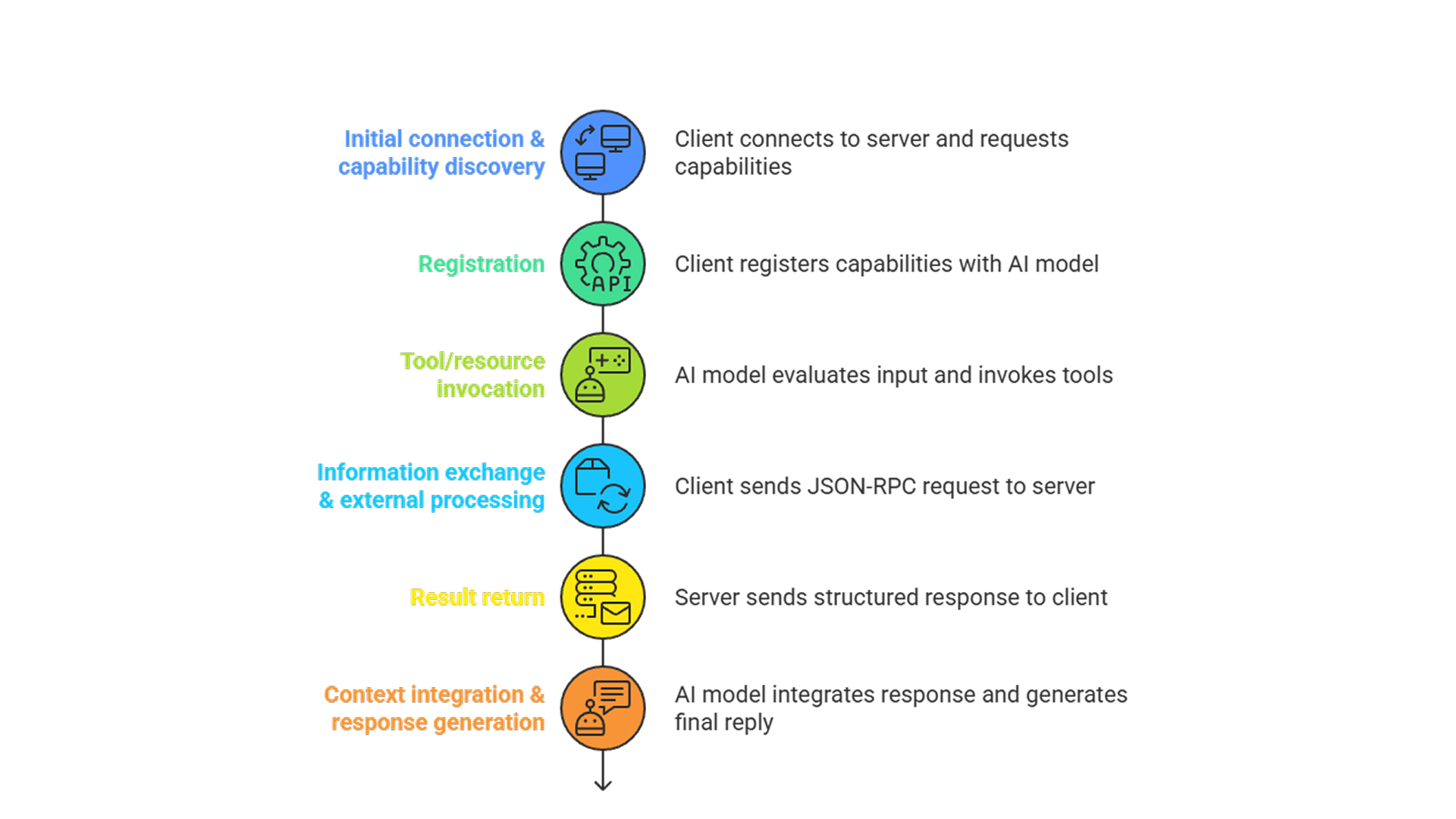

Step-by-Step Workflow

1. Initial connection & capability discovery

When a host application launches, each embedded MCP client connects to its configured server(s). The client sends a discovery request asking what capabilities the server supports, such as tools, resources, or prompts. The server responds with structured metadata describing what it can do.
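A rough sketch of what this exchange might look like on the wire follows. The `initialize`, `tools/list`, `resources/list`, and `prompts/list` method names and the `protocolVersion`, `capabilities`, `clientInfo`, and `serverInfo` fields follow the public MCP specification as best understood here. The client and server names, the version string, and the helper functions are illustrative assumptions.

```python
from typing import Any, Dict, List

Message = Dict[str, Any]


def build_initialize_request(request_id: int) -> Message:
    """First message of a session: the client introduces itself."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": "2025-03-26",  # assumed spec revision
            "capabilities": {},
            "clientInfo": {"name": "example-client", "version": "0.1.0"},
        },
    }


# Hypothetical reply from a server that offers tools and resources only.
initialize_response: Message = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "protocolVersion": "2025-03-26",
        "capabilities": {"tools": {}, "resources": {}},
        "serverInfo": {"name": "example-server", "version": "0.1.0"},
    },
}


def discovery_requests(response: Message, first_id: int) -> List[Message]:
    """Build one list request per capability the server advertised."""
    capabilities = response["result"]["capabilities"]
    advertised = [k for k in ("tools", "resources", "prompts") if k in capabilities]
    return [
        {"jsonrpc": "2.0", "id": first_id + offset, "method": f"{kind}/list"}
        for offset, kind in enumerate(advertised)
    ]
```

With the reply above, `discovery_requests(initialize_response, first_id=2)` yields a `tools/list` and a `resources/list` request and skips prompts, since the server did not advertise them.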

2. Registration

The client registers these capabilities and passes the information to the AI model, making them available for use during the session. This gives the model full visibility into what’s accessible, without requiring hardcoded integrations.
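Registration can be pictured as building a lookup table from the discovery results. In this sketch, `CapabilityRegistry`, the `weather` server, and the `get_weather` tool are hypothetical. The `name`, `description`, and `inputSchema` fields match the tool listing format in the public MCP specification as best understood here.

```python
from typing import Any, Dict

# Hypothetical result of a tools/list request.
tools_list_result: Dict[str, Any] = {
    "tools": [
        {
            "name": "get_weather",
            "description": "Look up the current weather for a city",
            "inputSchema": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        }
    ]
}


class CapabilityRegistry:
    """Remembers which server offers which tool."""

    def __init__(self) -> None:
        self._tools: Dict[str, Dict[str, Any]] = {}

    def register(self, server_name: str, list_result: Dict[str, Any]) -> None:
        for tool in list_result.get("tools", []):
            self._tools[tool["name"]] = {"server": server_name, **tool}

    def server_for(self, tool_name: str) -> str:
        return self._tools[tool_name]["server"]

    def describe_for_model(self) -> str:
        """Render a plain-text tool summary to place in the model's context."""
        return "\n".join(
            f"- {name} ({info['server']}): {info.get('description', '')}"
            for name, info in sorted(self._tools.items())
        )


registry = CapabilityRegistry()
registry.register("weather", tools_list_result)
```

The summary from `describe_for_model()` is only one possible way to surface tools to a model. Hosts that use a native tool-calling API would pass the schemas in that API's format instead.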

3. Tool/resource invocation

As the user interacts with the AI, the model evaluates the input and determines if it needs to invoke an external tool, retrieve a resource, or use a predefined prompt. It decides what to do based on the capabilities registered during discovery.

4. Information exchange & external processing

The client translates the model’s decision into a JSON-RPC request and sends it to the appropriate MCP server. The server then carries out the requested task, interacting with APIs, databases, or local systems, and returns the result. In more complex workflows, the server can also issue a sampling request, asking the client to have the model generate text mid-process.
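The request and the server-side handling might look roughly like this. The `tools/call` method, the `name` and `arguments` parameters, and the `content` and `isError` result fields follow the public MCP specification as best understood here. The helper functions and the tool table are hypothetical, and a plain dictionary of Python callables stands in for real external systems.

```python
from typing import Any, Callable, Dict

Message = Dict[str, Any]


def build_tool_call(request_id: int, name: str, arguments: Dict[str, Any]) -> Message:
    """Client side: wrap the model's decision in a JSON-RPC request."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def handle_tool_call(request: Message, tools: Dict[str, Callable[..., Any]]) -> Message:
    """Server side: run the named tool and wrap its output in a response."""
    params = request["params"]
    tool = tools.get(params["name"])
    if tool is None:
        # Unknown tool: report a protocol-level error (invalid params).
        return {
            "jsonrpc": "2.0",
            "id": request["id"],
            "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"},
        }
    try:
        text, is_error = str(tool(**params.get("arguments", {}))), False
    except Exception as exc:
        # The tool ran but failed: report it inside the result instead.
        text, is_error = str(exc), True
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"content": [{"type": "text", "text": text}], "isError": is_error},
    }
```

Separating protocol errors from tool execution failures lets the model see why a tool failed and decide whether to retry with different arguments.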

5. Result return

The server sends back a structured response, whether it’s data, confirmation of an action, or a generated output, which the client receives and passes to the model.
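On the receiving end, the client has to unpack that structured response before handing anything to the model. This is a minimal sketch that assumes the result carries a `content` list of typed blocks and an `isError` flag, as in the public MCP specification as best understood here. `ToolCallError` and `extract_text` are hypothetical names.

```python
from typing import Any, Dict

Message = Dict[str, Any]


class ToolCallError(RuntimeError):
    """Raised when a tool call fails at the protocol or tool level."""


def extract_text(response: Message) -> str:
    """Return the text blocks of a tool result, or raise on failure."""
    if "error" in response:
        err = response["error"]
        raise ToolCallError(f"protocol error {err['code']}: {err['message']}")
    result = response["result"]
    text = "\n".join(
        block["text"]
        for block in result.get("content", [])
        if block.get("type") == "text"
    )
    if result.get("isError"):
        raise ToolCallError(text)
    return text
```

Non-text blocks such as images are skipped here for brevity. A fuller client would pass them along in whatever form its model accepts.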

6. Context integration & response generation

The AI model integrates the server’s response into its current reasoning and generates a final reply for the user. This output is now informed by live, external context, enabling the model to act with relevance and precision.
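The final step can be pictured as appending the tool output to the conversation before the model produces its reply. The message layout below is a generic chat-style list rather than anything MCP defines, and `stub_model_reply` is a placeholder for a real model call.

```python
from typing import Dict, List

ChatMessage = Dict[str, str]


def integrate_tool_result(
    conversation: List[ChatMessage], tool_name: str, tool_text: str
) -> List[ChatMessage]:
    """Return a new conversation with the tool output appended as context."""
    return conversation + [{"role": "tool", "name": tool_name, "content": tool_text}]


def stub_model_reply(conversation: List[ChatMessage]) -> str:
    """Placeholder for a real model: answers from the latest tool output."""
    tool_outputs = [m["content"] for m in conversation if m["role"] == "tool"]
    if not tool_outputs:
        return "I do not have live data for that."
    return f"Based on the latest data: {tool_outputs[-1]}"


conversation = [{"role": "user", "content": "What is the weather in Paris?"}]
updated = integrate_tool_result(conversation, "get_weather", "Paris: 18 C, cloudy")
reply = stub_model_reply(updated)
```

Here `reply` is grounded in the tool output, while the same stub called on the original `conversation` would fall back to saying it has no live data.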

How MCP Compares to Existing Approaches

Retrieval-augmented generation (RAG)

RAG retrieves documents to enrich prompts but typically appends them as raw, unstructured text, offering no built-in support for actions. MCP improves on this by using resource primitives to structure retrieved data and tool primitives to act on it, such as querying databases or calling APIs. MCP can also embed RAG within its framework, combining passive retrieval with active execution.

Plugins or Function Calling

While platforms like OpenAI introduced function calling and plugins, these were platform-specific and required bespoke integrations. MCP standardizes this through tool, resource, and prompt primitives, allowing capabilities to be defined once and used across any compatible model or host. This decouples integration from model internals, promoting interoperability and maintainability.
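One way to see the decoupling is that a tool described once in MCP terms can be mechanically translated into a platform-specific format by the host. The sketch below assumes the commonly documented Chat Completions `tools` layout for function calling, which should be verified against current platform documentation. The tool definition and the function name are hypothetical.

```python
from typing import Any, Dict

# Hypothetical tool definition, as an MCP server might list it.
mcp_tool: Dict[str, Any] = {
    "name": "get_weather",
    "description": "Look up the current weather for a city",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def to_function_calling_format(tool: Dict[str, Any]) -> Dict[str, Any]:
    """Translate an MCP tool listing entry into a function-calling entry."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # Both sides describe arguments with JSON Schema, so it carries over.
            "parameters": tool["inputSchema"],
        },
    }


converted = to_function_calling_format(mcp_tool)
```

The server author writes the definition once, and each host performs whatever translation its own model requires.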

Conclusion

The Model Context Protocol (MCP) is a practical advance in connecting AI with real-world systems. It enables structured, two-way communication between models and external tools, allowing assistants to act more like agents, capable of reasoning, accessing live data, and taking meaningful actions in real time.

MCP is especially valuable in domains like healthcare, finance, enterprise software, and personal productivity, where secure, real-time access to tools and information is critical. While it’s still evolving and comes with challenges such as security concerns and setup complexity, its open standard, modular design, and developer-friendly tooling support broad adoption.

As more AI systems implement MCP, applications become more capable, personalized, and reliable, able to act, not just respond. With ongoing community support and improving security practices, MCP is well-positioned to become a foundational layer in the modern AI ecosystem.